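Having read:

[Notes] The logic/workflow and caveats of crawling web pages, analyzing page content, and simulating website login

you should understand the general workflow of crawling a web page. Combined with the tools introduced earlier in:

[Summary] Browser developer tools (F12 in IE9 and Ctrl+Shift+I in Chrome): a powerful aid for web page analysis

it should be clear how to use those tools to fetch a page, analyze its source, and obtain the content you need.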

Below, a concrete example shows how to implement this process of fetching a web page and extracting the required content in C#:

Suppose the requirement is to start from my (crifan's) page on Songtaste:

http://www.songtaste.com/user/351979/

First fetch the page's HTML source, then extract my Songtaste user name from it: crifan

The corresponding HTML is:

```html
<h1 class="h1user">crifan</h1>
```

This task is relatively simple. The following explains how to implement it in C#.

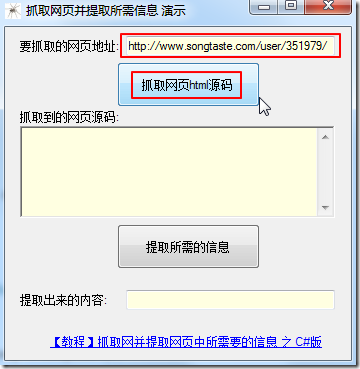

Create a new C# project targeting .NET Framework 2.0, and add a few basic controls to display the results.

Then write the code that fetches the HTML:

```csharp
using System.IO;
using System.Net;
using System.Text; //needed for Encoding

//step1: get html from url
string urlToCrawl = txbUrlToCrawl.Text;
//generate http request
HttpWebRequest req = (HttpWebRequest)WebRequest.Create(urlToCrawl);
//use GET method to get url's html
req.Method = "GET";
//use request to get response
HttpWebResponse resp = (HttpWebResponse)req.GetResponse();
//songtaste's html uses charset GB2312; GBK is a superset of GB2312,
//so decode with GBK, otherwise the Chinese text comes out garbled
string htmlCharset = "GBK";
Encoding htmlEncoding = Encoding.GetEncoding(htmlCharset);
StreamReader sr = new StreamReader(resp.GetResponseStream(), htmlEncoding);
//read out the returned html
string respHtml = sr.ReadToEnd();
//release the reader and the underlying connection
sr.Close();
resp.Close();

rtbExtractedHtml.Text = respHtml;
```

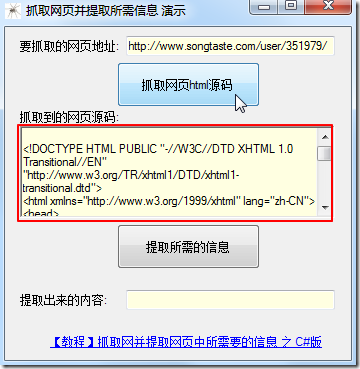

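In the UI, click the "抓取网页html源码" (fetch the page's HTML source) button to get the corresponding HTML.

Note:

Whether you need to care about the HTML's character encoding (charset) depends on your needs; if the page contains Chinese text, as this one does, decoding it with the wrong charset produces garbled output.

If it is unclear why GBK is used here, see:

[Notes] An explanation of the character encoding (charset) formats of HTML page source (GB2312, GBK, UTF-8, ISO8859-1, etc.)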
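Instead of hard-coding the charset, one option is to read it from the response's `Content-Type` value. The following is only a minimal sketch under that assumption: `CharsetSniffer` and `FromContentType` are hypothetical names that are not part of the original project, and pages that declare their charset only in a `<meta>` tag would still need the fallback.

```csharp
using System;
using System.Text.RegularExpressions;

public static class CharsetSniffer
{
    // Hypothetical helper (not in the original project): returns the charset
    // named in a Content-Type value such as "text/html; charset=gb2312",
    // or the supplied fallback when no charset is declared.
    public static string FromContentType(string contentType, string fallback)
    {
        if (string.IsNullOrEmpty(contentType))
        {
            return fallback;
        }

        // Matches charset=xxx, with optional spaces and optional quotes around the name
        Match m = Regex.Match(
            contentType,
            @"charset\s*=\s*[""']?([\w-]+)",
            RegexOptions.IgnoreCase);

        return m.Success ? m.Groups[1].Value : fallback;
    }
}
```

In the fetch code it could be used as `string htmlCharset = CharsetSniffer.FromContentType(resp.ContentType, "GBK");`, so that GBK remains the default for this site.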

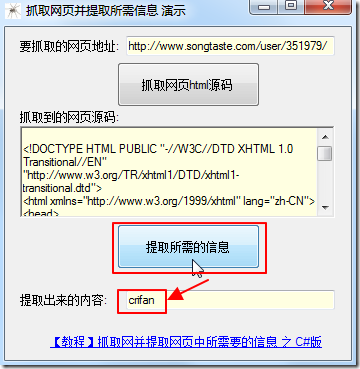

Once the HTML has been fetched, use C#'s regular expression class, Regex, to extract the data we want:

```csharp
using System.Text.RegularExpressions;

//step2: extract expected info
//<h1 class="h1user">crifan</h1>
string h1userP = @"<h1\s+class=""h1user"">(?<h1user>.+?)</h1>";
Match foundH1user = (new Regex(h1userP)).Match(rtbExtractedHtml.Text);
if (foundH1user.Success)
{
    //extracted the expected h1user's value
    txbExtractedInfo.Text = foundH1user.Groups["h1user"].Value;
}
else
{
    txbExtractedInfo.Text = "Not found h1 user !";
}
```

Click "提取所需的信息" (extract the required information) to extract the h1user value we want, crifan:
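To try the pattern without the form and its controls, the same named-group match can be wrapped in a small function. This is only a sketch: `H1UserExtractor` and `Extract` are hypothetical names introduced for illustration, and returning `null` on a miss is an assumption rather than the original behavior.

```csharp
using System;
using System.Text.RegularExpressions;

public static class H1UserExtractor
{
    // Same pattern as in the tutorial: the named group "h1user" captures
    // the text between <h1 class="h1user"> and </h1>
    private static readonly Regex H1UserRegex =
        new Regex(@"<h1\s+class=""h1user"">(?<h1user>.+?)</h1>");

    // Returns the captured user name, or null when the tag is not present
    public static string Extract(string html)
    {
        Match found = H1UserRegex.Match(html);
        return found.Success ? found.Groups["h1user"].Value : null;
    }
}
```

Calling `H1UserExtractor.Extract("<h1 class=\"h1user\">crifan</h1>")` yields `crifan`; the lazy `.+?` stops at the first closing `</h1>`.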

The corresponding complete C# code is:

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Text;
using System.Windows.Forms;
using System.Net;
using System.IO;
using System.Text.RegularExpressions;

namespace crawlWebsiteAndExtractInfo
{
    public partial class frmCrawlWebsite : Form
    {
        public frmCrawlWebsite()
        {
            InitializeComponent();
        }

        private void btnCrawlAndExtract_Click(object sender, EventArgs e)
        {
            //step1: get html from url
            string urlToCrawl = txbUrlToCrawl.Text;
            //generate http request
            HttpWebRequest req = (HttpWebRequest)WebRequest.Create(urlToCrawl);
            //use GET method to get url's html
            req.Method = "GET";
            //use request to get response
            HttpWebResponse resp = (HttpWebResponse)req.GetResponse();
            //songtaste's html uses charset GB2312; GBK is a superset of GB2312,
            //so decode with GBK, otherwise the Chinese text comes out garbled
            string htmlCharset = "GBK";
            Encoding htmlEncoding = Encoding.GetEncoding(htmlCharset);
            StreamReader sr = new StreamReader(resp.GetResponseStream(), htmlEncoding);
            //read out the returned html
            string respHtml = sr.ReadToEnd();
            //release the reader and the underlying connection
            sr.Close();
            resp.Close();

            rtbExtractedHtml.Text = respHtml;
        }

        private void btnExtractInfo_Click(object sender, EventArgs e)
        {
            //step2: extract expected info
            //<h1 class="h1user">crifan</h1>
            string h1userP = @"<h1\s+class=""h1user"">(?<h1user>.+?)</h1>";
            Match foundH1user = (new Regex(h1userP)).Match(rtbExtractedHtml.Text);
            if (foundH1user.Success)
            {
                //extracted the expected h1user's value
                txbExtractedInfo.Text = foundH1user.Groups["h1user"].Value;
            }
            else
            {
                txbExtractedInfo.Text = "Not found h1 user !";
            }
        }

        private void lklTutorialUrl_LinkClicked(object sender, LinkLabelLinkClickedEventArgs e)
        {
            //tutorialUrl is not declared in this listing; it is assumed to be
            //defined elsewhere in the project
            System.Diagnostics.Process.Start(tutorialUrl);
        }
    }
}
```

The complete VS2010 project can be downloaded here:

crawlWebsiteAndExtractInfo_csharp_2012-11-07.7z

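[Summary]

Overall, crawling a website in C# and extracting the required content from the returned HTML source is somewhat more complicated than the earlier Python version.

This is because you have to handle many HTTP-related details by hand: the request, the response, the stream, the character encoding, and so on.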
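One way to contain that manual handling is to put the request, response, stream and encoding steps in a single helper and let `using` blocks release the resources. The sketch below makes that assumption; `HtmlFetcher`, `Fetch` and `ReadAll` are hypothetical names that do not appear in the original project.

```csharp
using System.IO;
using System.Net;
using System.Text;

public static class HtmlFetcher
{
    // Hypothetical helper: decodes the whole stream with the given encoding
    // and disposes the reader (and with it the stream) when done
    public static string ReadAll(Stream stream, Encoding encoding)
    {
        using (StreamReader reader = new StreamReader(stream, encoding))
        {
            return reader.ReadToEnd();
        }
    }

    // Hypothetical helper: sends a GET request to url and returns the body
    // decoded with the given encoding; the response is disposed on exit
    public static string Fetch(string url, Encoding encoding)
    {
        HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
        req.Method = "GET";
        using (HttpWebResponse resp = (HttpWebResponse)req.GetResponse())
        {
            return ReadAll(resp.GetResponseStream(), encoding);
        }
    }
}
```

The button handler could then shrink to something like `rtbExtractedHtml.Text = HtmlFetcher.Fetch(txbUrlToCrawl.Text, Encoding.GetEncoding("GBK"));`.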

When reposting, please credit: 在路上 » [Tutorial] Crawling a web page and extracting the required information: C# version