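【Problem】

I am using C# to fetch information from Amazon.

Specifically, the code uses the AWS API to get the information from Amazon's servers.

Several times already, after obtaining the HttpWebResponse, the code has hung while calling ReadLine on a StreamReader.

So the code has been changed to the following: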

```csharp
XmlDocument xmlDocNoXmlns = awsReqUrlToXmlDoc_noXmlns(awsReqUrl);

/*
 * [Function]
 * aws request url to xml doc, no xmlns version
 * [Input]
 * aws request url
 *
 * [Output]
 * xml doc, no xmlns
 *
 * [Note]
 */
public XmlDocument awsReqUrlToXmlDoc_noXmlns(string awsReqUrl)
{
    XmlDocument xmlDocNoXmlns = new XmlDocument();

    //string respHtml = crl.getUrlRespHtml_multiTry(awsReqUrl, maxTryNum:20);
    string respHtml = _getUrlRespHtml_multiTry_multiTry(awsReqUrl, maxTryNum:100);

    string xmlnsStr = " xmlns=\"" + awsNamespace + "\""; //"http://webservices.amazon.com/AWSECommerceService/2011-08-01"
    string xmlNoXmlns = respHtml.Replace(xmlnsStr, "");
    if (!string.IsNullOrEmpty(xmlNoXmlns))
    {
        xmlDocNoXmlns.LoadXml(xmlNoXmlns);
    }
    else
    {
        //special:
        //when request too much and too frequently, maybe error, such as when :
        //return empty respHtml
        gLogger.Debug("can not get valid respHtml for awsReqUrl=" + awsReqUrl);
    }

    return xmlDocNoXmlns;
}

//just for:
//sometime, getUrlRespHtml_multiTry from amazon will fail, no response
//->maybe some network is not stable, maybe amazon access frequence has some limit
//-> so here to wait sometime to re-do it
private string _getUrlRespHtml_multiTry_multiTry(string awsReqUrl, int maxTryNum = 10)
{
    string respHtml = "";

    for (int tryIdx = 0; tryIdx < maxTryNum; tryIdx++)
    {
        respHtml = crl.getUrlRespHtml_multiTry(awsReqUrl, maxTryNum: maxTryNum);
        if (respHtml != "")
        {
            break;
        }
        else
        {
            //something wrong
            //maybe network is not stable
            //so wait some time, then re-do it
            System.Threading.Thread.Sleep(200); //200 ms
        }
    }

    return respHtml;
}

// valid charset:"GB18030"/"UTF-8", invalid:"UTF8"
public string getUrlRespHtml(string url,
                                Dictionary<string, string> headerDict,
                                string charset,
                                Dictionary<string, string> postDict,
                                int timeout,
                                string postDataStr)
{
    string respHtml = "";

    //HttpWebResponse resp = getUrlResponse(url, headerDict, postDict, timeout);
    HttpWebResponse resp = getUrlResponse(url, headerDict, postDict, timeout, postDataStr);

    //long realRespLen = resp.ContentLength;
    if (resp != null)
    {
        StreamReader sr;
        Stream respStream = resp.GetResponseStream();
        if ((charset != null) && (charset != ""))
        {
            Encoding htmlEncoding = Encoding.GetEncoding(charset);
            sr = new StreamReader(respStream, htmlEncoding);
        }
        else
        {
            sr = new StreamReader(respStream);
        }

        try
        {
            //respHtml = sr.ReadToEnd();
            while (!sr.EndOfStream)
            {
                respHtml = respHtml + sr.ReadLine();
            }

            respStream.Close();
            sr.Close();
            resp.Close();
        }
        catch (Exception ex)
        {
            //[Unsolved] Exception in StreamReader in C#: unhandled ObjectDisposedException, cannot access a closed stream
            //System.ObjectDisposedException
            respHtml = "";
        }
    }

    return respHtml;
}
```

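The goal is that, on failure, the request can be retried up to 100 times, with a sleep added after each failure.

So in theory, as long as the network connection is not lost, the code should eventually run normally and get the corresponding HTML.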
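The retry-with-sleep idea can be isolated from the HTTP code. The following is a minimal sketch, not the code from crifanLib: the class `RetrySketch`, the method `FetchWithRetry`, and the `Func<string>` parameter are hypothetical names introduced only for illustration. It treats an empty string as "failed", the same convention the code above uses.

```csharp
using System;
using System.Threading;

public static class RetrySketch
{
    // Calls fetch() until it returns a non-empty string or maxTryNum attempts
    // have been made, sleeping sleepMs milliseconds between failed attempts.
    // All names here are hypothetical; only the retry pattern comes from the post.
    public static string FetchWithRetry(Func<string> fetch, int maxTryNum = 10, int sleepMs = 200)
    {
        string result = "";
        for (int tryIdx = 0; tryIdx < maxTryNum; tryIdx++)
        {
            result = fetch() ?? "";
            if (result != "")
            {
                break;
            }
            // No need to sleep after the last failed attempt.
            if (tryIdx < maxTryNum - 1)
            {
                Thread.Sleep(sleepMs);
            }
        }
        return result;
    }
}
```

One thing worth keeping in mind with the nested version above: `_getUrlRespHtml_multiTry_multiTry` passes its own `maxTryNum` on to the inner `getUrlRespHtml_multiTry`, so with `maxTryNum:100` the worst case is on the order of 100 × 100 requests, assuming the inner function also loops `maxTryNum` times.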

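However, I have now run into another situation.

At:

```csharp
respHtml = respHtml + sr.ReadLine();
```

the code hung, and hung indefinitely: for tens of seconds, even many minutes, with no response at all.

Since this does not happen during getUrlResponse, the request timeout does not apply.

That is, even if I wanted to detect a timeout on ReadLine, ReadLine itself offers no such mechanism, so there is nothing to work with.

The result is that the HTML still cannot be obtained, and the code still hangs at ReadLine.

I hope to find a more effective solution.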

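【Solution Process】

1. Someone else ran into a problem similar to mine: their code also hung in ReadLine, with no error at all.

2. I previously used ReadToEnd, but saw a similar symptom:

it hung in ReadToEnd, with no error message, just an indefinite hang.

It feels as if the data being read has no end, so the read keeps going and then hangs.

3. I checked the official documentation, which also mentions:

> ReadToEnd assumes that the stream knows when it has reached an end. For interactive protocols in which the server sends data only when you ask for it and does not close the connection, ReadToEnd might block indefinitely because it does not reach an end, and should be avoided.

However, in this case I am fetching HTML from the Amazon server and then reading the content out bit by bit. It does not involve the server waiting for data from me that I never sent while I go on to read, which is the kind of situation that would cause an indefinite hang.

Besides, I am not using ReadToEnd at this point, only ReadLine.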

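4. Something else also came to mind:

When visiting Amazon in a browser, it sometimes happens that a page I try to open hangs and never loads.

I then stop it manually and reopen it, and the page opens immediately.

That is somewhat similar to what happens in the code:

at some moment, an access to Amazon hangs.

Reconnecting fixes it.

5. I had previously seen something called BeginRead. I plan to try it and see whether it solves the problem here.

At the very least I would like to add a timeout, so that when it expires the current connection is abandoned automatically and a new one is made. Hopefully that solves the problem.

I found that after:

```csharp
Stream respStream = resp.GetResponseStream();
```

the Stream respStream does have BeginRead and EndRead.

But they did not seem very convenient to use, so I am giving up on this route for now.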
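For reference, this is roughly what the BeginRead/EndRead route might look like. It is a sketch only, not something the post ended up using: `BeginReadSketch` and `ReadWithTimeout` are hypothetical names, and the timeout is implemented by waiting on the `AsyncWaitHandle` of the `IAsyncResult` that `Stream.BeginRead` returns.

```csharp
using System;
using System.IO;

public static class BeginReadSketch
{
    // Starts an asynchronous read and waits at most timeoutMs for it to finish.
    // Returns the number of bytes read (0 means end of stream).
    // Throws TimeoutException if the read is still pending after timeoutMs.
    public static int ReadWithTimeout(Stream stream, byte[] buffer, int timeoutMs)
    {
        IAsyncResult ar = stream.BeginRead(buffer, 0, buffer.Length, null, null);
        if (!ar.AsyncWaitHandle.WaitOne(timeoutMs))
        {
            // The read is still outstanding here; the caller should close the
            // stream (and the response) instead of reusing it.
            throw new TimeoutException("read did not complete within " + timeoutMs + " ms");
        }
        return stream.EndRead(ar);
    }
}
```

The inconvenience is visible even in this small form: it works on raw bytes, so decoding and line splitting would have to be rebuilt on top of it, and after a timeout the pending read has to be cleaned up by closing the stream.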

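6. Later I found someone's comparison of ReadLine and ReadToEnd:

but they kept calling ReadLine "ReadToLine", which is rather misleading...

7. For now I am still looking for a way to make StreamReader support a timeout.

Here:

Building a StreamReader timeout

someone ran into the same problem, but there is no answer.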
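One generic way to bolt a timeout onto any blocking read is to run it on a worker thread and stop waiting after a deadline. This is a swapped-in technique, not what the post finally adopts, and the names `ReadTimeoutSketch` and `ReadToEndOrNull` are hypothetical. It assumes .NET 4.5 or later for `Task.Run`.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class ReadTimeoutSketch
{
    // Runs reader.ReadToEnd() on a thread-pool thread and waits at most timeoutMs.
    // Returns the text on success, or null if the deadline passes first.
    // If ReadToEnd throws, Task.Wait rethrows it wrapped in an AggregateException.
    public static string ReadToEndOrNull(TextReader reader, int timeoutMs)
    {
        Task<string> readTask = Task.Run(() => reader.ReadToEnd());
        if (readTask.Wait(timeoutMs))
        {
            return readTask.Result;
        }
        // Timed out: the worker thread is still blocked inside ReadToEnd.
        // The caller should close the underlying stream/response to release it.
        return null;
    }
}
```

The main drawback is that giving up on the wait does not cancel the read itself; the blocked thread is only released once the underlying stream is closed or finally returns.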

8. After reading another person's explanation, I figured that using Peek is probably more reliable in my case.

The code was changed to:

```csharp
// valid charset:"GB18030"/"UTF-8", invalid:"UTF8"
public string getUrlRespHtml(string url,
                                Dictionary<string, string> headerDict,
                                string charset,
                                Dictionary<string, string> postDict,
                                int timeout,
                                string postDataStr)
{
    string respHtml = "";

    //HttpWebResponse resp = getUrlResponse(url, headerDict, postDict, timeout);
    HttpWebResponse resp = getUrlResponse(url, headerDict, postDict, timeout, postDataStr);

    //long realRespLen = resp.ContentLength;
    if (resp != null)
    {
        StreamReader sr;
        Stream respStream = resp.GetResponseStream();
        if ((charset != null) && (charset != ""))
        {
            Encoding htmlEncoding = Encoding.GetEncoding(charset);
            sr = new StreamReader(respStream, htmlEncoding);
        }
        else
        {
            sr = new StreamReader(respStream);
        }

        try
        {
            //respHtml = sr.ReadToEnd();

            //while (!sr.EndOfStream)
            //{
            //    respHtml = respHtml + sr.ReadLine();
            //}

            //string curLine = "";
            //while ((curLine = sr.ReadLine()) != null)
            //{
            //    respHtml = respHtml + curLine;
            //}

            while (sr.Peek() > -1) //while not error or not reach end of stream
            {
                respHtml = respHtml + sr.ReadLine();
            }

            respStream.Close();
            sr.Close();
            resp.Close();
        }
        catch (Exception ex)
        {
            //[Unsolved] Exception in StreamReader in C#: unhandled ObjectDisposedException, cannot access a closed stream
            //System.ObjectDisposedException
            respHtml = "";
        }
    }

    return respHtml;
}
```

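Then I tested it for a while.

I hoped that from now on this code:

```csharp
while (sr.Peek() > -1) //while not error or not reach end of stream
{
    respHtml = respHtml + sr.ReadLine();
}
```

would solve the earlier problem,

so that the indefinite hang would no longer occur.

9. As it turned out, with:

```csharp
while (sr.Peek() > -1) //while not error or not reach end of stream
{
    respHtml = respHtml + sr.ReadLine();
}
```

the returned HTML could not be obtained properly at all:

for the HTML returned by the Amazon URL, Peek returned -1 right after a few whitespace characters had been read, and the large amount of HTML that followed was never retrieved.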
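The truncation fits how `StreamReader.Peek` is documented: it returns -1 when there are no characters to be read or when the stream does not support seeking, so on a network stream -1 is not a reliable "end of response" signal. A loop that stops only when `ReadLine` returns null avoids that particular trap. The sketch below uses hypothetical names (`ReadLoopSketch`, `ReadAllLines`) and, unlike the code above, keeps a newline after each line; note that it does nothing about the hang itself, since `ReadLine` can still block.

```csharp
using System.IO;
using System.Text;

public static class ReadLoopSketch
{
    // Reads every line until ReadLine() returns null, which is how a
    // TextReader signals that the end of the input has been reached.
    // Each line is appended with a trailing '\n'.
    public static string ReadAllLines(TextReader reader)
    {
        StringBuilder sb = new StringBuilder();
        string curLine;
        while ((curLine = reader.ReadLine()) != null)
        {
            sb.Append(curLine).Append('\n');
        }
        return sb.ToString();
    }
}
```

Using a `StringBuilder` instead of repeated string concatenation also avoids copying the whole accumulated text on every line, which matters for large responses.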

10. Moreover, I just found that even with the Peek check above, the subsequent:

```csharp
respHtml = respHtml + sr.ReadLine();
```

can still hang. It just hung once.

11. I kept looking for a way to make StreamReader support a timeout.

Later I saw that:

HttpWebResponse.ReadTimeout – Timeouts not supported?

mentions:

HttpWebRequest.ReadWriteTimeout Property

So it seems that setting ReadWriteTimeout on the HttpWebRequest makes reads on its stream time out.

Let's try it in code.

Add ReadWriteTimeout support in _getUrlResponse:

```csharp
/* get url's response
 * */
public HttpWebResponse _getUrlResponse(string url,
                                Dictionary<string, string> headerDict,
                                Dictionary<string, string> postDict,
                                int timeout,
                                string postDataStr,
                                int readWriteTimeout = 30*1000)
{
    ......
    HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
    ......
    if (readWriteTimeout > 0)
    {
        req.ReadWriteTimeout = readWriteTimeout;
    }
```
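Stripped of everything else, the configuration step is just two properties on the request. The following is a minimal sketch with hypothetical names (`TimeoutConfigSketch`, `CreateRequest`); `Timeout` and `ReadWriteTimeout` are the real `HttpWebRequest` properties. Creating the request does not open a connection; that only happens on `GetResponse` or `GetRequestStream`.

```csharp
using System;
using System.Net;

public static class TimeoutConfigSketch
{
    // Creates a request with both time-outs set, in milliseconds.
    public static HttpWebRequest CreateRequest(string url, int timeoutMs, int readWriteTimeoutMs)
    {
        // On .NET 6 and later WebRequest.Create is marked obsolete; the pragma
        // only silences that warning and is not needed on .NET Framework.
#pragma warning disable SYSLIB0014
        HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
#pragma warning restore SYSLIB0014

        // Limit for GetResponse / GetRequestStream.
        req.Timeout = timeoutMs;
        // Limit for each Read/Write on the request and response streams.
        req.ReadWriteTimeout = readWriteTimeoutMs;
        return req;
    }
}
```

A call such as `TimeoutConfigSketch.CreateRequest(awsReqUrl, 30 * 1000, 30 * 1000)` would then be followed by the usual `GetResponse` and `GetResponseStream` calls.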

Then I tested this code again to see whether it solves

the indefinite hang.

At the same time, I dropped the Peek loop above and went back to the original ReadToEnd:

```csharp
respHtml = sr.ReadToEnd();

//while (!sr.EndOfStream)
//{
//    respHtml = respHtml + sr.ReadLine();
//}

//string curLine = "";
//while ((curLine = sr.ReadLine()) != null)
//{
//    respHtml = respHtml + curLine;
//}

//while (sr.Peek() > -1) //while not error or not reach end of stream
//{
//    respHtml = respHtml + sr.ReadLine();
//}
```

While debugging I found that the default value of HttpWebRequest's ReadWriteTimeout is 300000 ms = 300 * 1000 ms = 300 seconds = 5 minutes.

That is really long...

Here I changed it to 30 seconds = 30 * 1000 ms = 30000 ms.
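Because these values are plain integers in milliseconds, it is easy to slip a zero. One way to keep the unit conversion explicit is to derive the numbers from `TimeSpan`. This is only a sketch, and the names `TimeoutValues`, `DefaultReadWriteTimeoutMs`, and `ShortReadWriteTimeoutMs` are hypothetical.

```csharp
using System;

public static class TimeoutValues
{
    // 5 minutes expressed in milliseconds: 5 * 60 * 1000 = 300000.
    public static readonly int DefaultReadWriteTimeoutMs =
        (int)TimeSpan.FromMinutes(5).TotalMilliseconds;

    // 30 seconds expressed in milliseconds: 30 * 1000 = 30000.
    public static readonly int ShortReadWriteTimeoutMs =
        (int)TimeSpan.FromSeconds(30).TotalMilliseconds;
}
```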

Then let's see how it goes.

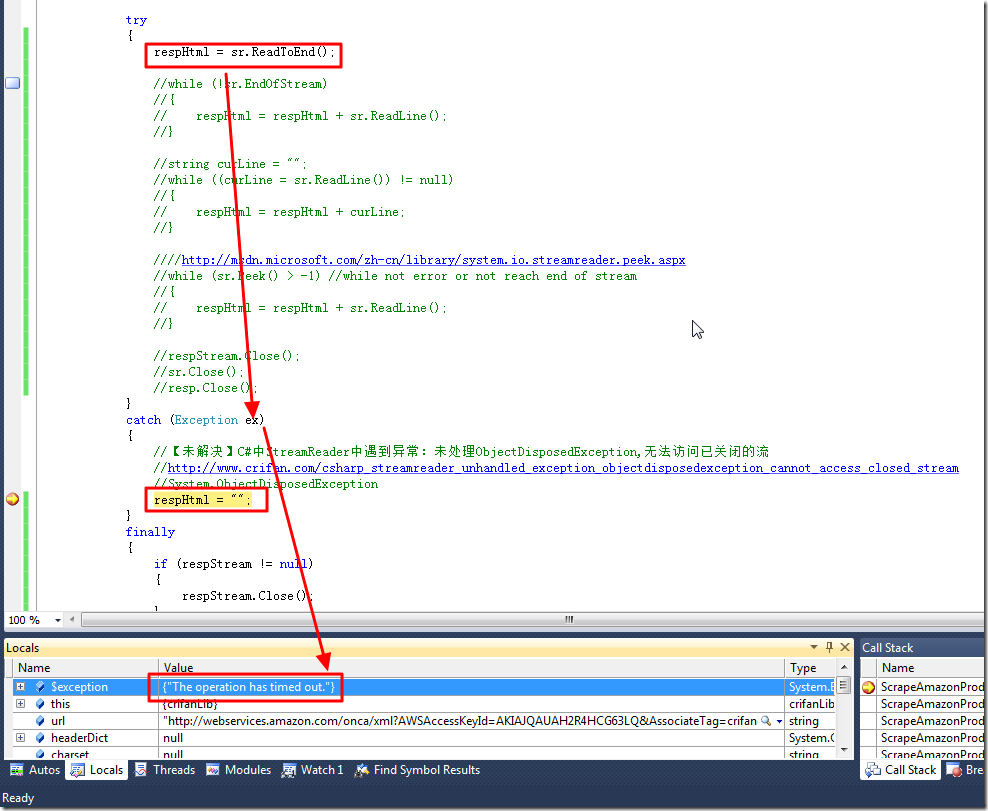

12. In a later debugging session, I found that it does indeed work.

When the code hung at:

```csharp
respHtml = sr.ReadToEnd();
```

it timed out after roughly 30 seconds, and execution jumped into the corresponding:

```csharp
try
{
    respHtml = sr.ReadToEnd();

    //while (!sr.EndOfStream)
    //{
    //    respHtml = respHtml + sr.ReadLine();
    //}

    //string curLine = "";
    //while ((curLine = sr.ReadLine()) != null)
    //{
    //    respHtml = respHtml + curLine;
    //}

    //while (sr.Peek() > -1) //while not error or not reach end of stream
    //{
    //    respHtml = respHtml + sr.ReadLine();
    //}

    //respStream.Close();
    //sr.Close();
    //resp.Close();
}
catch (Exception ex)
{
    //[Unsolved] Exception in StreamReader in C#: unhandled ObjectDisposedException, cannot access a closed stream
    //System.ObjectDisposedException
    respHtml = "";
}
```

specifically to this statement in it:

```csharp
respHtml = "";
```

So the desired effect is achieved:

When the code hangs in ReadToEnd, a timeout is now supported: after the configured time, a timeout exception is raised,

and the code can then carry on with its own logic.
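The post catches the broad `Exception` type. If it helps to see what actually failed, the read can be wrapped so that the caught exception is handed back to the caller. This is a sketch under assumptions: `SafeReadSketch` and `ReadAllOrEmpty` are hypothetical names, and the post does not say which exception type the read time-out produces, so `WebException` and `IOException` are listed here as likely candidates rather than as confirmed behavior.

```csharp
using System;
using System.IO;
using System.Net;

public static class SafeReadSketch
{
    // Returns the full text, or "" when the read fails, so that a retry loop
    // can treat "" as "try again". The exception, if any, is passed back in
    // failure for logging.
    public static string ReadAllOrEmpty(TextReader reader, out Exception failure)
    {
        failure = null;
        try
        {
            return reader.ReadToEnd();
        }
        catch (WebException ex)            // assumed candidate for a read time-out
        {
            failure = ex;
        }
        catch (IOException ex)             // assumed candidate for a broken connection
        {
            failure = ex;
        }
        catch (ObjectDisposedException ex) // stream or reader was already closed
        {
            failure = ex;
        }
        return "";
    }
}
```

Logging `failure.GetType()` for a few failed requests would show which of these cases really occurs in practice.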

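【Summary】

For now, it seems the desired effect has been achieved. That is:

For the problem:

reading the Stream obtained from GetResponseStream through a StreamReader, with ReadToEnd or ReadLine, sometimes hangs indefinitely;

the wish was:

for StreamReader's ReadToEnd or ReadLine to support a timeout,

so that:

the read does not hang indefinitely but is terminated once the timeout expires.

This way the code can fetch the HTML multiple times, get past a single failed fetch, and keep the program running normally.

The concrete implementation is:

Before sending the web request, set the ReadWriteTimeout property on the HttpWebRequest.

Its default value is 300000 ms = 300 seconds = 5 minutes.

Here I changed it to 30000 ms = 30 seconds.

After that, call GetResponse as usual, then call GetResponseStream to get the Stream,

and read the Stream through a StreamReader, using either ReadToEnd or ReadLine.

At this point, ReadToEnd or ReadLine is covered by what the official documentation:

HttpWebRequest.ReadWriteTimeout Property

describes:

> Specifically, the ReadWriteTimeout property controls the time-out for the Read method, which is used to read the stream returned by the GetResponseStream method, and for the Write method, which is used to write to the stream returned by the GetRequestStream method.

That is, reads on the stream returned by GetResponseStream now support a timeout.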
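Whether a particular stream honors read time-outs can be checked at run time through `Stream.CanTimeout`; on a stream that does not support them, reading `ReadTimeout` throws `InvalidOperationException`. The helper below is a small sketch with hypothetical names (`StreamTimeoutInfo`, `Describe`). I would expect the response stream of an `HttpWebRequest` to report the configured ReadWriteTimeout here, but that is an assumption and worth confirming in a debugger.

```csharp
using System;
using System.IO;

public static class StreamTimeoutInfo
{
    // Describes whether a stream supports read time-outs and, if it does,
    // what the current value is in milliseconds.
    public static string Describe(Stream stream)
    {
        if (!stream.CanTimeout)
        {
            // Accessing ReadTimeout on such a stream would throw.
            return "timeouts not supported";
        }
        return "ReadTimeout = " + stream.ReadTimeout + " ms";
    }
}
```

For example, `StreamTimeoutInfo.Describe(resp.GetResponseStream())` could be logged once right after the response arrives.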

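So when the code really does hang (an indefinite delay) at

ReadToEnd or ReadLine

the corresponding timeout exception is raised, and execution jumps to the

catch (Exception ex)

part of the code above.

This avoids the earlier problem of ReadToEnd or ReadLine hanging indefinitely.

In addition, I wrote getUrlRespHtml_multiTry: when one attempt to fetch the HTML fails, it tries a few more times, so the program can continue normally and get the HTML.

Finally, the complete code for the relevant parts is as follows: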

```csharp
//excerpt of class members; gUserAgent, curCookies, quoteParas and updateLocalCookies are defined elsewhere in crifanLib.cs
//needs: using System; using System.Collections.Generic; using System.ComponentModel; using System.IO; using System.Net; using System.Text;

//default values:
//getUrlResponse
private const Dictionary<string, string> defHeaderDict = null;
private const Dictionary<string, string> defPostDict = null;
private const int defTimeout = 30 * 1000;
private const string defPostDataStr = null;
private const int defReadWriteTimeout = 30 * 1000;
//getUrlRespHtml
private const string defCharset = null;
//getUrlRespHtml_multiTry
private const int defMaxTryNum = 5;
private const int defRetryFailSleepTime = 100; //sleep time in ms when retry fail for getUrlRespHtml

/* get url's response
 * */
public HttpWebResponse _getUrlResponse(string url,
                                Dictionary<string, string> headerDict = defHeaderDict,
                                Dictionary<string, string> postDict = defPostDict,
                                int timeout = defTimeout,
                                string postDataStr = defPostDataStr,
                                int readWriteTimeout = defReadWriteTimeout)
{
    //CookieCollection parsedCookies;

    HttpWebResponse resp = null;

    HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);

    req.AllowAutoRedirect = true;
    req.Accept = "*/*";

    //req.ContentType = "text/plain";

    //const string gAcceptLanguage = "en-US"; // zh-CN/en-US
    //req.Headers["Accept-Language"] = gAcceptLanguage;

    req.KeepAlive = true;

    req.UserAgent = gUserAgent;

    req.Headers["Accept-Encoding"] = "gzip, deflate";
    //decompress both encodings advertised in Accept-Encoding above
    req.AutomaticDecompression = DecompressionMethods.GZip | DecompressionMethods.Deflate;

    req.Proxy = null;

    if (timeout > 0)
    {
        req.Timeout = timeout;
    }

    if (readWriteTimeout > 0)
    {
        //default ReadWriteTimeout is 300000=300 seconds = 5 minutes !!!
        //too long, so here change to 30000 = 30 seconds
        //for support TimeOut for later StreamReader's ReadToEnd
        req.ReadWriteTimeout = readWriteTimeout;
    }

    if (curCookies != null)
    {
        req.CookieContainer = new CookieContainer();
        req.CookieContainer.PerDomainCapacity = 40; // following will exceed max default 20 cookie per domain
        req.CookieContainer.Add(curCookies);
    }

    if ((headerDict != null) && (headerDict.Count > 0))
    {
        foreach (string header in headerDict.Keys)
        {
            string headerValue = "";
            if (headerDict.TryGetValue(header, out headerValue))
            {
                // following are allow the caller overwrite the default header setting
                if (header.ToLower() == "referer")
                {
                    req.Referer = headerValue;
                }
                else if (header.ToLower() == "allowautoredirect")
                {
                    bool isAllow = false;
                    if (bool.TryParse(headerValue, out isAllow))
                    {
                        req.AllowAutoRedirect = isAllow;
                    }
                }
                else if (header.ToLower() == "accept")
                {
                    req.Accept = headerValue;
                }
                else if (header.ToLower() == "keepalive")
                {
                    bool isKeepAlive = false;
                    if (bool.TryParse(headerValue, out isKeepAlive))
                    {
                        req.KeepAlive = isKeepAlive;
                    }
                }
                else if (header.ToLower() == "accept-language")
                {
                    req.Headers["Accept-Language"] = headerValue;
                }
                else if (header.ToLower() == "useragent")
                {
                    req.UserAgent = headerValue;
                }
                else if (header.ToLower() == "content-type")
                {
                    req.ContentType = headerValue;
                }
                else
                {
                    req.Headers[header] = headerValue;
                }
            }
            else
            {
                break;
            }
        }
    }

    if (((postDict != null) && (postDict.Count > 0)) || (!string.IsNullOrEmpty(postDataStr)))
    {
        req.Method = "POST";
        if (req.ContentType == null)
        {
            req.ContentType = "application/x-www-form-urlencoded";
        }

        if ((postDict != null) && (postDict.Count > 0))
        {
            postDataStr = quoteParas(postDict);
        }

        //byte[] postBytes = Encoding.GetEncoding("utf-8").GetBytes(postData);
        byte[] postBytes = Encoding.UTF8.GetBytes(postDataStr);
        req.ContentLength = postBytes.Length;

        Stream postDataStream = req.GetRequestStream();
        postDataStream.Write(postBytes, 0, postBytes.Length);
        postDataStream.Close();
    }
    else
    {
        req.Method = "GET";
    }

    //may timeout, has fixed in:
    try
    {
        resp = (HttpWebResponse)req.GetResponse();
        updateLocalCookies(resp.Cookies, ref curCookies);
    }
    catch (WebException webEx)
    {
        if (webEx.Status == WebExceptionStatus.Timeout)
        {
            resp = null;
        }
    }

    return resp;
}

#if USE_GETURLRESPONSE_BW
private void getUrlResponse_bw(string url,
                                Dictionary<string, string> headerDict = defHeaderDict,
                                Dictionary<string, string> postDict = defPostDict,
                                int timeout = defTimeout,
                                string postDataStr = defPostDataStr,
                                int readWriteTimeout = defReadWriteTimeout)
{
    // Create a background thread
    BackgroundWorker bgwGetUrlResp = new BackgroundWorker();
    bgwGetUrlResp.DoWork += new DoWorkEventHandler(bgwGetUrlResp_DoWork);
    bgwGetUrlResp.RunWorkerCompleted += new RunWorkerCompletedEventHandler( bgwGetUrlResp_RunWorkerCompleted );

    //init
    bNotCompleted_resp = true;

    // run in another thread
    object paraObj = new object[] { url, headerDict, postDict, timeout, postDataStr, readWriteTimeout };
    bgwGetUrlResp.RunWorkerAsync(paraObj);
}

private void bgwGetUrlResp_DoWork(object sender, DoWorkEventArgs e)
{
    object[] paraObj = (object[])e.Argument;
    string url = (string)paraObj[0];
    Dictionary<string, string> headerDict = (Dictionary<string, string>)paraObj[1];
    Dictionary<string, string> postDict = (Dictionary<string, string>)paraObj[2];
    int timeout = (int)paraObj[3];
    string postDataStr = (string)paraObj[4];
    int readWriteTimeout = (int)paraObj[5];

    e.Result = _getUrlResponse(url, headerDict, postDict, timeout, postDataStr, readWriteTimeout);
}

//void m_bgWorker_ProgressChanged(object sender, ProgressChangedEventArgs e)
//{
//    bRespNotCompleted = true;
//}

private void bgwGetUrlResp_RunWorkerCompleted(object sender, RunWorkerCompletedEventArgs e)
{
    // The background process is complete. We need to inspect
    // our response to see if an error occurred, a cancel was
    // requested or if we completed successfully.

    // Check to see if an error occurred in the
    // background process.
    if (e.Error != null)
    {
        //MessageBox.Show(e.Error.Message);
        return;
    }

    // Check to see if the background process was cancelled.
    if (e.Cancelled)
    {
        //MessageBox.Show("Cancelled ...");
    }
    else
    {
        bNotCompleted_resp = false;

        // Everything completed normally.
        // process the response using e.Result
        //MessageBox.Show("Completed...");
        gCurResp = (HttpWebResponse)e.Result;
    }
}
#endif

/* get url's response
 * */
public HttpWebResponse getUrlResponse(string url,
                                Dictionary<string, string> headerDict = defHeaderDict,
                                Dictionary<string, string> postDict = defPostDict,
                                int timeout = defTimeout,
                                string postDataStr = defPostDataStr,
                                int readWriteTimeout = defReadWriteTimeout)
{
#if USE_GETURLRESPONSE_BW
    HttpWebResponse localCurResp = null;
    getUrlResponse_bw(url, headerDict, postDict, timeout, postDataStr, readWriteTimeout);
    while (bNotCompleted_resp)
    {
        System.Windows.Forms.Application.DoEvents();
    }
    localCurResp = gCurResp;

    //clear
    gCurResp = null;

    return localCurResp;
#else
    //pass readWriteTimeout through, otherwise the caller's value is silently ignored
    return _getUrlResponse(url, headerDict, postDict, timeout, postDataStr, readWriteTimeout);
#endif
}

// valid charset:"GB18030"/"UTF-8", invalid:"UTF8"
public string getUrlRespHtml(string url,
                                Dictionary<string, string> headerDict = defHeaderDict,
                                string charset = defCharset,
                                Dictionary<string, string> postDict = defPostDict,
                                int timeout = defTimeout,
                                string postDataStr = defPostDataStr,
                                int readWriteTimeout = defReadWriteTimeout)
{
    string respHtml = "";

    HttpWebResponse resp = getUrlResponse(url, headerDict, postDict, timeout, postDataStr, readWriteTimeout);

    //long realRespLen = resp.ContentLength;
    if (resp != null)
    {
        StreamReader sr;
        Stream respStream = resp.GetResponseStream();
        if (!string.IsNullOrEmpty(charset))
        {
            Encoding htmlEncoding = Encoding.GetEncoding(charset);
            sr = new StreamReader(respStream, htmlEncoding);
        }
        else
        {
            sr = new StreamReader(respStream);
        }

        try
        {
            respHtml = sr.ReadToEnd();

            //while (!sr.EndOfStream)
            //{
            //    respHtml = respHtml + sr.ReadLine();
            //}

            //string curLine = "";
            //while ((curLine = sr.ReadLine()) != null)
            //{
            //    respHtml = respHtml + curLine;
            //}

            //while (sr.Peek() > -1) //while not error or not reach end of stream
            //{
            //    respHtml = respHtml + sr.ReadLine();
            //}

            //respStream.Close();
            //sr.Close();
            //resp.Close();
        }
        catch (Exception ex)
        {
            //a read time-out (ReadWriteTimeout) also ends up here
            //[Unsolved] Exception in StreamReader in C#: unhandled ObjectDisposedException, cannot access a closed stream
            //System.ObjectDisposedException
            respHtml = "";
        }
        finally
        {
            if (respStream != null)
            {
                respStream.Close();
            }

            if (sr != null)
            {
                sr.Close();
            }

            if (resp != null)
            {
                resp.Close();
            }
        }
    }

    return respHtml;
}

public string getUrlRespHtml_multiTry
                            (string url,
                                Dictionary<string, string> headerDict = defHeaderDict,
                                string charset = defCharset,
                                Dictionary<string, string> postDict = defPostDict,
                                int timeout = defTimeout,
                                string postDataStr = defPostDataStr,
                                int readWriteTimeout = defReadWriteTimeout,
                                int maxTryNum = defMaxTryNum,
                                int retryFailSleepTime = defRetryFailSleepTime)
{
    string respHtml = "";

    for (int tryIdx = 0; tryIdx < maxTryNum; tryIdx++)
    {
        respHtml = getUrlRespHtml(url, headerDict, charset, postDict, timeout, postDataStr, readWriteTimeout);
        if (!string.IsNullOrEmpty(respHtml))
        {
            break;
        }
        else
        {
            //something wrong
            //maybe network is not stable
            //so wait some time, then re-do it
            System.Threading.Thread.Sleep(retryFailSleepTime);
        }
    }

    return respHtml;
}
```

In addition, the full code is available here:

http://code.google.com/p/crifanlib/source/browse/trunk/csharp/crifanLib.cs

When reposting, please credit: 在路上 » 【Solved】 In C#, calling ReadLine or ReadToEnd through a StreamReader on the Stream obtained from GetResponseStream hangs indefinitely + adding Timeout support to StreamReader