BCH code

From Wikipedia, the free encyclopedia

In coding theory, the BCH codes form a class of parameterised error-correcting codes which have been the subject of much academic attention in the last fifty years. BCH codes were invented in 1959 by Hocquenghem, and independently in 1960 by Bose and Ray-Chaudhuri.[1] The acronym BCH comprises the initials of these inventors’ names.

The principal advantage of BCH codes is the ease with which they can be decoded, via an elegant algebraic method known as syndrome decoding. This allows very simple electronic hardware to perform the task, obviating the need for a computer, and meaning that a decoding device may be made small and low-powered. As a class of codes, they are also highly flexible, allowing control over block length and acceptable error thresholds, meaning that a custom code can be designed to a given specification (subject to mathematical constraints).

In technical terms a BCH code is a multilevel, cyclic, error-correcting, variable-length digital code used to correct multiple random error patterns. BCH codes may also be used with multilevel phase-shift keying whenever the number of levels is a prime number or a power of a prime number. A BCH code in 11 levels has been used to represent the 10 decimal digits plus a sign digit.[2]


Construction

A BCH code is a polynomial code over a finite field with a particularly chosen generator polynomial. It is also a cyclic code.

Simplified BCH codes

For ease of exposition, we first describe a special class of BCH codes. General BCH codes are described in the next section.

Definition. Fix a finite field GF(q), where q is a prime power. Also fix positive integers m, n, and d such that $n = q^m - 1$ and $2 \le d \le n$. We will construct a polynomial code over GF(q) with code length n, whose minimum Hamming distance is at least d. What remains to be specified is the generator polynomial of this code.

Let α be a primitive nth root of unity in $GF(q^m)$. For all i, let $m_i(x)$ be the minimal polynomial of $\alpha^i$ with coefficients in GF(q). The generator polynomial of the BCH code is defined as the least common multiple $g(x) = \operatorname{lcm}(m_1(x), \ldots, m_{d-1}(x))$.

Example

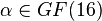

Let q = 2 and m = 4 (therefore n = 15). We will consider different values of d. There is a primitive root $\alpha \in GF(16)$ satisfying

- $\alpha^4 + \alpha + 1 = 0$ (1);

its minimal polynomial over GF(2) is $m_1(x) = x^4 + x + 1$. Note that in any field of characteristic 2, such as GF(16), the equation $(a + b)^2 = a^2 + 2ab + b^2 = a^2 + b^2$ holds, and therefore $m_1(\alpha^2) = m_1(\alpha)^2 = 0$. Thus $\alpha^2$ is a root of $m_1(x)$, and therefore

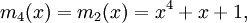

- $m_2(x) = m_1(x) = x^4 + x + 1$.

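To compute $m_3(x)$, notice that, by repeated application of (1), we have the following linear relations:

- $1 = 0\alpha^3 + 0\alpha^2 + 0\alpha + 1$

- $\alpha^3 = 1\alpha^3 + 0\alpha^2 + 0\alpha + 0$

- $\alpha^6 = 1\alpha^3 + 1\alpha^2 + 0\alpha + 0$

- $\alpha^9 = 1\alpha^3 + 0\alpha^2 + 1\alpha + 0$

- $\alpha^{12} = 1\alpha^3 + 1\alpha^2 + 1\alpha + 1$

Five right-hand sides of length four must be linearly dependent, and indeed we find the linear dependency $\alpha^{12} + \alpha^9 + \alpha^6 + \alpha^3 + 1 = 0$. Since there is no dependency of smaller degree, the minimal polynomial of $\alpha^3$ is $m_3(x) = x^4 + x^3 + x^2 + x + 1$. Continuing in a similar manner, we find

- $m_4(x) = m_2(x) = m_1(x) = x^4 + x + 1$

- $m_6(x) = m_3(x) = x^4 + x^3 + x^2 + x + 1$

- $m_5(x) = x^2 + x + 1$

- $m_7(x) = x^4 + x^3 + 1$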
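The minimal polynomials in this example can be checked numerically. The following is a minimal sketch, not part of the original text: it builds GF(16) from relation (1) and multiplies out the factors (x + α^j) over the conjugates α^i, α^2i, α^4i, ... of α^i. The names `EXP`, `LOG`, `gf_mul` and `min_poly` are illustrative choices, and field elements are assumed to be stored as 4-bit integers.

```python
# GF(16) built from alpha^4 = alpha + 1; elements are 4-bit integers
# (bit k is the coefficient of alpha^k). EXP[i] holds alpha^i.
EXP = [1]
for _ in range(14):
    v = EXP[-1] << 1
    EXP.append(v ^ 0b10011 if v & 0b10000 else v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    """Multiply two GF(16) elements via discrete logarithms."""
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 15]

def min_poly(i):
    """Minimal polynomial of alpha^i over GF(2), coefficients lowest degree first."""
    # Conjugates of alpha^i are alpha^(i * 2^k); collect the exponents mod 15.
    coset, j = [], i % 15
    while j not in coset:
        coset.append(j)
        j = (2 * j) % 15
    poly = [1]
    for j in coset:
        root = EXP[j]
        # Multiply poly by (x + root); minus equals plus in characteristic 2.
        nxt = [0] * (len(poly) + 1)
        for k, c in enumerate(poly):
            nxt[k + 1] ^= c
            nxt[k] ^= gf_mul(c, root)
        poly = nxt
    return poly

for i in (1, 3, 5, 7):
    print(i, min_poly(i))
```

All coefficients come out as 0 or 1, as expected for a polynomial over GF(2); for instance `min_poly(3)` gives `[1, 1, 1, 1, 1]`, matching the linear dependency found above.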

The BCH code with d = 2, 3 has generator polynomial

- $g(x) = m_1(x) = x^4 + x + 1$.

It has minimal Hamming distance at least 3 and corrects up to 1 error. Since the generator polynomial is of degree 4, this code has 11 data bits and 4 checksum bits.

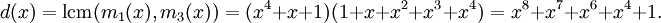

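The BCH code with d = 4, 5 has generator polynomial

- $g(x) = \operatorname{lcm}(m_1(x), m_3(x)) = (x^4 + x + 1)(x^4 + x^3 + x^2 + x + 1) = x^8 + x^7 + x^6 + x^4 + 1$.

It has minimal Hamming distance at least 5 and corrects up to 2 errors. Since the generator polynomial is of degree 8, this code has 7 data bits and 8 checksum bits.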

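The BCH code with d = 6, 7 has generator polynomial

- $g(x) = \operatorname{lcm}(m_1(x), m_3(x), m_5(x)) = (x^4 + x + 1)(x^4 + x^3 + x^2 + x + 1)(x^2 + x + 1) = x^{10} + x^8 + x^5 + x^4 + x^2 + x + 1$.

It has minimal Hamming distance at least 7 and corrects up to 3 errors. Since the generator polynomial is of degree 10, this code has 5 data bits and 10 checksum bits.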

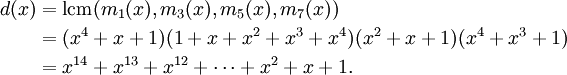

The BCH codes with d = 8 and higher have generator polynomial

- $g(x) = \operatorname{lcm}(m_1(x), m_3(x), m_5(x), m_7(x)) = x^{14} + x^{13} + x^{12} + \cdots + x^2 + x + 1$.

This code has minimal Hamming distance 15 and corrects 7 errors. It has 1 data bit and 14 checksum bits. In fact, this code only has two codewords: 000000000000000 and 111111111111111.
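The generator polynomials of this example can be reproduced with a few lines of carry-less arithmetic. This is a sketch under stated assumptions: polynomials over GF(2) are stored as Python integers (bit j is the coefficient of x^j), the four minimal polynomials are hard-coded from the example, and the helper names `clmul`, `MIN_POLY` and `generator` are illustrative.

```python
def clmul(a, b):
    """Multiply two GF(2) polynomials stored as bitmasks (carry-less product)."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r

# Minimal polynomials from the example, keyed by every exponent in their coset.
M1, M3, M5, M7 = 0b10011, 0b11111, 0b111, 0b11001
MIN_POLY = {}
for coset, poly in (((1, 2, 4, 8), M1), ((3, 6, 9, 12), M3),
                    ((5, 10), M5), ((7, 11, 13, 14), M7)):
    for i in coset:
        MIN_POLY[i] = poly

def generator(d):
    """g(x) = lcm(m_1, ..., m_{d-1}): product of the distinct minimal polynomials."""
    g = 1
    for poly in dict.fromkeys(MIN_POLY[i] for i in range(1, d)):
        g = clmul(g, poly)
    return g

for d in (3, 5, 7, 8):
    g = generator(d)
    degree = g.bit_length() - 1
    print(f"d={d}: g={g:b}, data bits={15 - degree}, checksum bits={degree}")
```

Because distinct minimal polynomials are coprime, their least common multiple is simply their product, which is why deduplicating the factors is enough here.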

General BCH codes

General BCH codes differ from the simplified case discussed above in two respects. First, one replaces the requirement $n = q^m - 1$ by a more general condition. Second, the consecutive roots of the generator polynomial may run over $\alpha^c, \ldots, \alpha^{c+d-2}$ instead of $\alpha, \ldots, \alpha^{d-1}$.

Definition. Fix a finite field GF(q), where q is a prime power. Choose positive integers m, n, d, c such that $2 \le d \le n$, gcd(n, q) = 1, and m is the multiplicative order of q modulo n.

As before, let α be a primitive nth root of unity in $GF(q^m)$, and let $m_i(x)$ be the minimal polynomial over GF(q) of $\alpha^i$ for all i. The generator polynomial of the BCH code is defined as the least common multiple $g(x) = \operatorname{lcm}(m_c(x), \ldots, m_{c+d-2}(x))$.

Note: if $n = q^m - 1$ as in the simplified definition, then gcd(n, q) is automatically 1, and the order of q modulo n is automatically m. Therefore, the simplified definition is indeed a special case of the general one.
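The parameters in the general definition can be explored without any field arithmetic. The sketch below is illustrative only (the function names are not from the original text): it computes the multiplicative order of q modulo n, and the degree of the generator polynomial as the number of distinct exponents j for which α^j is a root, obtained by closing the set {c, ..., c+d−2} under multiplication by q modulo n.

```python
from math import gcd

def multiplicative_order(q, n):
    """Smallest m >= 1 with q^m = 1 (mod n); requires gcd(n, q) = 1."""
    if gcd(q, n) != 1:
        raise ValueError("q and n must be coprime")
    if n == 1:
        return 1
    m, power = 1, q % n
    while power != 1:
        power = (power * q) % n
        m += 1
    return m

def generator_degree(q, n, d, c=1):
    """Degree of g(x) = lcm(m_c, ..., m_{c+d-2}), counted via cyclotomic cosets."""
    roots = set()
    for i in range(c, c + d - 1):
        j = i % n
        # Add the whole coset {j, jq, jq^2, ...} mod n unless already present.
        while j not in roots:
            roots.add(j)
            j = (j * q) % n
    return len(roots)

print(multiplicative_order(2, 15))      # primitive length 15 = 2^4 - 1
print(multiplicative_order(2, 21))      # a non-primitive length
print(15 - generator_degree(2, 15, 5))  # data symbols of the d = 5 example
```

For n = 15 and d = 5 this gives a generator of degree 8, in agreement with the example above.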

Properties

Property. The generator polynomial of a BCH code has degree at most (d − 1)m. Moreover, if q = 2 and c = 1, the generator polynomial has degree at most dm/2.

Proof: each minimal polynomial $m_i(x)$ has degree at most m. Therefore, the least common multiple of d − 1 of them has degree at most (d − 1)m. Moreover, if q = 2, then $m_i(x) = m_{2i}(x)$ for all i. Therefore, g(x) is the least common multiple of at most d/2 minimal polynomials $m_i(x)$ for odd indices i, each of degree at most m.

Property. A BCH code has minimal Hamming distance at least d.

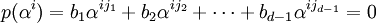

Proof: We only give the proof in the simplified case; the general case is similar. Suppose that p(x) is a code word with fewer than d non-zero terms. Then

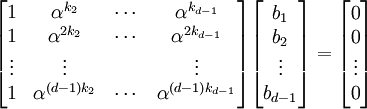

Recall that  are roots of g(x), hence of p(x). This implies that

are roots of g(x), hence of p(x). This implies that  satisfy the following equations, for

satisfy the following equations, for  :

:

.

.

Dividing this by  , and writing kl = jl − j1, we get

, and writing kl = jl − j1, we get

for all i, or equivalently

This matrix is seen to be a Vandermonde matrix, and its determinant is

,

,

which is non-zero. It therefore follows that  , hence p(x) = 0.

, hence p(x) = 0.
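For the small binary codes of length 15 discussed in the example, the distance bound can also be confirmed by brute force. The sketch below is an illustration only: it assumes the generator polynomials from the example, stored as bitmasks, and enumerates every non-zero code word m(x)g(x) to find the smallest Hamming weight. The names `clmul` and `min_distance` are hypothetical helpers.

```python
def clmul(a, b):
    """Multiply two GF(2) polynomials stored as bitmasks (carry-less product)."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r

def min_distance(g, n=15):
    """Minimum weight over all non-zero code words m(x) * g(x) with deg m < k."""
    k = n - (g.bit_length() - 1)
    return min(bin(clmul(msg, g)).count("1") for msg in range(1, 2 ** k))

# Generator polynomials for designed distances 3, 5, 7 and 15.
GENERATORS = {3: 0b10011, 5: 0b111010001, 7: 0b10100110111, 15: 0x7FFF}

for d, g in GENERATORS.items():
    print(f"designed distance {d}: actual minimum distance {min_distance(g)}")
```

Since the code is linear, its minimum distance equals the minimum weight of a non-zero code word, so the enumeration is exhaustive. In these four cases the true minimum distance equals the designed distance, although in general the property only guarantees a lower bound.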

Property. A BCH code is cyclic.

Proof: A polynomial code of length n is cyclic if and only if its generator polynomial divides $x^n - 1$. Since g(x) is the minimal polynomial with roots $\alpha^c, \ldots, \alpha^{c+d-2}$, it suffices to check that each of $\alpha^c, \ldots, \alpha^{c+d-2}$ is a root of $x^n - 1$. This follows immediately from the fact that α is, by definition, an nth root of unity.
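The divisibility criterion can be tried out on the length-15 example. The following sketch assumes the generator $x^8 + x^7 + x^6 + x^4 + 1$ from that example and bitmask polynomials over GF(2); `poly_mod`, `clmul` and `cyclic_shift` are illustrative helper names. It checks that g(x) divides x^15 + 1 (which equals x^15 − 1 over GF(2)) and that every cyclic shift of a code word is again a multiple of g(x).

```python
def clmul(a, b):
    """Multiply two GF(2) polynomials stored as bitmasks (carry-less product)."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r

def poly_mod(a, b):
    """Remainder of a(x) divided by b(x) over GF(2)."""
    db = b.bit_length()
    while a.bit_length() >= db:
        a ^= b << (a.bit_length() - db)
    return a

def cyclic_shift(c, n=15):
    """Multiply c(x) by x modulo x^n - 1, i.e. rotate the n coefficients by one."""
    return ((c << 1) & ((1 << n) - 1)) | (c >> (n - 1))

G = 0b111010001                      # x^8 + x^7 + x^6 + x^4 + 1
print(poly_mod((1 << 15) | 1, G))    # remainder of x^15 + 1 by g(x)

closed = all(poly_mod(cyclic_shift(clmul(msg, G)), G) == 0 for msg in range(1, 128))
print(closed)
```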

Special cases

- A BCH code with c = 1 is called a narrow-sense BCH code.

- A BCH code with $n = q^m - 1$ is called primitive.

- A narrow-sense BCH code with n = q − 1 is called a Reed-Solomon code.

Therefore, the "simplified" BCH codes we considered above were just the primitive narrow-sense codes.

Decoding

BCH decoding is split into the following four steps:

- Calculate the 2t syndrome values for the received vector R.

- Calculate the error locator polynomial.

- Calculate the roots of this polynomial to get the error locations.

- If the code is a non-binary BCH code, calculate the error values at these error locations.

These steps are illustrated below for a binary code whose generator polynomial has α and α^3 among its roots. Suppose we receive a vector r (the polynomial R(x)), where c (the polynomial C(x)) is the transmitted code word.

If there is no error, then $R(\alpha) = R(\alpha^3) = 0$.

If there is one error, i.e. $r = c + e_i$, where $e_i$ represents the ith standard basis vector, then

- $S_1 = R(\alpha) = C(\alpha) + \alpha^i = \alpha^i$

- $S_3 = R(\alpha^3) = C(\alpha^3) + (\alpha^3)^i = (\alpha^i)^3 = S_1^3$

so we can recognize one error. Flipping the bit at the position given by the exponent i of α corrects that error.

If there are two errors,

- $r = c + e_i + e_k$

then

- $S_1 = R(\alpha) = C(\alpha) + \alpha^i + \alpha^k = \alpha^i + \alpha^k$

- $S_3 = R(\alpha^3) = C(\alpha^3) + (\alpha^3)^i + (\alpha^3)^k = (\alpha^3)^i + (\alpha^3)^k$

which is not the same as $S_1^3$, so we can recognize two errors. Further algebra can aid us in correcting these two errors.
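The syndrome test described above can be tried on the (15, 7) code from the earlier example. This is a minimal sketch under stated assumptions: GF(16) is built from α^4 = α + 1, words are stored as 15-bit integers with bit j as the coefficient of x^j, and the message, the error positions and all helper names are arbitrary illustrative choices.

```python
# GF(16) built from alpha^4 = alpha + 1; EXP[i] holds alpha^i as a 4-bit integer.
EXP = [1]
for _ in range(14):
    v = EXP[-1] << 1
    EXP.append(v ^ 0b10011 if v & 0b10000 else v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    """Multiply two GF(16) elements via discrete logarithms."""
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 15]

def clmul(a, b):
    """Multiply two GF(2) polynomials stored as bitmasks."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r

def syndrome(r, k):
    """Evaluate R(alpha^k), where bit j of r is the coefficient of x^j."""
    s = 0
    for j in range(15):
        if (r >> j) & 1:
            s ^= EXP[(k * j) % 15]
    return s

def cube(a):
    return gf_mul(a, gf_mul(a, a))

c = clmul(0b1011001, 0b111010001)      # a code word: message times g(x)
one_error = c ^ (1 << 6)               # error in position 6
two_errors = c ^ (1 << 2) ^ (1 << 9)   # errors in positions 2 and 9

for name, r in (("none", c), ("one", one_error), ("two", two_errors)):
    s1, s3 = syndrome(r, 1), syndrome(r, 3)
    print(name, s1, s3, s3 == cube(s1))
```

With no error both syndromes vanish; with one error S3 equals S1 cubed and S1 is α^6; with two errors the equality fails, which is the test described in the text.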

Original source (first two paragraphs) from Federal Standard 1037C

The above text has been taken from: http://bch-code.foosquare.com/

BCH decoding algorithms

Popular decoding algorithms are:

- Peterson-Gorenstein-Zierler algorithm

- Berlekamp-Massey algorithm

Peterson-Gorenstein-Zierler algorithm

Assumptions

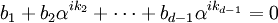

Peterson’s algorithm, is the step 2, of the generalized BCH decoding procedure. We use Peterson’s algorithm, to calculate the error locator polynomial coefficients  of a polynomial

of a polynomial

Now the procedure of the Peterson Gorenstein Zierler algorithm, for a given (n,k,dmin) BCH code designed to correct ![[t=frac{d_{min}-1}{2}]](https://upload.wikimedia.org/math/9/6/8/968c0f326ec80d247529025b3519fa2a.png) errors, is

errors, is

[edit] Algorithm

- First generate the Matrix of 2t syndromes,

- Next generate the

matrix with the elements, syndrome values,

matrix with the elements, syndrome values,

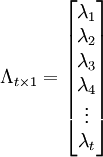

- Generate a ctx1 matrix with, elements,

- Let Λ denote the unknown polynomial coefficients, which are given,using

- Form the matrix equation

- If the determinant of matrix

exists, then we can actually, find an inverse of this matrix, and solve for the values of unknown Λ values.

exists, then we can actually, find an inverse of this matrix, and solve for the values of unknown Λ values.

- If

, then follow

, then follow

if <span class="texhtml"><em>t</em> = 0</span>

then

declare an empty error locator polynomial

stop Peterson procedure.

end

set <img class="tex" alt="t leftarrow t -1" src="http://upload.wikimedia.org/math/0/3/5/035d13347fad3d7b0014c5da2503aaf7.png" />

continue from the beginning of Peterson's decoding- After you have values of Λ you have with you the error locator polynomial.

- Stop Peterson procedure.
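The procedure above can be sketched for binary codes of length 15 as follows. This is an illustrative sketch, not a reference implementation: it assumes GF(16) built from α^4 = α + 1, uses Gaussian elimination in place of an explicit matrix inverse, and relies on the fact that −C = C in characteristic 2. The names `solve`, `pgz` and `syndromes` are hypothetical. Syndromes are computed directly from the error positions, since a code word contributes zero to every syndrome.

```python
# GF(16) built from alpha^4 = alpha + 1; EXP[i] holds alpha^i as a 4-bit integer.
EXP = [1]
for _ in range(14):
    v = EXP[-1] << 1
    EXP.append(v ^ 0b10011 if v & 0b10000 else v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 15]

def gf_inv(a):
    return EXP[(-LOG[a]) % 15]

def solve(A, b):
    """Solve A x = b over GF(16) by Gaussian elimination; None if A is singular."""
    v = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(v):
        pivot = next((r for r in range(col, v) if M[r][col]), None)
        if pivot is None:
            return None
        M[col], M[pivot] = M[pivot], M[col]
        inv = gf_inv(M[col][col])
        M[col] = [gf_mul(x, inv) for x in M[col]]
        for r in range(v):
            if r != col and M[r][col]:
                f = M[r][col]
                M[r] = [x ^ gf_mul(f, y) for x, y in zip(M[r], M[col])]
    return [M[r][v] for r in range(v)]

def pgz(s, t):
    """Return [1, lambda_1, ..., lambda_v]; s[k] is the syndrome S_k (s[0] unused)."""
    for v in range(t, 0, -1):
        S = [[s[i + j + 1] for j in range(v)] for i in range(v)]
        C = [s[v + i + 1] for i in range(v)]
        sol = solve(S, C)            # [lambda_v, ..., lambda_1]; -C = C here
        if sol is not None:
            return [1] + sol[::-1]
    return [1]                       # empty locator: no errors detected

def syndromes(error_positions, t):
    """S_1..S_2t of a binary error pattern; index 0 is unused."""
    s = [0] * (2 * t + 1)
    for k in range(1, 2 * t + 1):
        for p in error_positions:
            s[k] ^= EXP[(k * p) % 15]
    return s

print(pgz(syndromes([2, 9], 2), 2))   # two errors: a degree-2 locator
print(pgz(syndromes([6], 2), 2))      # one error: 2x2 matrix is singular, t drops to 1
print(pgz(syndromes([], 2), 2))       # no errors: empty locator
```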

Factoring error locator polynomial

Now that you have the polynomial Λ(x), you can find its roots, in the form $\Lambda(x) = (1 - \alpha^{i_1} x)(1 - \alpha^{i_2} x) \cdots (1 - \alpha^{i_v} x)$, using the Chien search algorithm. The exponents $i_1, \ldots, i_v$ of the primitive element α give the positions where errors occur in the received word; hence the name ‘error locator’ polynomial.
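The root search can be sketched as a plain exhaustive evaluation over GF(16). This is written in the spirit of the Chien search rather than as a faithful implementation: a hardware Chien search updates each term incrementally instead of re-evaluating the polynomial, and the names below are illustrative. The locator is assumed to be given as a coefficient list `[1, lambda_1, ..., lambda_v]`, and position i is reported when Λ(α^(−i)) = 0.

```python
# GF(16) built from alpha^4 = alpha + 1; EXP[i] holds alpha^i as a 4-bit integer.
EXP = [1]
for _ in range(14):
    v = EXP[-1] << 1
    EXP.append(v ^ 0b10011 if v & 0b10000 else v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 15]

def chien_search(lam):
    """Error positions i such that alpha^(-i) is a root of the locator polynomial."""
    positions = []
    for i in range(15):
        x = EXP[(-i) % 15]
        acc, x_power = 0, 1
        for coef in lam:             # evaluate sum of coef * x^k term by term
            acc ^= gf_mul(coef, x_power)
            x_power = gf_mul(x_power, x)
        if acc == 0:
            positions.append(i)
    return positions

print(chien_search([1, 14, 14]))   # locator for errors at positions 2 and 9
print(chien_search([1, 12]))       # locator for a single error at position 6
```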

Correcting errors

For binary BCH codes, the received vector can be corrected directly: flip the bits of the received word at the positions given by the exponents of the primitive element in the factors of the error locator polynomial. The result is the corrected code word.

We may also use the Berlekamp-Massey algorithm to determine the error locator polynomial, and hence solve the BCH decoding problem.
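Putting the steps together, a complete decoder for the binary (15, 7) code with t = 2 fits in a short sketch. The assumptions are the same as in the example: GF(16) from α^4 = α + 1 and generator $x^8 + x^7 + x^6 + x^4 + 1$. The 2×2 Peterson system is solved in closed form with Cramer's rule, which is a simplification chosen here for brevity, and all names are illustrative. Words with more than two errors are outside what this sketch handles.

```python
# GF(16) built from alpha^4 = alpha + 1; EXP[i] holds alpha^i as a 4-bit integer.
EXP = [1]
for _ in range(14):
    v = EXP[-1] << 1
    EXP.append(v ^ 0b10011 if v & 0b10000 else v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 15]

def gf_inv(a):
    return EXP[(-LOG[a]) % 15]

def clmul(a, b):
    """Multiply two GF(2) polynomials stored as bitmasks."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        a <<= 1
        b >>= 1
    return r

def syndrome(r, k):
    """Evaluate R(alpha^k), where bit j of r is the coefficient of x^j."""
    s = 0
    for j in range(15):
        if (r >> j) & 1:
            s ^= EXP[(k * j) % 15]
    return s

def decode(r):
    """Correct up to two bit errors in a received 15-bit word."""
    s1, s2, s3, s4 = (syndrome(r, k) for k in (1, 2, 3, 4))
    det = gf_mul(s1, s3) ^ gf_mul(s2, s2)        # determinant of the 2x2 matrix
    if det:                                       # two errors: Cramer's rule
        inv = gf_inv(det)
        lam = [1,
               gf_mul(gf_mul(s1, s4) ^ gf_mul(s2, s3), inv),   # lambda_1
               gf_mul(gf_mul(s3, s3) ^ gf_mul(s2, s4), inv)]   # lambda_2
    elif s1:                                      # one error: 1x1 system
        lam = [1, gf_mul(s2, gf_inv(s1))]
    else:                                         # all syndromes zero
        return r
    for i in range(15):                           # root search, then bit flips
        x = EXP[(-i) % 15]
        acc, x_power = 0, 1
        for coef in lam:
            acc ^= gf_mul(coef, x_power)
            x_power = gf_mul(x_power, x)
        if acc == 0:
            r ^= 1 << i
    return r

codeword = clmul(0b1011001, 0b111010001)
received = codeword ^ (1 << 2) ^ (1 << 9)
print(decode(received) == codeword)
```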

Simulation results

The simulation results for an AWGN BPSK system using a (63,36,5) BCH code are shown in this figure. A coding gain of almost 2 dB is observed at a bit error rate of $10^{-3}$.

Citations

- Reed, Irving S. Error-Control Coding for Data Networks. Kluwer Academic Publishers. ISBN 0-7923-8528-4. Page 189.

- Federal Standard 1037C, 1996.

References

- S. Lin and D. Costello. Error Control Coding: Fundamentals and Applications. Prentice-Hall, Englewood Cliffs, NJ, 2004.

- Galois Field Calculator: http://www.geocities.com/myopic_stargazer/gf_calc.zip

- W.J. Gilbert and W.K. Nicholson. Modern Algebra with Applications, 2nd edition. Wiley, 2004.

- R. Lidl and G. Pilz. Applied Abstract Algebra, 2nd edition. Wiley, 1999.