While working on:

[Record] Crawling cbeebies.com with Python's Scrapy

I continued by following this reference to see how the Scrapy shell behaves:

Scrapy shell (Scrapy 1.0.5 documentation)

➜ cbeebies scrapy shell "http://global.cbeebies.com/shows/"

2018-01-09 22:13:03 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: cbeebies)

2018-01-09 22:13:03 [scrapy.utils.log] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'cbeebies.spiders', 'ROBOTSTXT_OBEY': True, 'DUPEFILTER_CLASS': 'scrapy.dupefilters.BaseDupeFilter', 'SPIDER_MODULES': ['cbeebies.spiders'], 'BOT_NAME': 'cbeebies', 'LOGSTATS_INTERVAL': 0}

2018-01-09 22:13:03 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.memusage.MemoryUsage',

 'scrapy.extensions.telnet.TelnetConsole',

 'scrapy.extensions.corestats.CoreStats']

2018-01-09 22:13:03 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

 'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

 'scrapy.downloadermiddlewares.retry.RetryMiddleware',

 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

 'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-01-09 22:13:03 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

 'scrapy.spidermiddlewares.referer.RefererMiddleware',

 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

 'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-01-09 22:13:03 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-01-09 22:13:03 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-01-09 22:13:03 [scrapy.core.engine] INFO: Spider opened

2018-01-09 22:13:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/robots.txt> (referer: None)

2018-01-09 22:13:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/shows/> (referer: None)

[s] Available Scrapy objects:

[s] scrapy scrapy module (contains scrapy.Request, scrapy.Selector, etc)

[s] crawler <scrapy.crawler.Crawler object at 0x104ce3ad0>

[s] item {}

[s] request <GET http://global.cbeebies.com/shows/>

[s] response <200 http://global.cbeebies.com/shows/>

[s] settings <scrapy.settings.Settings object at 0x104ce3a50>

[s] spider <CbeebiesSpider 'Cbeebies' at 0x104fb3810>

[s] Useful shortcuts:

[s] fetch(url[, redirect=True]) Fetch URL and update local objects (by default, redirects are followed)

[s] fetch(req) Fetch a scrapy.Request and update local objects

[s] shelp() Shell help (print this help)

[s] view(response) View response in a browser

>>>

Then try it out:

>>> response.body

'<!DOCTYPE html>\n<!--[if lt IE 7]><html class="no-js lteie9 lteie8 ie6 oldie children-shows lang-en-gb" lang="en-gb"><![endif]--><!--[if IE 7]><html class="no-js lteie9 lteie8 ie7 oldie children-shows lang-en-gb" lang="en-gb"><![endif]--><!--[if IE 8]><html class="no-js lteie9 lteie8 ie8 oldie children-shows lang-e

…

t type="text/javascript">\n\t\t\t\t(function (d, t) {\n\t\t\t\t var bh = d.createElement(t), s = d.getElementsByTagName(t)[0];\n\t\t\t\t bh.type = \'text/javascript\';\n\t\t\t\t bh.src = \'https://www.bugherd.com/sidebarv2.js?apikey=jx9eolgv4a9ijztmgfxq0q\';\n\t\t\t\t s.parentNode.insertBefore(bh, s);\n\t\t\t\t })(document, \'script\');\n\t\t\t\t</script></body></html>\n'

>>> response.headers

{'X-Powered-By': ['PHP/5.6.32'], 'Expires': ['Tue, 09 Jan 2018 14:13:01 GMT'], 'Vary': ['Accept-Encoding'], 'Server': ['Apache/2.4.27 (Amazon) PHP/5.6.32'], 'Last-Modified': ['Tue, 09 Jan 2018 12:35:18 GMT'], 'Pragma': ['no-cache'], 'Date': ['Tue, 09 Jan 2018 14:13:01 GMT'], 'Content-Type': ['text/html;charset=utf-8']}

>>> response.xpath("//title")

[<Selector xpath='//title' data=u'<title> | CBeebies Global</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>, <Selector xpath='//title' data=u'<title>show-icon-template</title>'>]

>>> response.xpath("//title").text()

Traceback (most recent call last):

  File "<console>", line 1, in <module>

AttributeError: 'SelectorList' object has no attribute 'text'

>>> response.xpath("//title").extract()

[u'<title> | CBeebies Global</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>', u'<title>show-icon-template</title>']

>>> response.xpath("//title/text()").extract()

[u' | CBeebies Global', u'show-icon-template', u'show-icon-template', u'show-icon-template', u'show-icon-template', u'show-icon-template', u'show-icon-template', u'show-icon-template', u'show-icon-template']

>>>

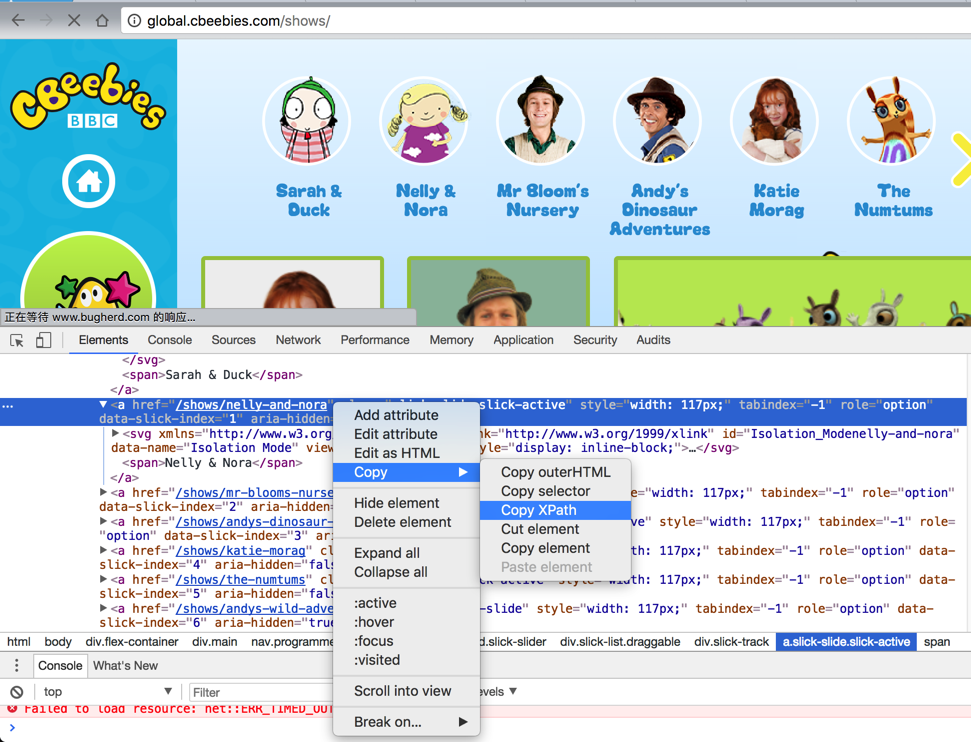

Next, try to extract what we actually need here: the URLs of the sub-pages.

/html/body/div[2]/div[2]/nav/div/div/div/a[2]

/html/body/div[2]/div[2]/nav/div/div/div/a[3]

Then try:

/html/body/div[2]/div[2]/nav/div/div/div/a

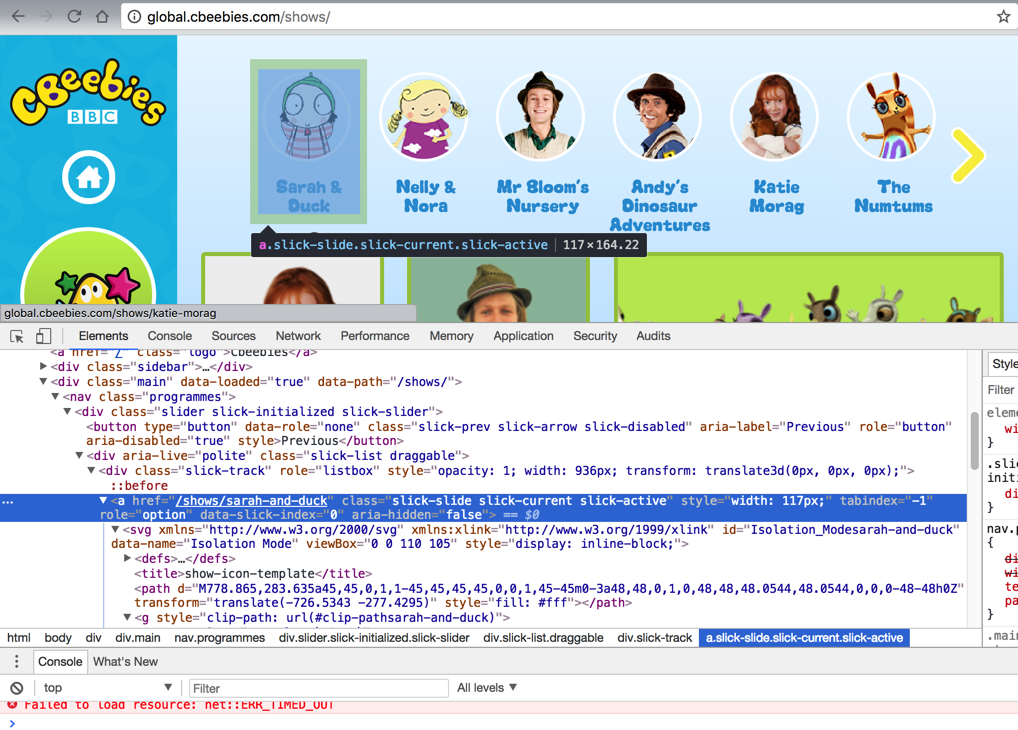

The results are all empty:

>>> response.xpath("//html/body/div[2]/div[2]/nav/div/div/div/a")

[]

>>> response.xpath("/html/body/div[2]/div[2]/nav/div/div/div/a")

[]

>>> response.xpath("/html/body/div[2]/div[2]/nav/div/div/div")

[]

>>> response.xpath("/html/body/div[2]/div[2]/nav")

[<Selector xpath='/html/body/div[2]/div[2]/nav' data=u'<nav class="programmes"><div class="slid'>]

>>> response.xpath("/html/body/div[2]/div[2]/nav/div")

[<Selector xpath='/html/body/div[2]/div[2]/nav/div' data=u'<div class="slider"><a href="/shows/sara'>]

>>> response.xpath("/html/body/div[2]/div[2]/nav/div/div")

[]

So, following another reference, I tried other ways of writing the XPath.

The results are empty as well:

>>> response.xpath('//div[@class="slick-track"]')

[]

>>> response.xpath('//nav[@class="programmes"]')

[<Selector xpath='//nav[@class="programmes"]' data=u'<nav class="programmes"><div class="slid'>]

>>> response.xpath('//nav[@class="programmes"]/div[@class="slider slick-initialized slick-slider"]')

[]

>>> response.xpath('//nav[@class="programmes"]//div[@class="slick-list draggable"]')

[]

>>> response.xpath('//div[@aria-live="polite"]')

[]

>>> response.xpath('//div[contains(@class, "slick-list")]')

[]