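Background:

[Record] Crawling cbeebies.com with Python's Scrapy

After that post, and having already used PyCharm to set breakpoints and live-debug other Python programs,

I now want to do the same for a Scrapy project: set breakpoints in PyCharm and debug it live.

One thing I can anticipate: a Scrapy project is a slightly special case. It may involve multiple threads running at once, and the scrapy command offers different subcommands depending on the directory it is run from (for example, the crawl subcommand only shows up in scrapy --help when it is run from the project root).

Before debugging Scrapy with PyCharm, though, I first had to solve:

[Solved] PyCharm on Mac cannot find the installed Scrapy library: No module named scrapy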

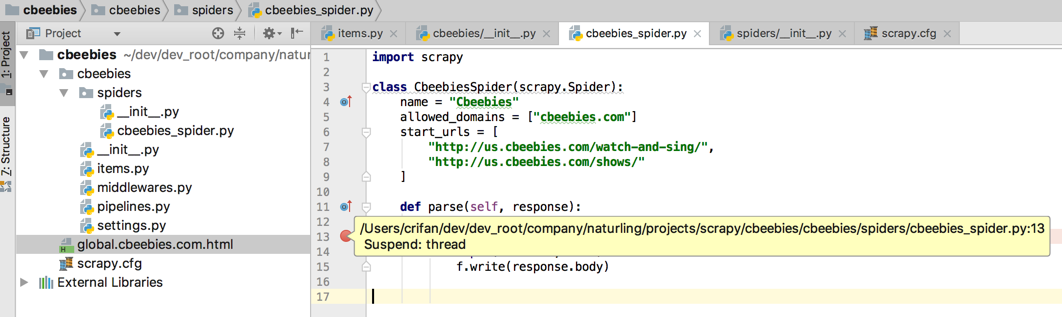

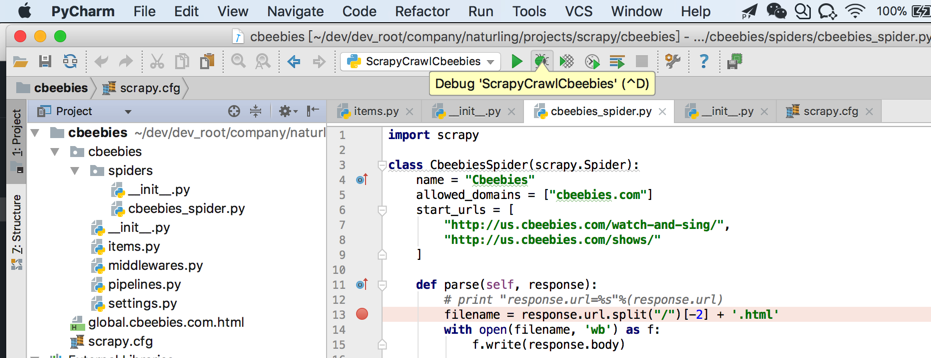

Add a breakpoint:

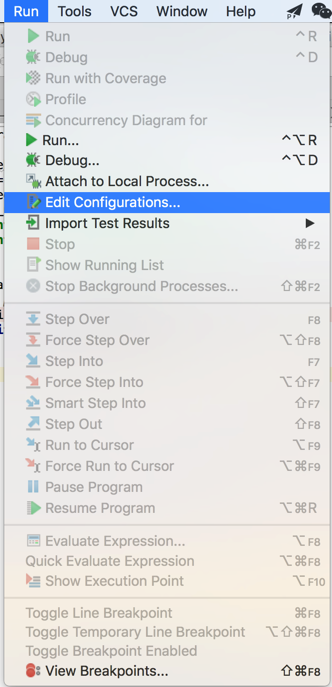

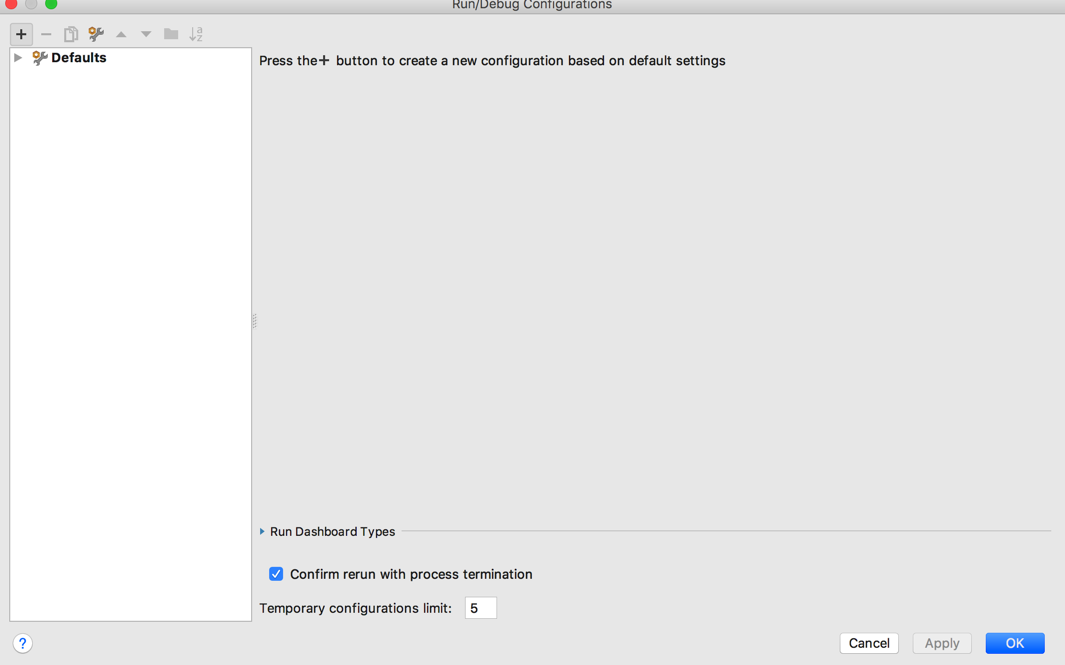

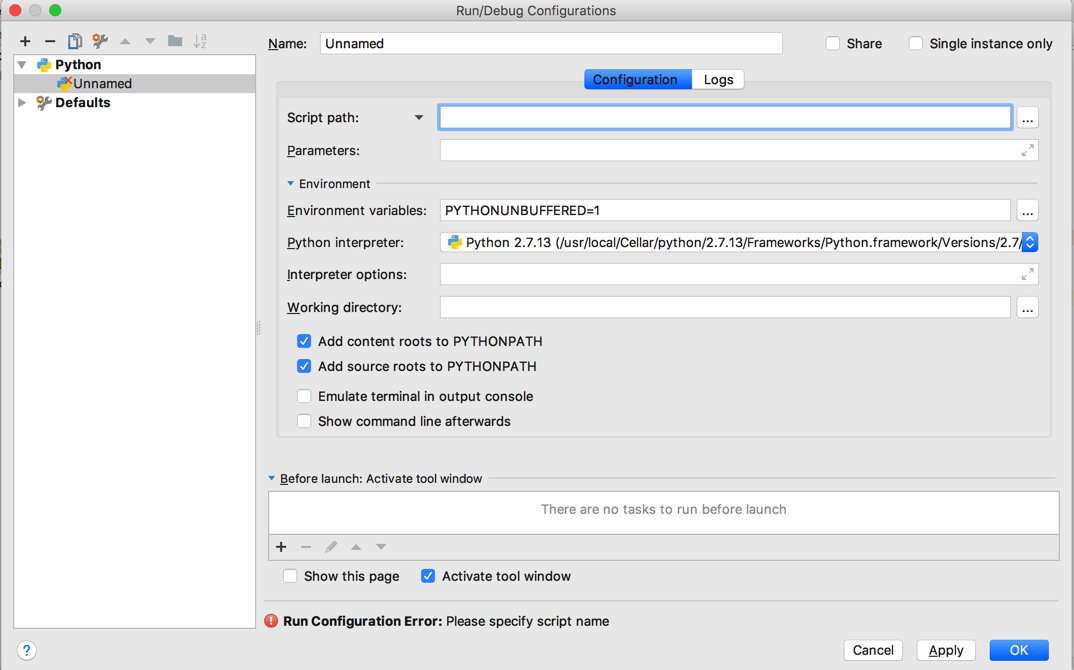

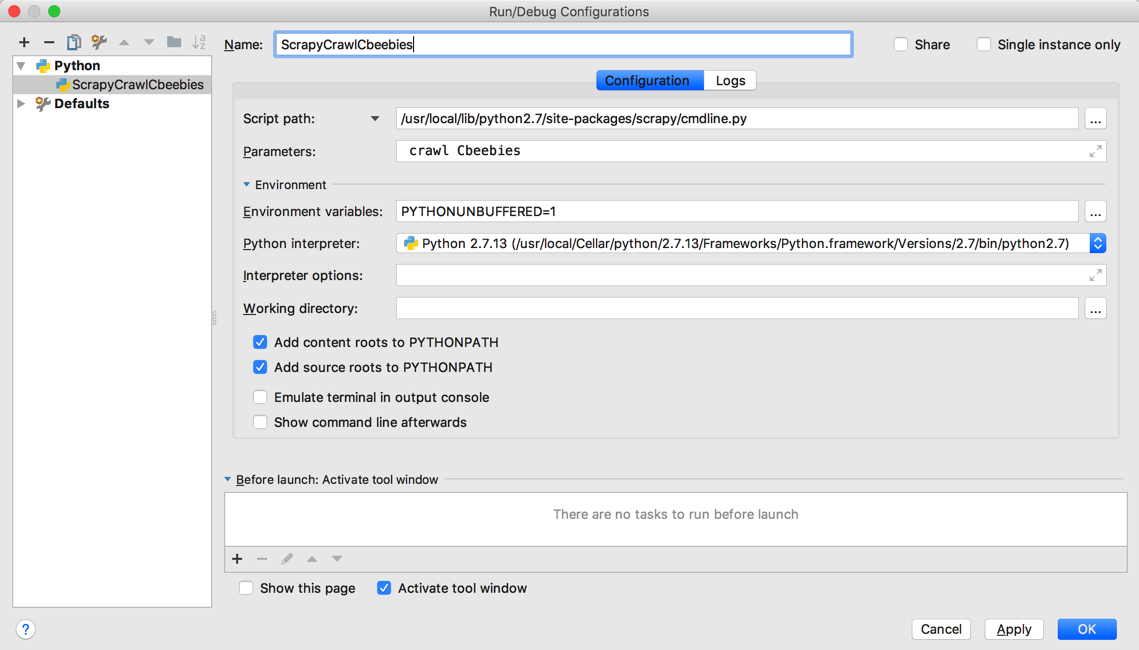

Next, set up the debug configuration:

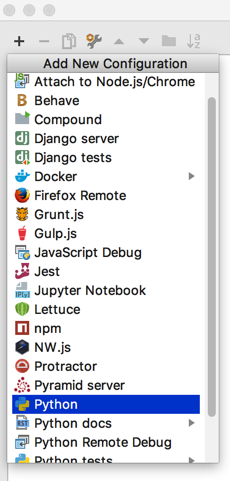

Click +

Start from the command that already runs correctly on the command line:

➜ cbeebies scrapy crawl Cbeebies

2018-01-09 20:29:26 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: cbeebies)

…

'scheduler/enqueued/memory': 4,

'start_time': datetime.datetime(2018, 1, 9, 12, 29, 27, 127494)}

2018-01-09 20:29:39 [scrapy.core.engine] INFO: Spider closed (finished)

➜ cbeebies pwd

/Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies

➜ cbeebies ll

total 48

drwxr-xr-x 10 crifan staff 320B 12 26 23:07 cbeebies

-rw-r--r-- 1 crifan staff 19K 1 9 20:29 global.cbeebies.com.html

-rw-r--r-- 1 crifan staff 260B 12 26 22:50 scrapy.cfg

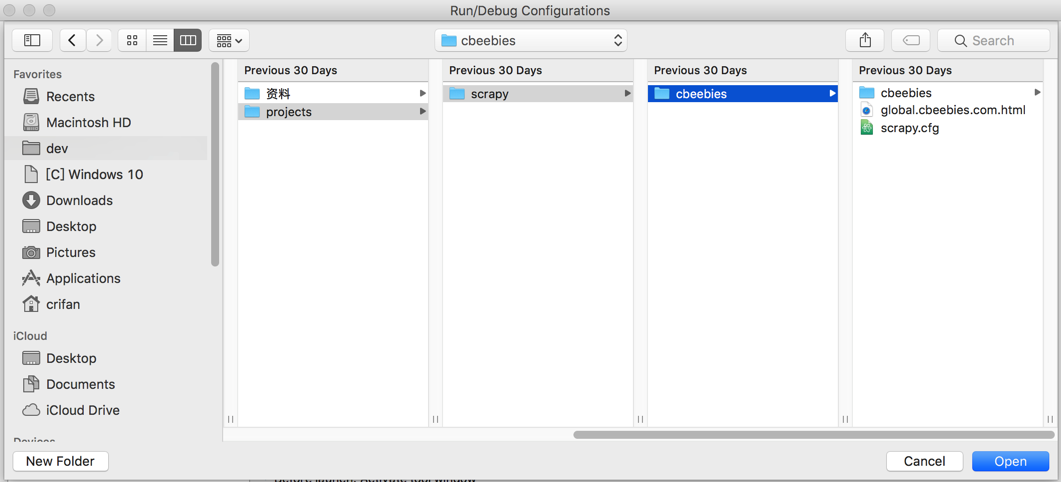

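So I went to set the corresponding path and command.

But after choosing the directory, Script path was still empty.

I did not see what I had expected:

Script path automatically becoming: /Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies

-> It seems a .py file has to be specified.

So the situation is this:

On the command line, what I do is:

in the directory: /Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies

run: scrapy crawl Cbeebies

This is not the usual case where a particular .py file can be given as the entry point,

so I did not know how to run it.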

pycharm debug scrapy

Debug Scrapy? – IDEs Support (IntelliJ Platform) | JetBrains

Anyone use PyCharm for Scrapy spider development? – IDEs Support (IntelliJ Platform) | JetBrains

python – How to use PyCharm to debug Scrapy projects – Stack Overflow

It turns out scrapy is really just a Python script -> find the full path of the corresponding cmdline.py, then add the arguments and run that.

➜ cbeebies which scrapy

/usr/local/bin/scrapy

➜ cbeebies ll /usr/local/bin/scrapy

-rwxr-xr-x 1 crifan admin 242B 12 26 20:49 /usr/local/bin/scrapy

➜ cbeebies cat /usr/local/bin/scrapy

#!/usr/local/opt/python/bin/python2.7

# -*- coding: utf-8 -*-

import re

import sys

from scrapy.cmdline import execute

if __name__ == '__main__':

    sys.argv[0] = re.sub(r'(-script\.pyw?|\.exe)?$', '', sys.argv[0])

    sys.exit(execute())

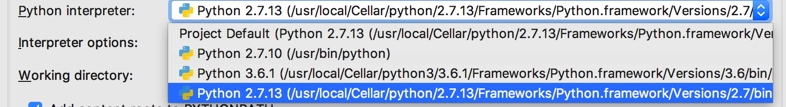

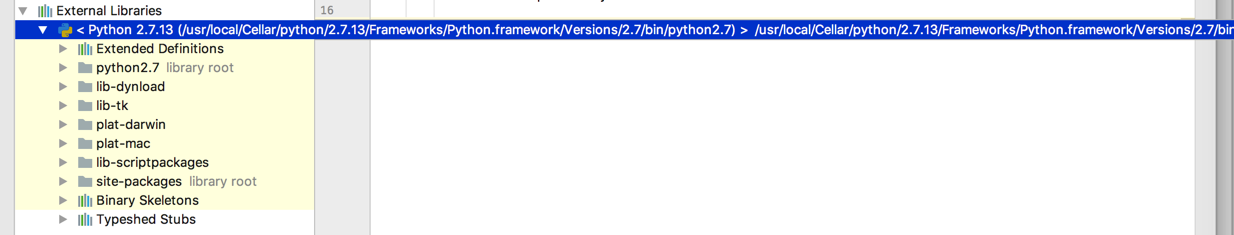

To locate cmdline.py, I started from the Python interpreter that is already known:

and:

and followed it to:

➜ cbeebies ll /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/bin/python2.7

-rwxr-xr-x 1 crifan admin 13K 5 6 2017 /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/bin/python2.7

…

➜ cbeebies ll /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/

total 2912

lrwxr-xr-x 1 crifan admin 17B 5 6 2017 Headers -> include/python2.7

-r-xr-xr-x 1 crifan admin 1.4M 5 6 2017 Python

drwxr-xr-x 4 crifan admin 128B 5 6 2017 Resources

drwxr-xr-x 23 crifan admin 736B 5 6 2017 bin

drwxr-xr-x 3 crifan admin 96B 5 6 2017 include

drwxr-xr-x 5 crifan admin 160B 5 6 2017 lib

➜ cbeebies ll /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/lib

total 0

lrwxr-xr-x 1 crifan admin 9B 5 6 2017 libpython2.7.dylib -> ../Python

drwxr-xr-x 5 crifan admin 160B 5 6 2017 pkgconfig

drwxr-xr-x 650 crifan admin 20K 5 6 2017 python2.7

…

➜ cbeebies ll /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/lib/python2.7

total 26096

-rw-r--r-- 1 crifan admin 22K 5 6 2017 BaseHTTPServer.py

…

lrwxr-xr-x 1 crifan admin 54B 5 6 2017 site-packages -> ../../../../../../../../../lib/python2.7/site-packages

…

Then, for site-packages:

➜ cbeebies ll /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages

lrwxr-xr-x 1 crifan admin 54B 5 6 2017 /usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages -> ../../../../../../../../../lib/python2.7/site-packages

I followed the symlink further and finally found:

➜ cbeebies ll /usr/local/lib/python2.7/site-packages/

Automat-0.6.0.dist-info/ exampleproj/ requests-2.18.4.dist-info/

OpenSSL/ hyperlink/ scrapy/

PyDispatcher-2.0.5.dist-info/ hyperlink-17.3.1.dist-info/ selenium/

…

➜ cbeebies ll /usr/local/lib/python2.7/site-packages/scrapy

total 824

-rw-r--r-- 1 crifan admin 6B 12 26 20:41 VERSION

-rw-r--r-- 1 crifan admin 1.1K 12 26 20:41 __init__.py

-rw-r--r-- 1 crifan admin 1.6K 12 26 20:49 __init__.pyc

-rw-r--r-- 1 crifan admin 77B 12 26 20:41 __main__.py

-rw-r--r-- 1 crifan admin 300B 12 26 20:49 __main__.pyc

-rw-r--r-- 1 crifan admin 990B 12 26 20:41 _monkeypatches.py

-rw-r--r-- 1 crifan admin 956B 12 26 20:49 _monkeypatches.pyc

-rw-r--r-- 1 crifan admin 5.6K 12 26 20:41 cmdline.py

-rw-r--r-- 1 crifan admin 7.1K 12 26 20:49 cmdline.pyc

-rw-r--r-- 1 crifan admin 259B 12 26 20:41 command.py

-rw-r--r-- 1 crifan admin 465B 12 26 20:49 command.pyc

drwxr-xr-x 32 crifan admin 1.0K 12 26 20:49 commands

-rw-r--r-- 1 crifan admin 486B 12 26 20:41 conf.py

-rw-r--r-- 1 crifan admin 602B 12 26 20:49 conf.pyc

…

drwxr-xr-x 4 crifan admin 128B 12 26 20:49 templates

drwxr-xr-x 80 crifan admin 2.5K 12 26 20:49 utils

drwxr-xr-x 8 crifan admin 256B 12 26 20:49 xlib

➜ cbeebies ll /usr/local/lib/python2.7/site-packages/scrapy/cmdline.py

-rw-r--r-- 1 crifan admin 5.6K 12 26 20:41 /usr/local/lib/python2.7/site-packages/scrapy/cmdline.py
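The manual symlink chase above can probably be shortened by asking the interpreter itself where a module lives. The following is a minimal sketch, not something I ran at the time: it assumes Python 3 (importlib.util.find_spec does not exist on the Python 2.7 used in this post), and module_file is a helper name made up for illustration. It has to be run with the same interpreter that PyCharm is configured to use, otherwise it may report a different site-packages.

```python
import importlib.util


def module_file(dotted_name):
    """Return the file path of an importable module, or None if it cannot be found.

    module_file is a hypothetical helper written for this post.
    """
    try:
        spec = importlib.util.find_spec(dotted_name)
    except ImportError:
        # Raised when the parent package (e.g. scrapy) is not installed.
        return None
    if spec is None:
        return None
    return spec.origin


if __name__ == "__main__":
    # scrapy.cmdline is the module the scrapy launcher imports execute() from.
    print(module_file("scrapy.cmdline"))
```

The printed path is the value to paste into the Script path field of the run configuration.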

Then take a look at it:

➜ cbeebies cat /usr/local/lib/python2.7/site-packages/scrapy/cmdline.py

from __future__ import print_function

import sys, os

import optparse

import cProfile

import inspect

import pkg_resources

import scrapy

from scrapy.crawler import CrawlerProcess

from scrapy.commands import ScrapyCommand

from scrapy.exceptions import UsageError

from scrapy.utils.misc import walk_modules

from scrapy.utils.project import inside_project, get_project_settings

from scrapy.settings.deprecated import check_deprecated_settings

def _iter_command_classes(module_name):

    # TODO: add `name` attribute to commands and and merge this function with

    # scrapy.utils.spider.iter_spider_classes

    for module in walk_modules(module_name):

        for obj in vars(module).values():

            if inspect.isclass(obj) and \

                    issubclass(obj, ScrapyCommand) and \

                    obj.__module__ == module.__name__ and \

                    not obj == ScrapyCommand:

                yield obj

…

if __name__ == '__main__':

    execute()

➜ cbeebies

Now the configuration can be filled in:

Script path: /usr/local/lib/python2.7/site-packages/scrapy/cmdline.py

Parameters: crawl Cbeebies

Then try debugging again:

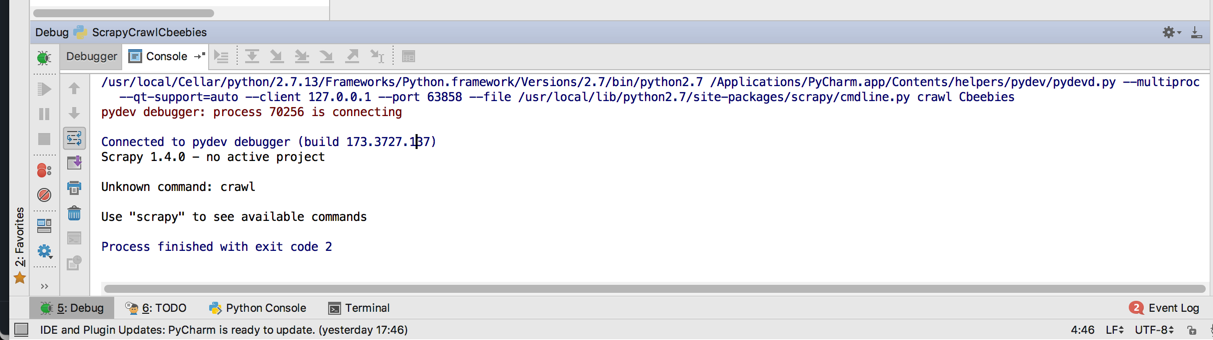

It failed with an error:

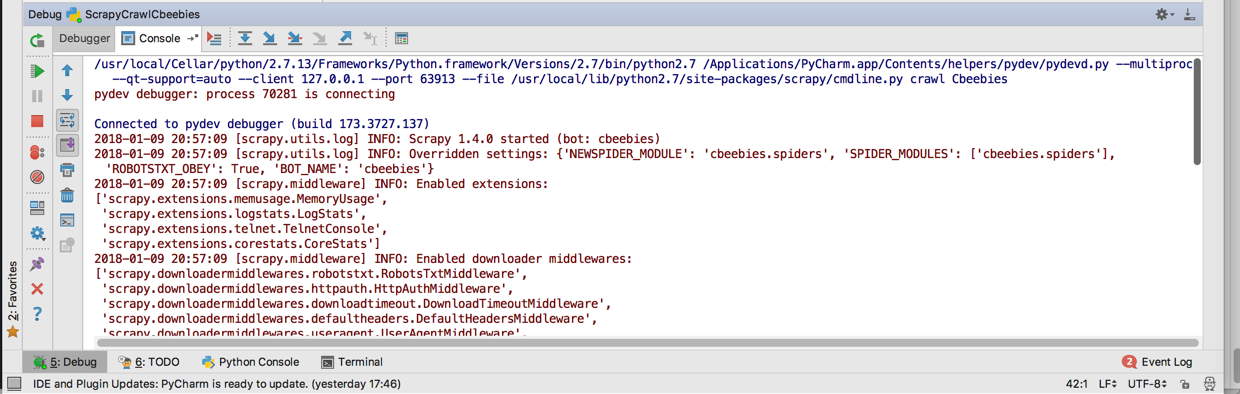

/usr/local/Cellar/python/2.7.13/Frameworks/Python.framework/Versions/2.7/bin/python2.7 /Applications/PyCharm.app/Contents/helpers/pydev/pydevd.py --multiproc --qt-support=auto --client 127.0.0.1 --port 63858 --file /usr/local/lib/python2.7/site-packages/scrapy/cmdline.py crawl Cbeebies

pydev debugger: process 70256 is connecting

Connected to pydev debugger (build 173.3727.137)

Scrapy 1.4.0 - no active project

Unknown command: crawl

Use "scrapy" to see available commands

Process finished with exit code 2

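It seems to be saying that no project was found, which is why crawl is not recognized?

Reference:

https://stackoverflow.com/questions/21788939/how-to-use-pycharm-to-debug-scrapy-projects

It looks like the Working directory has to be set to the project root (the directory containing scrapy.cfg).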
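Why the working directory matters: Scrapy treats a run as being "inside a project" when it can find a scrapy.cfg in the current directory or one of its parents. The sketch below only illustrates that lookup idea; it is not Scrapy's own code, and find_scrapy_cfg is a name made up for this post.

```python
import os


def find_scrapy_cfg(start_dir):
    """Walk upward from start_dir and return the first scrapy.cfg found, else None.

    Hypothetical helper that illustrates the lookup; not part of Scrapy.
    """
    current = os.path.abspath(start_dir)
    while True:
        candidate = os.path.join(current, "scrapy.cfg")
        if os.path.isfile(candidate):
            return candidate
        parent = os.path.dirname(current)
        if parent == current:  # reached the filesystem root without a match
            return None
        current = parent


if __name__ == "__main__":
    # Shows which project, if any, the current working directory belongs to.
    print(find_scrapy_cfg(os.getcwd()))
```

With the run configuration's working directory left at its default (not the project root), a lookup like this finds nothing, which matches the "no active project" message.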

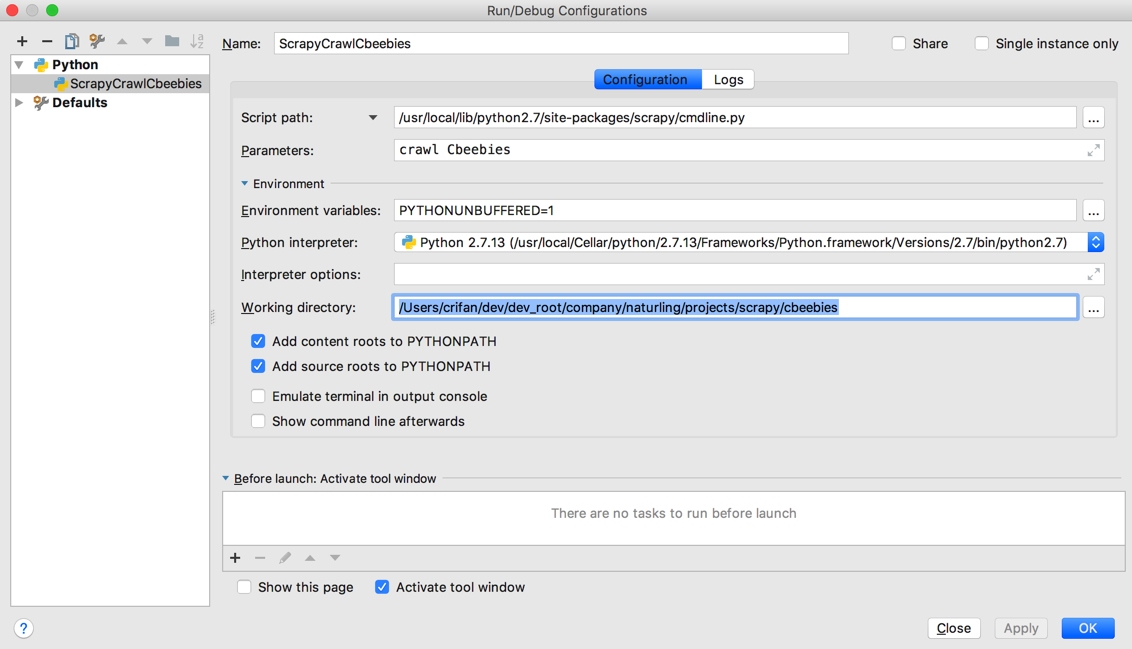

So add it:

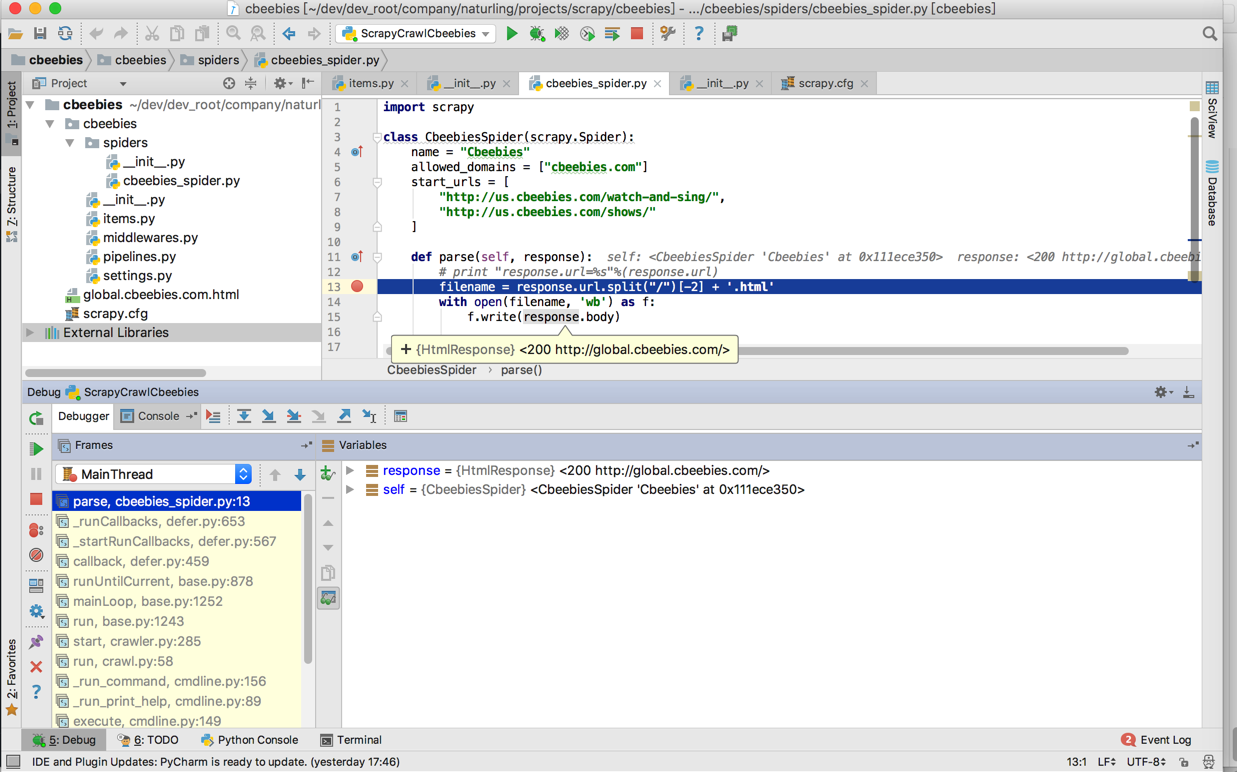

Then debug again:

Sure enough, the debug run now starts:

and it stops at the breakpoint:

It works really well.

From here on I can debug comfortably.
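To summarize what the working configuration amounts to, here is a rough sketch of how the three fields (Script path, Parameters, Working directory) map onto a plain process launch. It assumes Python 3.7+ for capture_output; run_script is a hypothetical helper, and PyCharm additionally wraps the call in its pydevd debugger, which is left out here.

```python
import subprocess
import sys


def run_script(script_path, args, working_dir, python=sys.executable):
    """Run `python script_path args...` with working_dir as the current directory.

    Hypothetical helper: script_path ~ Script path, args ~ Parameters,
    working_dir ~ Working directory in the PyCharm run configuration.
    """
    return subprocess.run(
        [python, script_path] + list(args),
        cwd=working_dir,
        capture_output=True,
        text=True,
    )


# Example with the values from this post (not executed here):
# result = run_script(
#     "/usr/local/lib/python2.7/site-packages/scrapy/cmdline.py",
#     ["crawl", "Cbeebies"],
#     "/Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies",
#     python="/usr/local/opt/python/bin/python2.7",
# )
# print(result.stdout, result.stderr)
```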

Note:

See also:

pycharm下虚拟环境执行并调试scrapy爬虫程序 – 简书

pycharm下打开、执行并调试scrapy爬虫程序 – 博客堂 – CSDN博客

Crawling with Scrapy – How to Debug Your Spider – Scraping Authority

Creating my own launcher script for Scrapy and running the crawl through cmdline should, in theory, also work. I did not try it.
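For reference, such a launcher script might look roughly like the sketch below. It is untested in this post and rests on assumptions: the file (say run.py, a made-up name) sits in the project root next to scrapy.cfg, and build_argv is a helper invented here. The only Scrapy call used is scrapy.cmdline.execute, the same function the scrapy launcher script shown earlier calls.

```python
import os


def build_argv(spider_name):
    """Build the argument list equivalent to `scrapy crawl <spider_name>`."""
    # execute() treats the first element as the program name.
    return ["scrapy", "crawl", spider_name]


def main():
    try:
        # Imported lazily so the file still loads where Scrapy is missing.
        from scrapy.cmdline import execute
    except ImportError:
        print("Scrapy is not installed for this interpreter")
        return
    # Assumption: this file lives in the project root, next to scrapy.cfg.
    os.chdir(os.path.dirname(os.path.abspath(__file__)))
    execute(build_argv("Cbeebies"))


if __name__ == "__main__":
    main()
```

With a script like this, the PyCharm run configuration could point Script path at the script itself, and breakpoints in the spider should be hit the same way.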