Background:

[Solved] Using MediaRecorder after getUserMedia to get microphone recording data

While working on that, I used this code:

<code> function testMediaRecorder(mediaStream){

console.log("testMediaRecorder: mediaStream=%o", mediaStream);

const options = {mimeType: 'audio/webm'};

if (MediaRecorder.isTypeSupported(options.mimeType)) {

console.log("support options=%o", options);

} else {

console.log(options.mimeType + ' is not Supported');

}

const recordedChunks = [];

const mediaRecorder = new MediaRecorder(mediaStream, options);

console.log("mediaRecorder=%o", mediaRecorder);

mediaRecorder.addEventListener('dataavailable', function(e) {

console.log("dataavailable: e.data.size=%d, e.data=%o", e.data.size, e.data);

if (e.data.size > 0) {

recordedChunks.push(e.data);

console.log("recordedChunks=%o", recordedChunks);

}

if (shouldStop === true && stopped === false) {

mediaRecorder.stop();

stopped = true;

console.log("stopped=%s", stopped);

}

});

mediaRecorder.addEventListener('stop', function() {

// downloadLink.href = URL.createObjectURL(new Blob(recordedChunks));

// downloadLink.download = 'acetest.wav';

var blobFile = URL.createObjectURL(new Blob(recordedChunks));

console.log("blobFile=%o", blobFile);

$("#downloadSpeakAudio").attr("href", blobFile); // link to the recorded data, not the filename

var curDate = new Date();

console.log("curDate=%o", curDate);

var curDatetimeStr = curDate.Format("yyyyMMdd_HHmmss");

console.log("curDatetimeStr=%o", curDatetimeStr);

var speakAudioFilename = curDatetimeStr + ".wav"; // extension only: the recorded data itself is WebM, not WAV

console.log("speakAudioFilename=%o", speakAudioFilename);

$("#downloadSpeakAudio").attr("download", speakAudioFilename);

});

console.log("before start: mediaRecorder.state=%s", mediaRecorder.state);

mediaRecorder.start();

// mediaRecorder.start(100);

console.log("after start: mediaRecorder.state=%s", mediaRecorder.state);

}

</code>But execution never reached the dataavailable handler,

so I could not get the microphone recording data.
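The stop handler above calls curDate.Format("yyyyMMdd_HHmmss"), which is not a built-in Date method, so it presumably comes from a helper attached to Date.prototype elsewhere in the page. A minimal sketch of a standalone equivalent, assuming local time is what is wanted (formatTimestamp is a hypothetical name, not something from the original code):

```javascript
// Hypothetical helper: formats a Date as "yyyyMMdd_HHmmss" in local time,
// without relying on a non-standard Date.prototype.Format extension.
function formatTimestamp(date) {
  const pad2 = (n) => String(n).padStart(2, "0");
  return (
    String(date.getFullYear()) +
    pad2(date.getMonth() + 1) + // getMonth() is zero-based
    pad2(date.getDate()) +
    "_" +
    pad2(date.getHours()) +
    pad2(date.getMinutes()) +
    pad2(date.getSeconds())
  );
}
```

It could then be used as var curDatetimeStr = formatTimestamp(new Date()); in place of the Format call.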

MediaRecorder dataavailable not work

MediaRecorder.ondataavailable – Web APIs | MDN

javascript – MediaRecorder.ondataavailable – data size is always 0 – Stack Overflow

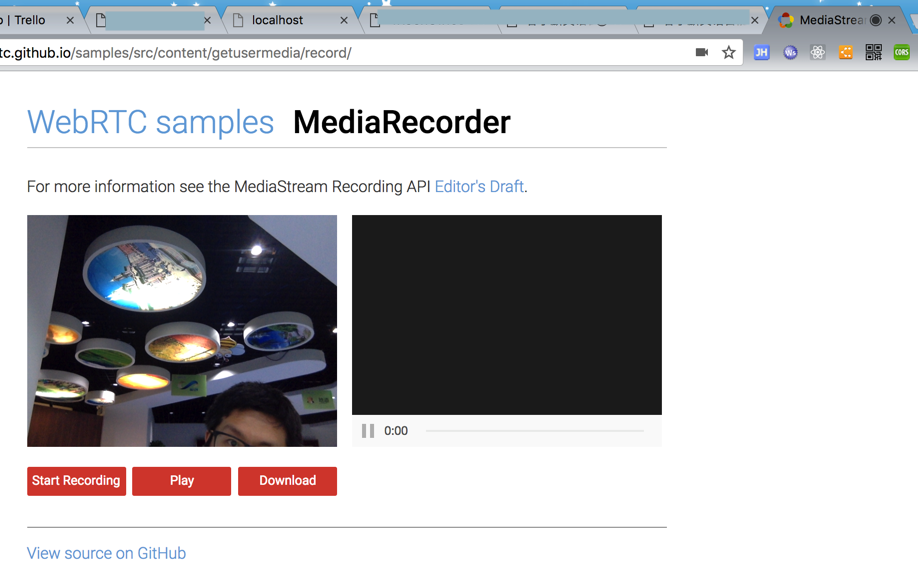

In Chrome, the camera can be turned on:

samples/src/content/getusermedia/record at gh-pages · webrtc/samples

Chrome’s experimental Web Platform Features enabled

MediaRecorder voice

js MediaRecorder voice

How to record and play audio in JavaScript – Bryan Jennings – Medium

Using the MediaStream Recording API – Web APIs | MDN

Debugging:

<code>console.log("before start: mediaRecorder.state=%s", mediaRecorder.state);

mediaRecorder.start();

console.log("after start: mediaRecorder.state=%s", mediaRecorder.state);

</code>The state values are correct:

<code>before start: mediaRecorder.state=inactive

main.js:196 after start: mediaRecorder.state=recording

</code>

Record Audio and Video with MediaRecorder | Web | Google Developers

MediaRecorder dataavailable not fire

javascript – MediaRecorder ondataavailable work successfully once – Stack Overflow

javascript – MediaStreamRecorder doesn’t fire the ondataavailable event – Stack Overflow

“2.2. Attributes

stream, of type MediaStream, readonly

The MediaStream to be recorded.

mimeType, of type DOMString, readonly

The MIME type [RFC2046] that has been selected as the container for recording. This entry includes all the parameters to the base mimeType. The UA should be able to play back any of the MIME types it supports for recording. For example, it should be able to display a video recording in the HTML <video> tag. The default value for this property is platform-specific.

mimeType specifies the media type and container format for the recording via a type/subtype combination, with the codecs and/or profiles parameters [RFC6381] specified where ambiguity might arise. Individual codecs might have further optional specific parameters.

state, of type RecordingState, readonly

The current state of the MediaRecorder object. When the MediaRecorder is created, the UA MUST set this attribute to inactive.

onstart, of type EventHandler

Called to handle the start event.

onstop, of type EventHandler

Called to handle the stop event.

ondataavailable, of type EventHandler

Called to handle the dataavailable event. The Blob of recorded data is contained in this event and can be accessed via its data attribute.

onpause, of type EventHandler

Called to handle the pause event.

onresume, of type EventHandler

Called to handle the resume event.

onerror, of type EventHandler

Called to handle a MediaRecorderErrorEvent.

videoBitsPerSecond, of type unsigned long, readonly

The value of the Video encoding target bit rate that was passed to the Platform (potentially truncated, rounded, etc), or the calculated one if the user has specified bitsPerSecond.

audioBitsPerSecond, of type unsigned long, readonly

The value of the Audio encoding target bit rate that was passed to the Platform (potentially truncated, rounded, etc), or the calculated one if the user has specified bitsPerSecond.”

Stream capturing with MediaRecorder · TryCatch

Trying a different way of writing it:

<code> // mediaRecorder.addEventListener('dataavailable', function(e) {

mediaRecorder.ondataavailable = function(e) {

console.log("dataavailable: e.data.size=%d, e.data=%o", e.data.size, e.data);

if (e.data.size > 0) {

recordedChunks.push(e.data);

console.log("recordedChunks=%o", recordedChunks);

}

if (shouldStop === true && stopped === false) {

mediaRecorder.stop();

stopped = true;

console.log("stopped=%s", stopped);

}

// });

};

</code>The problem remained.

Reference:

samples/main.js at gh-pages · webrtc/samples

Checking whether the codecs are perhaps not supported:

<code> if (MediaRecorder.isTypeSupported(options.mimeType)) {

console.log("support options=%o", options);

} else {

console.log(options.mimeType + ' is not Supported');

options = {mimeType: 'video/webm;codecs=vp8'};

if (!MediaRecorder.isTypeSupported(options.mimeType)) {

console.log(options.mimeType + ' is not Supported');

options = {mimeType: 'video/webm'};

if (!MediaRecorder.isTypeSupported(options.mimeType)) {

console.log(options.mimeType + ' is not Supported');

options = {mimeType: ''};

}

}

}

</code>The log shows the type is supported:

support options={mimeType: "video/webm;codecs=vp9"}
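The nested fallback checks above can be flattened into a loop over candidate types. A minimal sketch, assuming the support check is passed in as a function so that the helper can also be tried outside a browser; pickSupportedMimeType and the candidate list are illustrative names, not part of the MediaRecorder API:

```javascript
// Hypothetical helper: returns the first candidate MIME type accepted by
// isSupported, or "" so that the browser can fall back to its default.
function pickSupportedMimeType(candidates, isSupported) {
  for (const mimeType of candidates) {
    if (isSupported(mimeType)) {
      return mimeType;
    }
  }
  return "";
}

// Possible use in a browser (audio-only candidates, as an example):
// const mimeType = pickSupportedMimeType(
//   ["audio/webm;codecs=opus", "audio/webm"],
//   (t) => MediaRecorder.isTypeSupported(t)
// );
// const options = mimeType ? { mimeType } : {};
```

Keeping the candidates in a list makes it easy to match them to what getUserMedia actually requested (audio types for an audio-only stream).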

Changed to:

<code>// mediaRecorder.start();

mediaRecorder.start(100);

</code>

With that, data seems to arrive,

and after clicking stop the file can apparently be obtained as well:

<code>recordedChunks=(105) [Blob(653), Blob(689), Blob(780), Blob(780), Blob(780), Blob(780), Blob(788), Blob(777), Blob(780), Blob(780), Blob(780), Blob(780), Blob(854), Blob(731), Blob(769), Blob(780), Blob(783), Blob(783), Blob(783), Blob(868), Blob(817), Blob(793), Blob(797), Blob(787), Blob(783), Blob(789), Blob(768), Blob(761), Blob(812), Blob(809), Blob(771), Blob(797), Blob(789), Blob(791), Blob(787), Blob(787), Blob(787), Blob(787), Blob(787), Blob(769), Blob(720), Blob(838), Blob(821), Blob(787), Blob(787), Blob(776), Blob(792), Blob(759), Blob(692), Blob(786), Blob(785), Blob(780), Blob(780), Blob(780), Blob(804), Blob(833), Blob(850), Blob(787), Blob(787), Blob(787), Blob(787), Blob(787), Blob(787), Blob(787), Blob(772), Blob(720), Blob(844), Blob(812), Blob(784), Blob(749), Blob(753), Blob(790), Blob(746), Blob(900), Blob(782), Blob(693), Blob(755), Blob(800), Blob(879), Blob(795), Blob(672), Blob(785), Blob(785), Blob(780), Blob(780), Blob(780), Blob(780), Blob(780), Blob(780), Blob(810), Blob(791), Blob(753), Blob(854), Blob(773), Blob(831), Blob(817), Blob(787), Blob(787), Blob(787), Blob(772), …] main.js:208 blobFile="blob:null/f24084d6-8674-4b1e-b7f7-70081c69c022" main.js:212 curDate=Wed May 16 2018 18:26:42 GMT+0800 (CST) main.js:214 curDatetimeStr="20180516_182642" main.js:216 speakAudioFilename="20180516_182642.wav" </code>

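So at this point it looks like this:

passing a time in milliseconds to mediaRecorder.start() makes the dataavailable handler get reached.

Looking up the start() parameter: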

MediaRecorder js api

MediaRecorder – Web APIs | MDN

“MediaRecorder.start()

Begins recording media; this method can optionally be passed a timeslice argument with a value in milliseconds. If this is specified, the media will be captured in separate chunks of that duration, rather than the default behavior of recording the media in a single large chunk.”

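start() accepts an argument that works like an interval: the number of milliseconds of media captured into each chunk, with one dataavailable event per chunk.

If it is omitted, the default is to keep the whole recording as one single large chunk.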

MediaRecorder – Web API Interfaces | MDN

MediaRecorder.start() – Web API Interfaces | MDN

“timeslice Optional

The number of milliseconds to record into each Blob. If this parameter isn’t included, the entire media duration is recorded into a single Blob unless the requestData() method is called to obtain the Blob and trigger the creation of a new Blob into which the media continues to be recorded.”

So my impression is:

recording was in fact already running, but requestData() has to be called before the recorded data is delivered?
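According to the documentation quoted above, a Blob can be delivered because of a timeslice, a requestData() call, or the end of the recording. In all of these cases the chunks can be gathered in one place and joined once recording ends. A minimal sketch, assuming a recorder-like object that supports addEventListener and fires dataavailable events carrying a Blob in event.data, followed by a stop event; collectRecording and onComplete are hypothetical names:

```javascript
// Hypothetical helper: gathers every non-empty chunk and, when the recorder
// stops, passes one combined Blob (plus the chunk count) to onComplete.
function collectRecording(recorder, mimeType, onComplete) {
  const chunks = [];
  recorder.addEventListener("dataavailable", (event) => {
    // An empty Blob can arrive (for example right after requestData()).
    if (event.data && event.data.size > 0) {
      chunks.push(event.data);
    }
  });
  recorder.addEventListener("stop", () => {
    // The chunks are pieces of one stream, so join them in arrival order.
    onComplete(new Blob(chunks, { type: mimeType }), chunks.length);
  });
}
```

The same handler then works unchanged for start(), start(100), and requestData(); only the number of chunks differs.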

MediaRecorder() – Web APIs | MDN

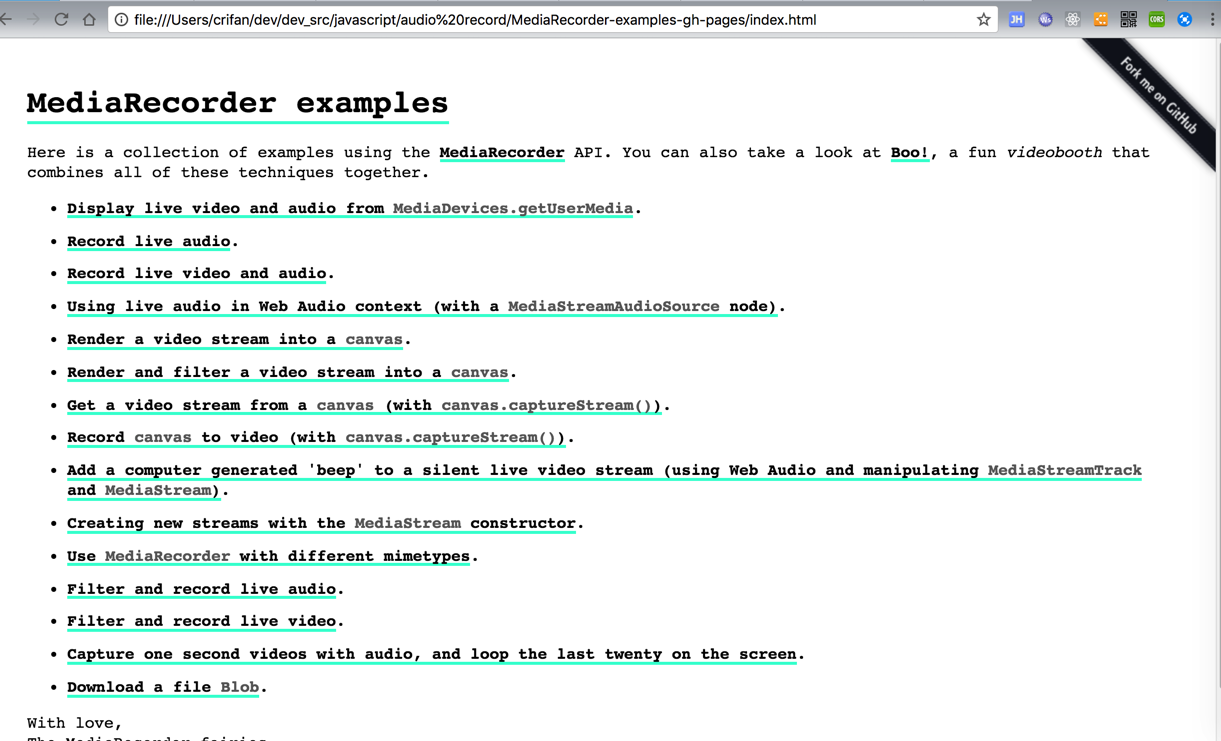

mozdevs/MediaRecorder-examples: MediaRecorder examples

I downloaded

https://codeload.github.com/mozdevs/MediaRecorder-examples/zip/gh-pages

and tried the demos.

Along the way I saw, in

Stream capturing with MediaRecorder · TryCatch

this list of MIME types and codecs:

<code># audio codecs

audio/webm

audio/webm;codecs=opus

# video codecs

video/webm

video/webm;codecs=avc1

video/webm;codecs=h264

video/webm;codecs=h264,opus

video/webm;codecs=vp8

video/webm;codecs=vp8,opus

video/webm;codecs=vp9

video/webm;codecs=vp9,opus

video/webm;codecs=h264,vp9,opus

video/webm;codecs=vp8,vp9,opus

video/x-matroska

video/x-matroska;codecs=avc1

</code>

whereas here, following someone else's code, I was using:

<code>const options = {mimeType: 'video/webm;codecs=vp9'};

.getUserMedia({ audio: true, video: false })

</code>My impression:

only audio is requested, with no video, yet the MIME type is set to a video one.

That looks like a mistake.

Changed to an audio type:

<code>const options = {mimeType: 'audio/webm'};

</code>Result:

the behaviour is unchanged:

ondataavailable is not called, but once start() is given a timeslice, data arrives again.

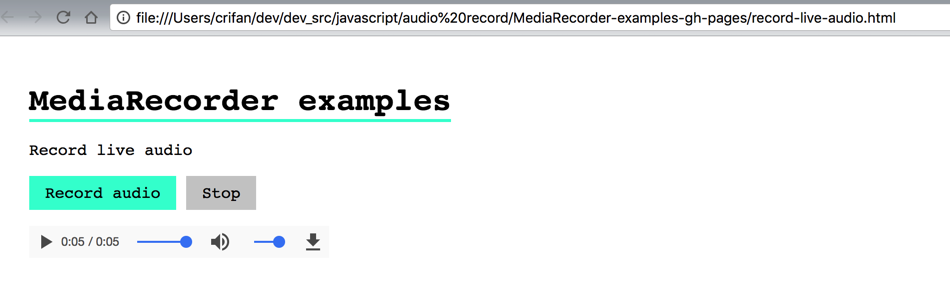

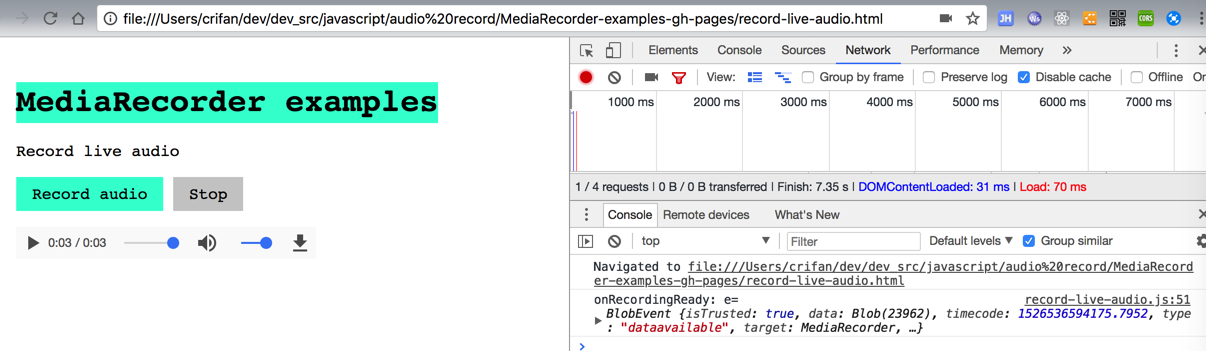

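I tried the demo,

and it can record.

Reading its code, dataavailable is only triggered when the recorder is stopped.

So let me also try what the official documentation describes: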

MediaRecorder – Web APIs | MDN

“MediaRecorder.requestData()

Requests a Blob containing the saved data received thus far (or since the last time requestData() was called). After calling this method, recording continues, but in a new Blob.”

Call requestData() and see whether the recording data comes back in one piece,

and whether the dataavailable handler is reached.

Code:

<code> $( "#stopSpeak" ).on( "click", function() {

console.log("#stopSpeak clicked");

shouldStop = true;

console.log("shouldStop=%s", shouldStop);

mediaRecorder.requestData();

});

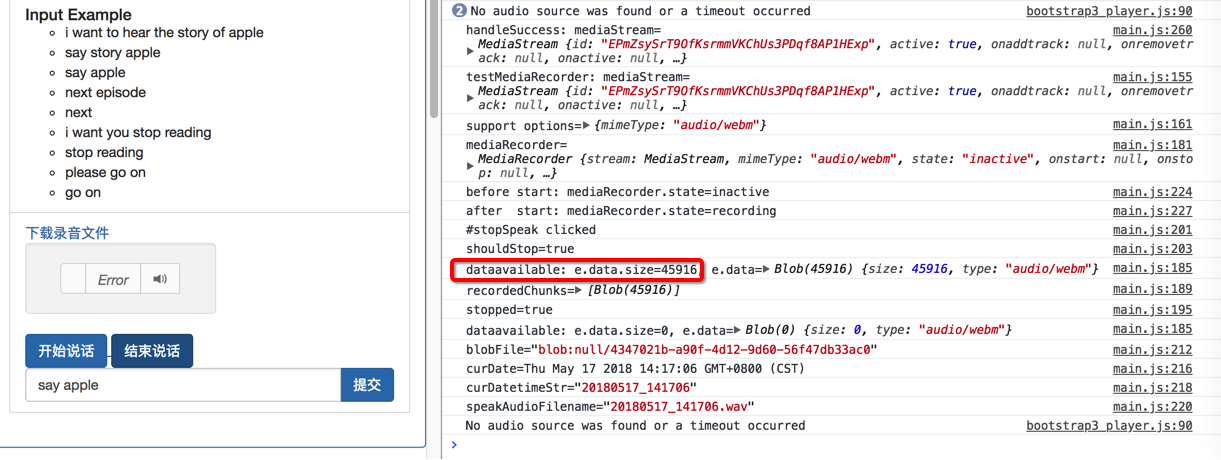

</code>效果:

的确是的:

<code>#stopSpeak clicked

main.js:203 shouldStop=true

main.js:185 dataavailable: e.data.size=45916, e.data=Blob(45916) {size: 45916, type: "audio/webm"}

main.js:189 recordedChunks=[Blob(45916)]

main.js:195 stopped=true

main.js:185 dataavailable: e.data.size=0, e.data=Blob(0) {size: 0, type: "audio/webm"}

main.js:212 blobFile="blob:null/4347021b-a90f-4d12-9d60-56f47db33ac0"

main.js:216 curDate=Thu May 17 2018 14:17:06 GMT+0800 (CST)

main.js:218 curDatetimeStr="20180517_141706"

main.js:220

</code>

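Still, I went on to follow the way the demo does it:

<code>// Stopping the recorder will eventually trigger the `dataavailable` event and we can complete the recording process

recorder.stop();

</code>

That states it clearly and confirms my guess above:

when start() is called without a timeslice, it is MediaRecorder's stop() that makes dataavailable deliver the data.

Then, with this code: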

<code> function testMediaRecorder(mediaStream){

console.log("testMediaRecorder: mediaStream=%o", mediaStream);

// const options = {mimeType: 'video/webm;codecs=vp9'};

const options = {mimeType: 'audio/webm'};

if (MediaRecorder.isTypeSupported(options.mimeType)) {

console.log("support options=%o", options);

} else {

console.log(options.mimeType + ' is not Supported');

// options = {mimeType: 'video/webm;codecs=vp8'};

// if (!MediaRecorder.isTypeSupported(options.mimeType)) {

// console.log(options.mimeType + ' is not Supported');

// options = {mimeType: 'video/webm'};

// if (!MediaRecorder.isTypeSupported(options.mimeType)) {

// console.log(options.mimeType + ' is not Supported');

// options = {mimeType: ''};

// }

// }

}

// const recordedChunks = [];

const mediaRecorder = new MediaRecorder(mediaStream, options);

console.log("mediaRecorder=%o", mediaRecorder);

mediaRecorder.addEventListener('dataavailable', function(e) {

// mediaRecorder.ondataavailable = function(e) {

console.log("dataavailable: e.data.size=%d, e.data=%o", e.data.size, e.data);

// if (e.data.size > 0) {

// recordedChunks.push(e.data);

// console.log("recordedChunks=%o", recordedChunks);

// }

// if (shouldStop === true && stopped === false) {

// mediaRecorder.stop();

// stopped = true;

// console.log("stopped=%s", stopped);

// }

var inputAudio = document.getElementById('inputAudio');

// var inputAudio = $("#inputAudio");

console.log("inputAudio=o", inputAudio);

// e.data contains a blob representing the recording

var recordedBlob = e.data;

console.log("recordedBlob=o", recordedBlob);

// try {

// inputAudio.srcObject = recordedBlob;

// console.log("inputAudio.srcObject=%o", inputAudio.srcObject);

// } catch (error) {

// console.log("try set input audio srcObject error=%o", error);

//inputAudio.src = window.URL.createObjectURL(recordedBlob);

inputAudio.src = URL.createObjectURL(recordedBlob);

console.log("inputAudio.src=%o", inputAudio.src);

// }

inputAudio.load();

inputAudio.play();

});

// };

$( "#stopSpeak" ).on( "click", function() {

console.log("#stopSpeak clicked");

// shouldStop = true;

// console.log("shouldStop=%s", shouldStop);

// mediaRecorder.requestData();

mediaRecorder.stop();

});

// mediaRecorder.addEventListener('stop', function() {

// console.log("mediaRecorder stoped");

// // downloadLink.href = URL.createObjectURL(new Blob(recordedChunks));

// // downloadLink.download = 'acetest.wav';

// var blobFile = URL.createObjectURL(new Blob(recordedChunks));

// console.log("blobFile=%o", blobFile);

// $("#downloadSpeakAudio").attr("href", speakAudioFilename);

// var curDate = new Date();

// console.log("curDate=%o", curDate);

// var curDatetimeStr = curDate.Format("yyyyMMdd_HHmmss");

// console.log("curDatetimeStr=%o", curDatetimeStr);

// var speakAudioFilename = curDatetimeStr + ".wav"

// console.log("speakAudioFilename=%o", speakAudioFilename);

// $("#downloadSpeakAudio").attr("download", speakAudioFilename);

// });

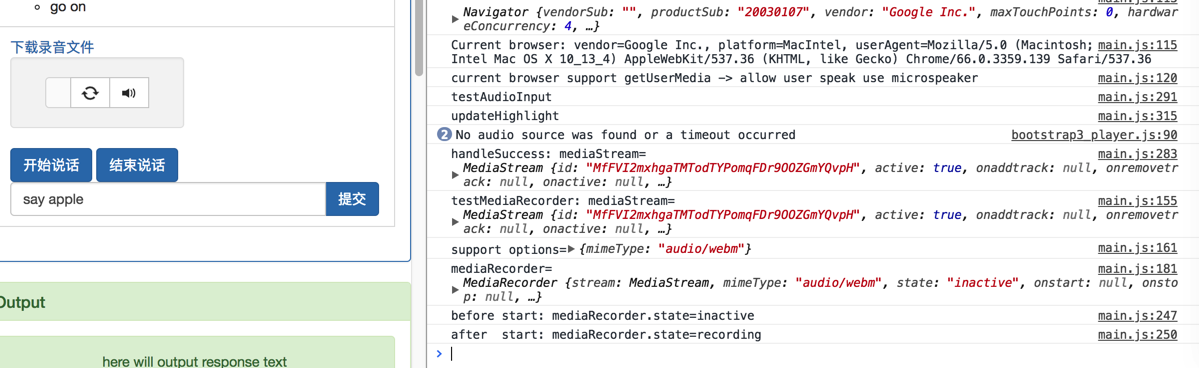

console.log("before start: mediaRecorder.state=%s", mediaRecorder.state);

mediaRecorder.start();

// mediaRecorder.start(100);

console.log("after start: mediaRecorder.state=%s", mediaRecorder.state);

}

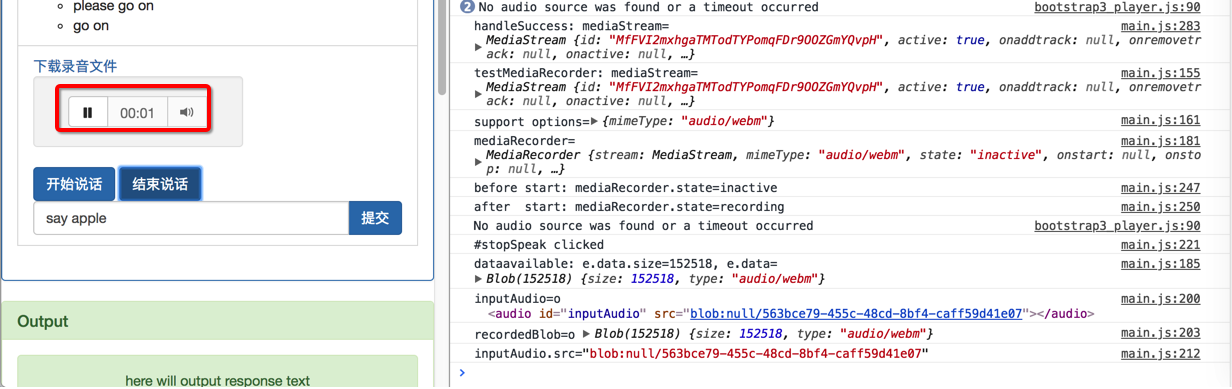

</code>是可以获得录音数据并点击播放的:

点击结束说话,是可以听到录音的:

<code>#stopSpeak clicked

main.js:185 dataavailable: e.data.size=152518, e.data=Blob(152518) {size: 152518, type: "audio/webm"}

main.js:200 inputAudio=o <audio id="inputAudio" src="blob:null/563bce79-455c-48cd-8bf4-caff59d41e07"></audio>

main.js:203 recordedBlob=o Blob(152518) {size: 152518, type: "audio/webm"}

main.js:212 inputAudio.src="blob:null/563bce79-455c-48cd-8bf4-caff59d41e07"

</code>

[Summary]

Here, the following code:

<code>

function testMediaRecorder(mediaStream){

console.log("testMediaRecorder: mediaStream=%o", mediaStream);

const options = {mimeType: 'audio/webm'};

if (MediaRecorder.isTypeSupported(options.mimeType)) {

console.log("support options=%o", options);

} else {

console.log(options.mimeType + ' is not Supported');

}

const mediaRecorder = new MediaRecorder(mediaStream, options);

console.log("mediaRecorder=%o", mediaRecorder);

mediaRecorder.addEventListener('dataavailable', function(e) {

console.log("dataavailable: e.data.size=%d, e.data=%o", e.data.size, e.data);

var inputAudio = document.getElementById('inputAudio');

console.log("inputAudio=%o", inputAudio);

// e.data contains a blob representing the recording

var recordedBlob = e.data;

console.log("recordedBlob=%o", recordedBlob);

inputAudio.src = URL.createObjectURL(recordedBlob);

console.log("inputAudio.src=%o", inputAudio.src);

inputAudio.load();

inputAudio.play();

});

$( "#stopSpeak" ).on( "click", function() {

console.log("#stopSpeak clicked");

mediaRecorder.stop();

});

console.log("before start: mediaRecorder.state=%s", mediaRecorder.state);

mediaRecorder.start();

console.log("after start: mediaRecorder.state=%s", mediaRecorder.state);

}

</code>does obtain the recording data.

A few points to note:

1. The following:

<code>mediaRecorder.addEventListener('dataavailable', function(e) {

…

});

</code>can also be written as:

<code>mediaRecorder.ondataavailable = function(e) {

...

};

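</code>and it works the same way.

That is, a dataavailable listener registered with addEventListener and a handler assigned to ondataavailable receive the same event. The only practical difference is that the property holds a single handler, while addEventListener can register several.

Postscript:

MediaRecorder.ondataavailable – Web APIs | MDN

shows both forms as well: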

<code>MediaRecorder.ondataavailable = function(event) { ... }

MediaRecorder.addEventListener('dataavailable', function(event) { ... })

</code>2. If you use:

<code>mediaRecorder.start(100); </code>

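that is, pass a 100 ms timeslice to start(), then the dataavailable event fires roughly every 100 ms,

-> and each event carries a Blob with just that slice of the recording. The chunks are pieces of one stream and are meant to be joined in order.

3. With just a plain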

<code>mediaRecorder.start(); </code>

call, no dataavailable event fires while recording is in progress.

Only when you call (for example, from a stop button's click handler):

<code>mediaRecorder.stop(); </code>

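is dataavailable fired,

-> and the data you get then is a Blob containing the whole recording.

4. In addition, calling (for example, from a stop button's click handler):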

<code>mediaRecorder.requestData(); </code>

has a similar effect to stop(): it also triggers dataavailable, but recording then continues into a new Blob.
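Point 3 can be wrapped in a small helper. A minimal sketch, assuming the recorder was started with start() and no timeslice, so that the single dataavailable event after stop() carries the whole recording; stopAndHandle and onBlob are hypothetical names:

```javascript
// Hypothetical helper: stops a recorder that was started without a timeslice
// and hands the single resulting Blob to a callback.
function stopAndHandle(recorder, onBlob) {
  if (recorder.state === "inactive") {
    return false; // nothing is being recorded, so there is nothing to stop
  }
  // { once: true } removes the listener after the first dataavailable event.
  recorder.addEventListener(
    "dataavailable",
    (event) => onBlob(event.data),
    { once: true }
  );
  recorder.stop(); // dataavailable is fired first, then the stop event
  return true;
}
```

In the page above this could be called from the #stopSpeak click handler, with onBlob setting inputAudio.src to URL.createObjectURL(blob). With a timeslice the callback would only see the last chunk, so the helper is not suitable for that case.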

When reposting, please credit: 在路上 » [Solved] In HTML5, MediaRecorder's dataavailable is never fired, so no recording data is obtained