折腾:

期间,已经基本上搞清楚了思路和选择

用Flask去封装百度的语音合成的接口,

内部是用Python的SDK

是直接用pip去安装

且要合适的处理token过期的事情:

如果返回dict,且发现是err_no是502的话,则确定是token无效或过期

则使用refresh_token去重新刷新获得有效的token

重新再去尝试一次

然后正常的话,返回得到mp3的二进制数据

然后弄好了上面的部分,再去考虑:

如何把mp3二进制数据,保存到哪里,返回可访问的url给外部接口使用

后续封装出来的接口,倒是也可以考虑支持两种:

- 直接返回mp3的url

- 返回mp3的二进制数据文件

而返回的类型,可以通过输入参数指定

先去找找:

其中先要去封装百度的语音合成api

其中先要去获取token

要在Flask的服务端,用http请求:

python http

所以去用requests

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | ➜ server pipenv install requestsInstalling requests…Looking in indexes: https://pypi.python.org/simpleCollecting requests Using cached https://files.pythonhosted.org/packages/49/df/50aa1999ab9bde74656c2919d9c0c085fd2b3775fd3eca826012bef76d8c/requests-2.18.4-py2.py3-none-any.whlCollecting idna<2.7,>=2.5 (from requests) Using cached https://files.pythonhosted.org/packages/27/cc/6dd9a3869f15c2edfab863b992838277279ce92663d334df9ecf5106f5c6/idna-2.6-py2.py3-none-any.whlCollecting urllib3<1.23,>=1.21.1 (from requests) Using cached https://files.pythonhosted.org/packages/63/cb/6965947c13a94236f6d4b8223e21beb4d576dc72e8130bd7880f600839b8/urllib3-1.22-py2.py3-none-any.whlCollecting chardet<3.1.0,>=3.0.2 (from requests) Using cached https://files.pythonhosted.org/packages/bc/a9/01ffebfb562e4274b6487b4bb1ddec7ca55ec7510b22e4c51f14098443b8/chardet-3.0.4-py2.py3-none-any.whlCollecting certifi>=2017.4.17 (from requests) Using cached https://files.pythonhosted.org/packages/7c/e6/92ad559b7192d846975fc916b65f667c7b8c3a32bea7372340bfe9a15fa5/certifi-2018.4.16-py2.py3-none-any.whlInstalling collected packages: idna, urllib3, chardet, certifi, requestsSuccessfully installed certifi-2018.4.16 chardet-3.0.4 idna-2.6 requests-2.18.4 urllib3-1.22Adding requests to Pipfile's [packages]…Pipfile.lock (aa619c) out of date, updating to (b165df)…Locking [dev-packages] dependencies…Locking [packages] dependencies…Updated Pipfile.lock (b165df)!Installing dependencies from Pipfile.lock (b165df)… 🐍 ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 23/23 — 0 |

期间去搞清楚python3的url的encode:

【已解决】Python中给url中的字符串进行encode编码

【总结】

最后就基本上封装出来对应的:

获取token

刷新token

返回合成的语音数据

了,

而如何加到Flask的API中,对外提供访问接口,则是普通的做法,此处再去加上tmp的file的api。

最后代码如下:

config.py

1 2 3 4 5 | # Audio Synthesis / TTSBAIDU_API_KEY = "SNxxxxxxcaz"BAIDU_SECRET_KEY = "47d7cxxxxx7ba"AUDIO_TEMP_FOLDER = "tmp/audio" |

app.py

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 351 352 353 354 355 356 357 358 359 360 361 362 363 364 365 366 367 368 369 370 371 372 373 374 375 376 377 378 379 380 381 382 383 384 385 386 387 388 389 390 391 392 393 394 395 396 397 398 399 400 401 402 403 404 405 406 407 408 409 410 411 412 413 414 415 416 417 418 419 420 421 422 423 424 425 426 427 428 429 430 431 432 433 434 435 436 437 438 439 440 441 442 443 444 | import osimport ioimport refrom urllib.parse import quoteimport jsonimport uuidimport requests################################################################################# Global Definitions################################################################################"""http://ai.baidu.com/docs#/TTS-API/top 500 不支持输入 501 输入参数不正确 502 token验证失败 503 合成后端错误"""BAIDU_ERR_NOT_SUPPORT_PARAM = 500BAIDU_ERR_PARAM_INVALID = 501BAIDU_ERR_TOKEN_INVALID = 502BAIDU_ERR_BACKEND_SYNTHESIS_FAILED = 503################################################################################# Global Variables################################################################################log = Noneapp = None"""{ "access_token": "24.569b3b5b470938a522ce60d2e2ea2506.2592000.1528015602.282335-11192483", "session_key": "9mzdDoR4p/oer6IHdcpJwlbK6tpH5rWqhjMi708ubA8vTgu1OToODZAXf7/963ZpEG7+yEdcdCxXq0Yp9VoSgFCFOSGEIA==", "scope": "public audio_voice_assistant_get audio_tts_post wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base", "refresh_token": "25.5acf5c4d9fdfdbe577e75f3f2fd137b8.315360000.1840783602.282335-11192483", "session_secret": "121fe91236ef88ab24b2ecab479427ea", "expires_in": 2592000}"""gCurBaiduRespDict = {} # get baidu token resp dictgTempAudioFolder = “"################################################################################# Global Function################################################################################def generateUUID(prefix = ""): generatedUuid4 = uuid.uuid4() generatedUuid4Str = str(generatedUuid4) newUuid = prefix + generatedUuid4Str return newUuid#----------------------------------------# Audio Synthesis / TTS#----------------------------------------def createAudioTempFolder(): """create foler to save later temp audio files""" global log, gTempAudioFolder # init audio temp folder for later store temp audio file audioTmpFolder = app.config["AUDIO_TEMP_FOLDER"] log.info("audioTmpFolder=%s", audioTmpFolder) curFolderAbsPath = os.getcwd() #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server' log.info("curFolderAbsPath=%s", curFolderAbsPath) audioTmpFolderFullPath = os.path.join(curFolderAbsPath, audioTmpFolder) log.info("audioTmpFolderFullPath=%s", audioTmpFolderFullPath) if not os.path.exists(audioTmpFolderFullPath): os.makedirs(audioTmpFolderFullPath) gTempAudioFolder = audioTmpFolderFullPath log.info("gTempAudioFolder=%s", gTempAudioFolder)def initAudioSynthesis(): """ init audio synthesis related: init token :return: """ getBaiduToken() createAudioTempFolder()def getBaiduToken(): """get baidu token""" global app, log, gCurBaiduRespDict getBaiduTokenUrlTemplate = "https://openapi.baidu.com/oauth/2.0/token?grant_type=client_credentials&client_id=%s&client_secret=%s" getBaiduTokenUrl = getBaiduTokenUrlTemplate % (app.config["BAIDU_API_KEY"], app.config["BAIDU_SECRET_KEY"]) log.info("getBaiduTokenUrl=%s", getBaiduTokenUrl) #https://openapi.baidu.com/oauth/2.0/token?grant_type=client_credentials&client_id=SNjsxxxxcaz&client_secret=47d7c0xxx7ba resp = requests.get(getBaiduTokenUrl) log.info("resp=%s", resp) respJson = resp.json() log.info("respJson=%s", respJson) #{'access_token': '24.8f1f35xxxx5.2592000.1xxx0.282335-11192483', 'session_key': '9mzxxxp5ZUHafqq8m+6KwgZmw==', 'scope': 'public audio_voice_assistant_get audio_tts_post wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base', 'refresh_token': '25.eb2xxxe.315360000.1841377320.282335-11192483', 'session_secret': 'c0a83630b7b1c46039e360de417d346e', 'expires_in': 2592000} if resp.status_code == 200: gCurBaiduRespDict = respJson log.info("get baidu token resp: %s", gCurBaiduRespDict) else: log.error("error while get baidu token: %s", respJson) #{'error': 'invalid_client', 'error_description': 'Client authentication failed'} #{'error': 'invalid_client', 'error_description': 'unknown client id'} #{'error': 'unsupported_grant_type', 'error_description': 'The authorization grant type is not supported'}def refreshBaiduToken(): """refresh baidu token when current token invalid""" global app, log, gCurBaiduRespDict if gCurBaiduRespDict: refreshBaiduTokenUrlTemplate = "https://openapi.baidu.com/oauth/2.0/token?grant_type=refresh_token&refresh_token=%s&client_id=%s&client_secret=%s" refreshBaiduTokenUrl = refreshBaiduTokenUrlTemplate % (gCurBaiduRespDict["refresh_token"], app.config["BAIDU_API_KEY"], app.config["BAIDU_SECRET_KEY"]) log.info("refreshBaiduTokenUrl=%s", refreshBaiduTokenUrl) #https://openapi.baidu.com/oauth/2.0/token?grant_type=refresh_token&refresh_token=25.1b7xxxxedbb99ea361.31536xx.1841379583.282335-11192483&client_id=SNjsggdYDNWtnlbKhxsPLcaz&client_secret=47dxxxxba resp = requests.get(refreshBaiduTokenUrl) log.info("resp=%s", resp) respJson = resp.json() log.info("respJson=%s", respJson) if resp.status_code == 200: gCurBaiduRespDict = respJson log.info("Ok to refresh baidu token response: %s", gCurBaiduRespDict) else: log.error("error while refresh baidu token: %s", respJson) else: log.error("Can't refresh baidu token for previous not get token")def baiduText2Audio(unicodeText): """call baidu text2audio to generate mp3 audio from text""" global app, log, gCurBaiduRespDict log.info("baiduText2Audio: unicodeText=%s", unicodeText) isOk = False mp3BinData = None errNo = 0 errMsg = "Unknown error" if not gCurBaiduRespDict: errMsg = "Need get baidu token before call text2audio" return isOk, mp3BinData, errNo, errMsg utf8Text = unicodeText.encode("utf-8") log.info("utf8Text=%s", utf8Text) encodedUtf8Text = quote(unicodeText) log.info("encodedUtf8Text=%s", encodedUtf8Text) # http://ai.baidu.com/docs#/TTS-API/top tex = encodedUtf8Text #合成的文本,使用UTF-8编码。小于512个中文字或者英文数字。(文本在百度服务器内转换为GBK后,长度必须小于1024字节) tok = gCurBaiduRespDict["access_token"] #开放平台获取到的开发者access_token(见上面的“鉴权认证机制”段落) cuid = app.config["FLASK_APP_NAME"] #用户唯一标识,用来区分用户,计算UV值。建议填写能区分用户的机器 MAC 地址或 IMEI 码,长度为60字符以内 ctp = 1 #客户端类型选择,web端填写固定值1 lan = "zh" #固定值zh。语言选择,目前只有中英文混合模式,填写固定值zh spd = 5 #语速,取值0-9,默认为5中语速 pit = 5 #音调,取值0-9,默认为5中语调 # vol = 5 #音量,取值0-9,默认为5中音量 vol = 9 per = 0 #发音人选择, 0为普通女声,1为普通男生,3为情感合成-度逍遥,4为情感合成-度丫丫,默认为普通女声 getBaiduSynthesizedAudioTemplate = "http://tsn.baidu.com/text2audio?lan=%s&ctp=%s&cuid=%s&tok=%s&vol=%s&per=%s&spd=%s&pit=%s&tex=%s" getBaiduSynthesizedAudioUrl = getBaiduSynthesizedAudioTemplate % (lan, ctp, cuid, tok, vol, per, spd, pit, tex) log.info("getBaiduSynthesizedAudioUrl=%s", getBaiduSynthesizedAudioUrl) #http://tsn.baidu.com/text2audio?lan=zh&ctp=1&cuid=RobotQA&tok=24.5xxxb5.2592000.1528609737.282335-11192483&vol=5&per=0&spd=5&pit=5&tex=as%20a%20book-collector%2C%20i%20have%20the%20story%20you%20just%20want%20to%20listen%21 resp = requests.get(getBaiduSynthesizedAudioUrl) log.info("resp=%s", resp) respContentType = resp.headers["Content-Type"] respContentTypeLowercase = respContentType.lower() #'audio/mp3' log.info("respContentTypeLowercase=%s", respContentTypeLowercase) if respContentTypeLowercase == "audio/mp3": mp3BinData = resp.content log.info("resp content is binary data of mp3, length=%d", len(mp3BinData)) isOk = True errMsg = "" elif respContentTypeLowercase == "application/json": """ { 'err_detail': 'Invalid params per or lan!', 'err_msg': 'parameter error.', 'err_no': 501, 'err_subcode': 50000, 'tts_logid': 642798357 } { 'err_detail': 'Invalid params per&pdt!', 'err_msg': 'parameter error.', 'err_no': 501, 'err_subcode': 50000, 'tts_logid': 1675521246 } { 'err_detail': 'Access token invalid or no longer valid', 'err_msg': 'authentication failed.', 'err_no': 502, 'err_subcode': 50004, 'tts_logid': 4221215043 } """ log.info("resp content is json -> occur error") isOk = False respDict = resp.json() log.info("respDict=%s", respDict) errNo = respDict["err_no"] errMsg = respDict["err_msg"] + " " + respDict["err_detail"] else: isOk = False errMsg = "Unexpected response content-type: %s" % respContentTypeLowercase return isOk, mp3BinData, errNo, errMsgdef doAudioSynthesis(unicodeText): """ do audio synthesis from unicode text if failed for token invalid/expired, will refresh token to do one more retry """ global app, log, gCurBaiduRespDict isOk = False audioBinData = None errMsg = "" # # for debug # gCurBaiduRespDict["access_token"] = "99.5xxx06.2592000.1528015602.282335-11192483" log.info("doAudioSynthesis: unicodeText=%s", unicodeText) isOk, audioBinData, errNo, errMsg = baiduText2Audio(unicodeText) log.info("isOk=%s, errNo=%d, errMsg=%s", isOk, errNo, errMsg) if isOk: errMsg = "" log.info("got synthesized audio binary data length=%d", len(audioBinData)) else: if errNo == BAIDU_ERR_TOKEN_INVALID: log.warning("Token invalid -> refresh token") refreshBaiduToken() isOk, audioBinData, errNo, errMsg = baiduText2Audio(unicodeText) log.info("after refresh token: isOk=%ss, errNo=%s, errMsg=%s", isOk, errNo, errMsg) else: log.warning("try synthesized audio occur error: errNo=%d, errMsg=%s", errNo, errMsg) audioBinData = None log.info("return isOk=%s, errMsg=%s", isOk, errMsg) if audioBinData: log.info("audio binary bytes=%d", len(audioBinData)) return isOk, audioBinData, errMsgdef testAudioSynthesis(): global app, log, gCurBaiduRespDict, gTempAudioFolder testInputUnicodeText = u"as a book-collector, i have the story you just want to listen!" isOk, audioBinData, errMsg = doAudioSynthesis(testInputUnicodeText) if isOk: audioBinDataLen = len(audioBinData) log.info("Now will save audio binary data %d bytes to file", audioBinDataLen) # 1. save mp3 binary data into tmp file newUuid = generateUUID() log.info("newUuid=%s", newUuid) tempFilename = newUuid + ".mp3" log.info("tempFilename=%s", tempFilename) if not gTempAudioFolder: createAudioTempFolder() tempAudioFullname = os.path.join(gTempAudioFolder, tempFilename) #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server/tmp/audio/2aba73d1-f8d0-4302-9dd3-d1dbfad44458.mp3' log.info("tempAudioFullname=%s", tempAudioFullname) with open(tempAudioFullname, 'wb') as tmpAudioFp: log.info("tmpAudioFp=%s", tmpAudioFp) tmpAudioFp.write(audioBinData) tmpAudioFp.close() log.info("Done to write audio data into file of %d bytes", audioBinDataLen) # TODO: # 2. use celery to delay delete tmp file else: log.warning("Fail to get synthesis audio for errMsg=%s", errMsg)#----------------------------------------# Flask API#----------------------------------------def sendFile(fileBytes, contentType, outputFilename): """Flask API use this to send out file (to browser, browser can directly download file)""" return send_file( io.BytesIO(fileBytes), # io.BytesIO(fileObj.read()), mimetype=contentType, as_attachment=True, attachment_filename=outputFilename )################################################################################# Global Init App################################################################################app = Flask(__name__)CORS(app)app.config.from_object('config.DevelopmentConfig')# app.config.from_object('config.ProductionConfig')logFormatterStr = app.config["LOG_FORMAT"]logFormatter = logging.Formatter(logFormatterStr)fileHandler = RotatingFileHandler( app.config['LOG_FILE_FILENAME'], maxBytes=app.config["LOF_FILE_MAX_BYTES"], backupCount=app.config["LOF_FILE_BACKUP_COUNT"], encoding="UTF-8")fileHandler.setLevel(logging.DEBUG)fileHandler.setFormatter(logFormatter)app.logger.addHandler(fileHandler)app.logger.setLevel(logging.DEBUG) # set root log levellog = app.loggerlog.debug("app=%s", app)log.info("app.config=%s", app.config)api = Api(app)log.info("api=%s", api)aiContext = Context()log.info("aiContext=%s", aiContext)initAudioSynthesis()# testAudioSynthesis()class RobotQaAPI(Resource): def processResponse(self, respDict): """ process response dict before return generate audio for response text part """ global log, gTempAudioFolder tmpAudioUrl = "" unicodeText = respDict["data"]["response"]["text"] log.info("unicodeText=%s") if not unicodeText: log.info("No response text to do audio synthesis") return jsonify(respDict) isOk, audioBinData, errMsg = doAudioSynthesis(unicodeText) if isOk: audioBinDataLen = len(audioBinData) log.info("audioBinDataLen=%s", audioBinDataLen) # 1. save mp3 binary data into tmp file newUuid = generateUUID() log.info("newUuid=%s", newUuid) tempFilename = newUuid + ".mp3" log.info("tempFilename=%s", tempFilename) if not gTempAudioFolder: createAudioTempFolder() tempAudioFullname = os.path.join(gTempAudioFolder, tempFilename) log.info("tempAudioFullname=%s", tempAudioFullname) # 'xxx/tmp/audio/2aba73d1-f8d0-4302-9dd3-d1dbfad44458.mp3' with open(tempAudioFullname, 'wb') as tmpAudioFp: log.info("tmpAudioFp=%s", tmpAudioFp) tmpAudioFp.write(audioBinData) tmpAudioFp.close() log.info("Saved %d bytes data into temp audio file %s", audioBinDataLen, tempAudioFullname) # TODO: # 2. use celery to delay delete tmp file # generate temp audio file url # /tmp/audio app.config["FILE_URL_HOST"], app.config["FLASK_PORT"], tempFilename) log.info("tmpAudioUrl=%s", tmpAudioUrl) respDict["data"]["response"]["audioUrl"] = tmpAudioUrl else: log.warning("Fail to get synthesis audio for errMsg=%s", errMsg) log.info("respDict=%s", respDict) return jsonify(respDict)def get(self): respDict = { "code": 200, "message": "generate response ok", "data": { "input": "", "response": { "text": "", "audioUrl": "" }, "control": "", "audio": {} } } 。。。 respDict["data"]["audio"] = { "contentType": audioFileObj.contentType, "name": audioFileObj.filename, "size": audioFileObj.length, (app.config["FILE_URL_HOST"], app.config["FLASK_PORT"], audioFileObj._id, encodedFilename) } log.info("respDict=%s", respDict) return self.processResponse(respDict)class TmpAudioAPI(Resource): def get(self, filename=None): global gTempAudioFolder log.info("TmpAudioAPI: filename=%s", filename) tmpAudioFullPath = os.path.join(gTempAudioFolder, filename) log.info("tmpAudioFullPath=%s", tmpAudioFullPath) if not os.path.isfile(tmpAudioFullPath): log.warning("Not exists file %s", tmpAudioFullPath) respDict = { "code": 404, "message": "Can not find temp audio file %s" % filename, "data": {} } return jsonify(respDict) fileSize = os.path.getsize(tmpAudioFullPath) log.info("fileSize=%s", fileSize) with open(tmpAudioFullPath, "rb") as tmpAudioFp: fileBytes = tmpAudioFp.read() log.info("read out fileBytes length=%s", len(fileBytes)) outputFilename = filename # contentType = "audio/mp3" # chrome use this contentType = "audio/mpeg" # most common and compatible return sendFile(fileBytes, contentType, outputFilename)api.add_resource(RobotQaAPI, '/qa', endpoint='qa')api.add_resource(TmpAudioAPI, '/tmp/audio/<filename>', endpoint='TmpAudio') |

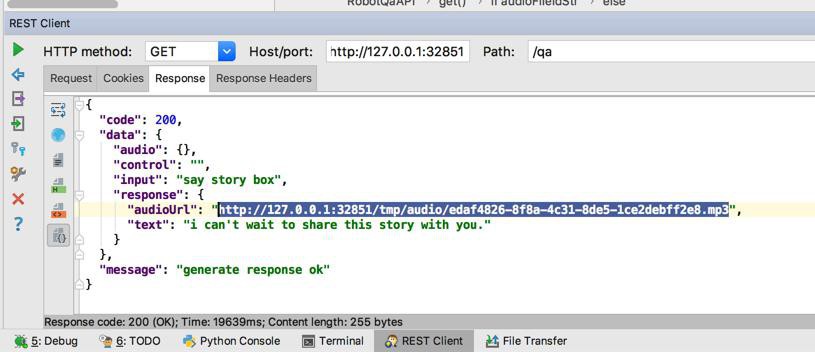

如此即可实现:

访问qa,返回内容中包含了,从百度的语音合成接口返回的二进制的mp3数据,保存到临时文件夹后,并返回生成的本地的Flask的tmp的file的url:

然后再去访问该url

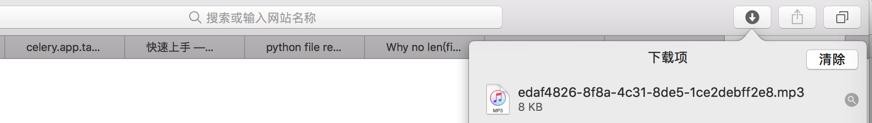

http://127.0.0.1:32851/tmp/audio/edaf4826-8f8a-4c31-8de5-1ce2debff2e8.mp3

可以下载到mp3文件:

剩下的就是:

【未解决】Flask中如何保存临时文件且可以指定有效期

了。