While working on:

[Solved] How to use the microphone from HTML+JS in a web page to capture the user's speech

Along the way, I tried calling navigator.mediaDevices.getUserMedia and then using an AudioContext to record from the microphone and get the recorded audio data.

MediaDevices.getUserMedia() – Web APIs | MDN

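After debugging for quite a while, I still could not get any voice input from the microphone: nothing was ever recorded.

At the very least, neither inputAudio.onloadedmetadata nor processor.onaudioprocess was ever called.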

However, when running the following code:

<code>// Assumes jQuery is loaded and the page contains an audio element with id "inputAudio".
$(document).ready(function () {

  function hasGetUserMedia() {
    // The code below uses the standard promise-based API, so check for that
    // instead of the legacy (vendor-prefixed) navigator.getUserMedia.
    return !!(navigator.mediaDevices && navigator.mediaDevices.getUserMedia);
  }

  function detectGetUserMedia() {
    console.log(navigator);
    console.log("Current browser: vendor=%s, platform=%s, userAgent=%s",
      navigator.vendor, navigator.platform, navigator.userAgent);
    if (hasGetUserMedia()) {
      // Good to go!
      console.log("current browser supports getUserMedia -> user can speak into the microphone");
    } else {
      console.error("getUserMedia() is not supported in current browser");
    }
  }

  detectGetUserMedia();

  var onSuccessGetUserMedia = function (mediaStream) {
    console.log("handleSuccess: mediaStream=%o", mediaStream);

    // Use the raw DOM element: a jQuery wrapper has no srcObject, onloadedmetadata
    // or play(), so setting them on $("#inputAudio") never reaches the audio element.
    var inputAudio = $("#inputAudio").get(0);
    if ("srcObject" in inputAudio) {
      inputAudio.srcObject = mediaStream;
      console.log("inputAudio.srcObject=%o", inputAudio.srcObject);
    } else {
      // Fallback for old browsers only; modern browsers reject a MediaStream here.
      inputAudio.src = window.URL.createObjectURL(mediaStream);
      console.log("inputAudio.src=%o", inputAudio.src);
    }

    inputAudio.onloadedmetadata = function (e) {
      console.log("onloadedmetadata: e=%o", e);
      console.log("now try play input audio=%o", inputAudio);
      inputAudio.play();
    };

    // Under Chrome's autoplay policy, a context created without a user gesture
    // starts "suspended", and onaudioprocess will not fire until it is resumed.
    var audioContext = new AudioContext();
    console.log("audioContext=%o", audioContext);

    var mediaInputSrc = audioContext.createMediaStreamSource(mediaStream);
    console.log("mediaInputSrc=%o", mediaInputSrc);

    // bufferSize=1024 frames, 1 input channel, 1 output channel.
    // ScriptProcessorNode is deprecated in favor of AudioWorklet but still works.
    var processor = audioContext.createScriptProcessor(1024, 1, 1);
    console.log("processor=%o", processor);

    mediaInputSrc.connect(processor);
    processor.connect(audioContext.destination);
    console.log("audioContext.destination=%o", audioContext.destination);

    processor.onaudioprocess = function (e) {
      console.log("Audio processor onaudioprocess");
      // Do something with the data, e.g. convert it to WAV.
      // e.inputBuffer.getChannelData(0) is a Float32Array of samples in [-1, 1].
      console.log(e.inputBuffer);
    };
  };

  function testAudioInput() {
    console.log("testAudioInput");
    navigator.mediaDevices
      .getUserMedia({ audio: true, video: false })
      .then(onSuccessGetUserMedia)
      .catch(function (error) {
        // e.g. DOMException: Permission denied
        console.log("Try get audio input error: %o", error);
      });
  }

  testAudioInput();
});
</code>

I noticed a warning in the log output, so I went on to fix it:

[Solved] JS warning in Chrome: The AudioContext was not allowed to start
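The warning comes from Chrome's autoplay policy: an AudioContext created before the page has received a user gesture starts in the "suspended" state, so no audio flows and onaudioprocess never fires. A minimal sketch of the usual workaround, assuming the context is resumed from a click handler; ensureAudioContextRunning, bindResumeToButton and the element id "startButton" are hypothetical names, not from the original page.

```javascript
// Resume an AudioContext left "suspended" by Chrome's autoplay policy.
// resume() only succeeds when called in response to a user gesture (e.g. a click).
async function ensureAudioContextRunning(audioContext) {
  if (audioContext.state === "suspended") {
    await audioContext.resume();
  }
  return audioContext.state;
}

// Hypothetical wiring: "startButton" is an assumed element id, not part of the original page.
function bindResumeToButton(doc, audioContext) {
  doc.getElementById("startButton").addEventListener("click", function () {
    ensureAudioContextRunning(audioContext).then(function (state) {
      console.log("AudioContext state after click: %s", state);
    });
  });
}
```

In the page this would be called as bindResumeToButton(document, audioContext). An alternative with the same effect is to create the AudioContext itself inside the click handler.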

After that, I was able to get the audio data.
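To keep that data rather than just logging it, each onaudioprocess event's samples can be appended to a list and concatenated at the end. A minimal sketch, assuming mono input (only channel 0 is kept); PcmChunkCollector is a hypothetical helper, not part of any library.

```javascript
// Collects mono samples from ScriptProcessorNode "audioprocess" events.
// Assumption: only channel 0 is recorded.
class PcmChunkCollector {
  constructor() {
    this.chunks = [];
    this.length = 0;
  }

  // Intended use: processor.onaudioprocess = (e) => collector.push(e.inputBuffer);
  push(inputBuffer) {
    // Defensive copy: store our own samples rather than a view into the event's AudioBuffer.
    const samples = new Float32Array(inputBuffer.getChannelData(0));
    this.chunks.push(samples);
    this.length += samples.length;
  }

  // Concatenate all collected chunks into one Float32Array.
  merge() {
    const result = new Float32Array(this.length);
    let offset = 0;
    for (const chunk of this.chunks) {
      result.set(chunk, offset);
      offset += chunk.length;
    }
    return result;
  }
}
```

Remember to also note audioContext.sampleRate, since the samples alone do not say how fast they were captured.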

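Next, I need to figure out how to save the audio data from the captured buffers as an audio file in PCM, WAV, or a similar format, but I have not worked that out yet.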
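One common approach is to convert the Float32 samples in [-1, 1] to signed 16-bit integers and prepend the standard 44-byte RIFF/WAVE header. A minimal sketch, assuming a single Float32Array of mono samples (for example, everything gathered from onaudioprocess concatenated together); encodeWavMono16 is a hypothetical helper name.

```javascript
// Encode mono Float32 samples (range [-1, 1]) as a 16-bit PCM WAV file.
// Returns an ArrayBuffer: a 44-byte RIFF/WAVE header followed by the sample data.
function encodeWavMono16(samples, sampleRate) {
  const bytesPerSample = 2;
  const dataSize = samples.length * bytesPerSample;
  const buffer = new ArrayBuffer(44 + dataSize);
  const view = new DataView(buffer);

  const writeAscii = (offset, text) => {
    for (let i = 0; i < text.length; i++) {
      view.setUint8(offset + i, text.charCodeAt(i));
    }
  };

  // RIFF chunk descriptor
  writeAscii(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true); // file size minus the first 8 bytes
  writeAscii(8, "WAVE");

  // "fmt " sub-chunk: linear PCM, 1 channel, 16 bits per sample
  writeAscii(12, "fmt ");
  view.setUint32(16, 16, true); // sub-chunk size for PCM
  view.setUint16(20, 1, true); // audio format 1 = linear PCM
  view.setUint16(22, 1, true); // number of channels
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * bytesPerSample, true); // byte rate
  view.setUint16(32, bytesPerSample, true); // block align
  view.setUint16(34, 16, true); // bits per sample

  // "data" sub-chunk
  writeAscii(36, "data");
  view.setUint32(40, dataSize, true);

  // Clamp each sample to [-1, 1] and scale it to a little-endian signed 16-bit integer.
  let offset = 44;
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    view.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7fff, true);
    offset += bytesPerSample;
  }
  return buffer;
}
```

In the browser, new Blob([wav], { type: "audio/wav" }) plus URL.createObjectURL gives a playable or downloadable file. For raw PCM, wav.slice(44) is just the little-endian 16-bit samples without the header.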

Postscript:

Later I came across:

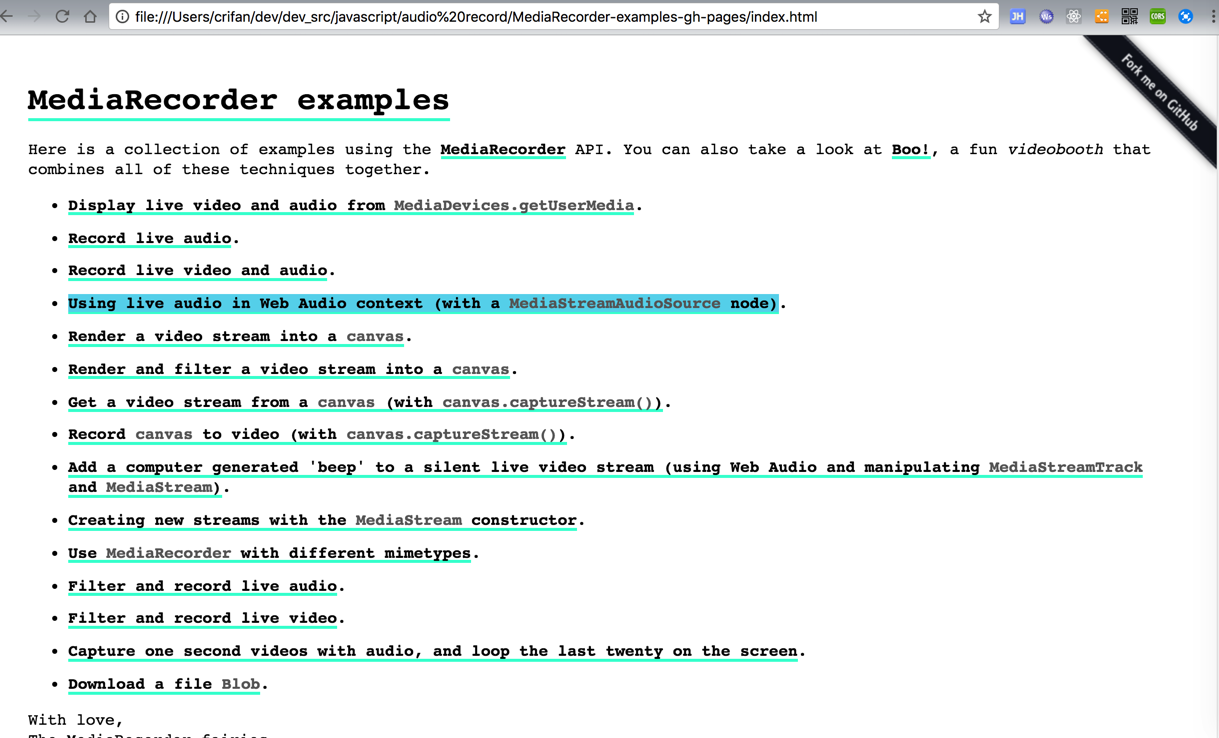

mozdevs/MediaRecorder-examples: MediaRecorder examples

I tried its demos and found that there is also one for this:

MediaRecorder examples – Get stream from mediaDevices.getUserMedia into a Web Audio context

MediaRecorder-examples-gh-pages/using-live-audio-in-web-audio-context.html

->

It turns out the code is quite simple; it is just:

<code>// This example uses MediaDevices.getUserMedia to get a live audio stream,
// and then bring it into a Web Audio context using a MediaStreamSourceNode
//
// The relevant functions in use are:
//
// navigator.mediaDevices.getUserMedia -> to get live audio stream from webcam
// AudioContext.createMediaStreamSource -> to create a node that takes a MediaStream
// as input and works as source of sound inside the audio graph

window.onload = function () {
  // request audio stream from the user's webcam
  navigator.mediaDevices.getUserMedia({
    audio: true
  })
  .then(function (stream) {
    var audioContext = new AudioContext();
    var mediaStreamNode = audioContext.createMediaStreamSource(stream);
    mediaStreamNode.connect(audioContext.destination);
    // This is a temporary workaround for https://bugzilla.mozilla.org/show_bug.cgi?id=934512
    // where the stream is collected too soon by the Garbage Collector
    window.doNotCollectThis = stream;
  });
};
</code>

That alone is enough to play the microphone input through the headphones or speakers in real time.

When I have time later, I will look into how to record with AudioContext.
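For comparison, the MediaRecorder API that the MediaRecorder-examples repository is built around can record the microphone stream directly, without touching AudioContext. A minimal sketch, assuming a browser with MediaRecorder support; recordMicrophone is a hypothetical helper, and the output is in whatever format the browser picks by default (typically Opus in a WebM or Ogg container), not WAV.

```javascript
// Record `durationMs` milliseconds of microphone audio with MediaRecorder
// and resolve with a Blob in the browser's default recording format.
function recordMicrophone(durationMs, mediaDevices = navigator.mediaDevices) {
  return mediaDevices.getUserMedia({ audio: true }).then((stream) => {
    return new Promise((resolve, reject) => {
      const recorder = new MediaRecorder(stream);
      const chunks = [];
      recorder.ondataavailable = (e) => {
        if (e.data && e.data.size > 0) chunks.push(e.data);
      };
      recorder.onerror = (e) => reject(e.error || e);
      recorder.onstop = () => {
        // Release the microphone once recording has finished.
        stream.getTracks().forEach((track) => track.stop());
        resolve(new Blob(chunks, { type: recorder.mimeType }));
      };
      recorder.start();
      setTimeout(() => recorder.stop(), durationMs);
    });
  });
}
```

For example, recordMicrophone(3000).then((blob) => ...) yields a three-second clip that can be played back by assigning URL.createObjectURL(blob) to an audio element's src. If WAV or raw PCM is required, the AudioContext route is still needed.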