【Background】

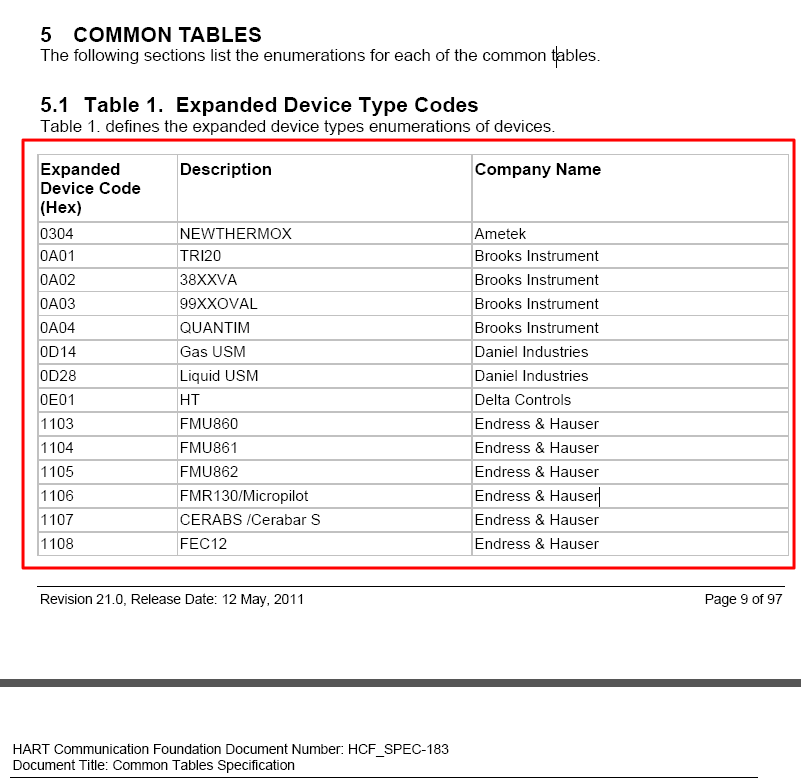

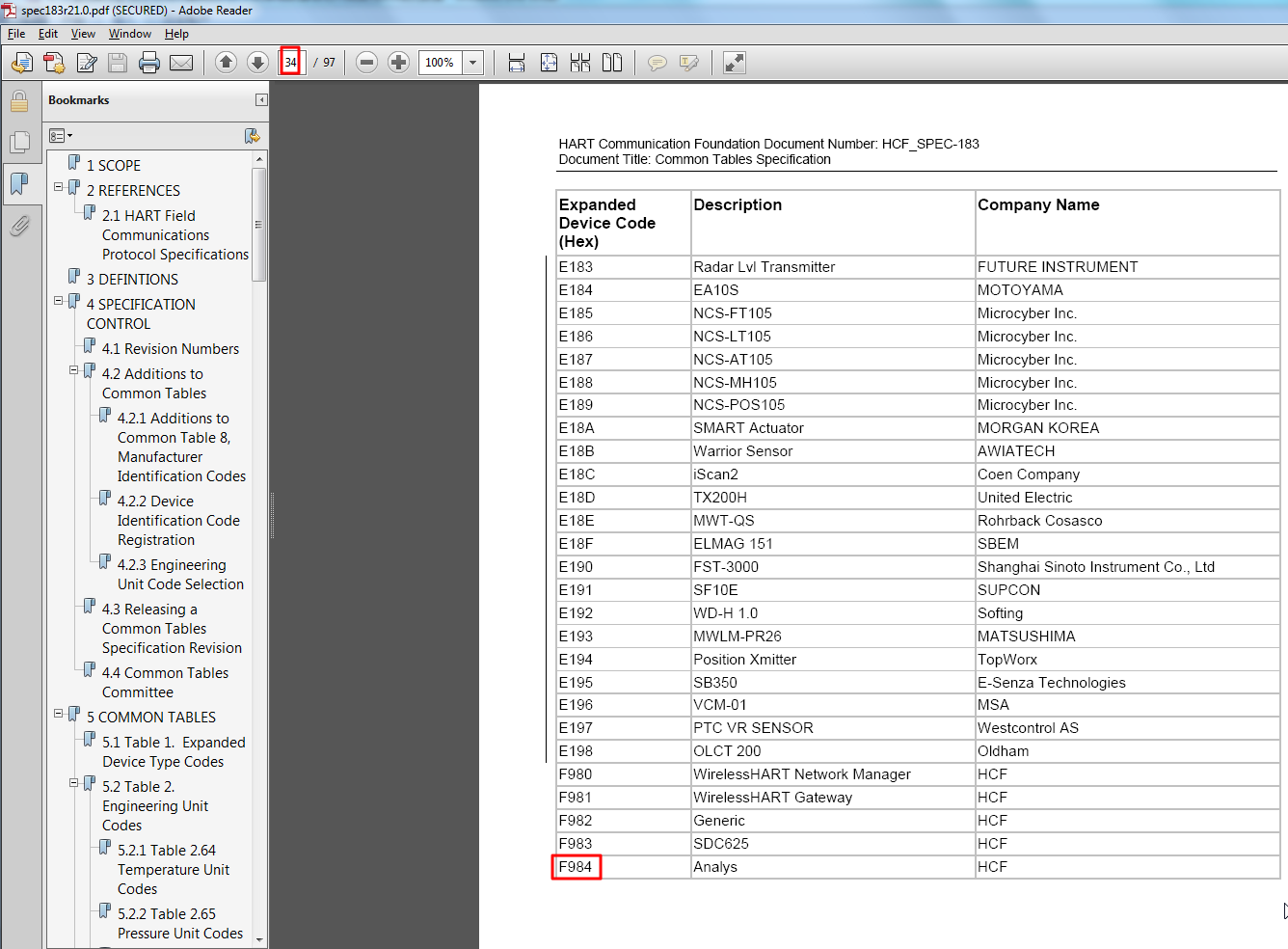

The goal is to take the table data from a PDF document that does not allow copying:

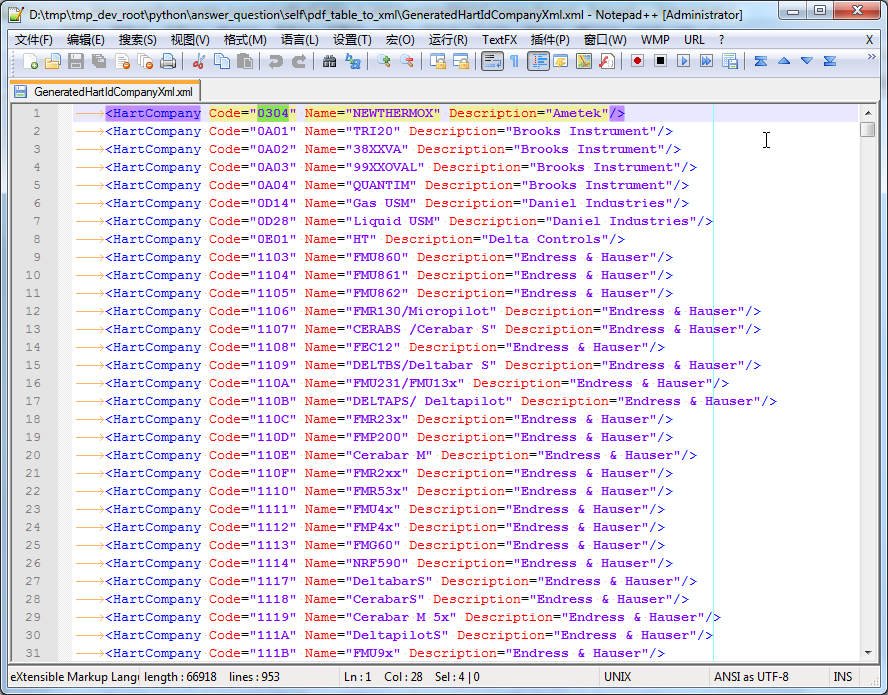

export it, and save it in an XML format like the following:

```xml
<HartCompany Code="3004" Name="Flowserve" Description="Logix 3200-IQ"/>
<HartCompany Code="3601" Name="Yamatake" Description="MagneW"/>
<HartCompany Code="3602" Name="Yamatake" Description="ST3000"/>
```
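Since the target format is one self-closing `HartCompany` element per table row, here is a minimal sketch of building such a line with attribute escaping. It is written for Python 3 and assumes the three values arrive as plain, already-decoded text; the helper name `hart_company_line` is hypothetical and is not part of the script shown later in this post.

```python
from xml.sax.saxutils import quoteattr


def hart_company_line(code, name, description):
    """Build one <HartCompany .../> line (hypothetical helper).

    quoteattr() adds the surrounding quotes and escapes characters such
    as & and < so that the attribute values stay well-formed XML.
    """
    return "<HartCompany Code=%s Name=%s Description=%s/>" % (
        quoteattr(code),
        quoteattr(name),
        quoteattr(description),
    )


if __name__ == "__main__":
    print(hart_company_line("3004", "Flowserve", "Logix 3200-IQ"))
    print(hart_company_line("1109", "Endress & Hauser", "DELTBS/Deltabar S"))
```

If the values still contain HTML entities such as `&amp;`, they should be decoded first, otherwise the ampersand would be escaped a second time.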

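【Process】

1. The PDF does not allow copying, so the data cannot simply be copied and pasted out.

2. I do not currently have at hand the very powerful PDF-to-Word converter that I used before.

I cannot remember what it was called, but I have used it and it was impressive.

3. So the only approach I can think of right now is to write a Python script that processes the PDF, extracts the data, and saves it as XML text.

4. I consulted a number of references: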

working on tables in pdf using python – Stack Overflow

Python module for converting PDF to text – Stack Overflow

slate 0.3 : Python Package Index

pdftables – a Python library for getting tables out of PDF files | ScraperWiki

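Then I tried, in order:

【Record】Trying PDFMiner to convert a non-copyable PDF to text or HTML

5. Then:

【Record】Trying pyPdf to convert a non-copyable PDF to text or HTML

6. Then:

【Record】Trying xpdf to convert a non-copyable PDF to text or HTML

7. And finally, the one that worked:

【Record】Trying pdftohtml to convert a non-copyable PDF file to HTML while keeping the table format

8. With that, I could actually go ahead and write a Python script that processes the HTML, extracts the content, and exports it as XML.

The script uses BeautifulSoup, so some familiarity with it helps.

This is the final result: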

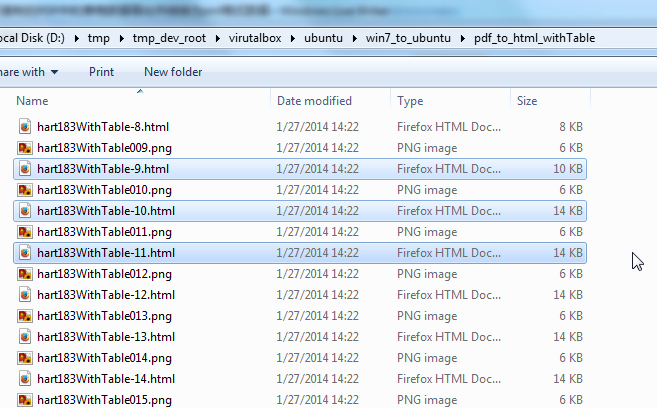

It takes the following set of HTML files, pages 9 through 34:

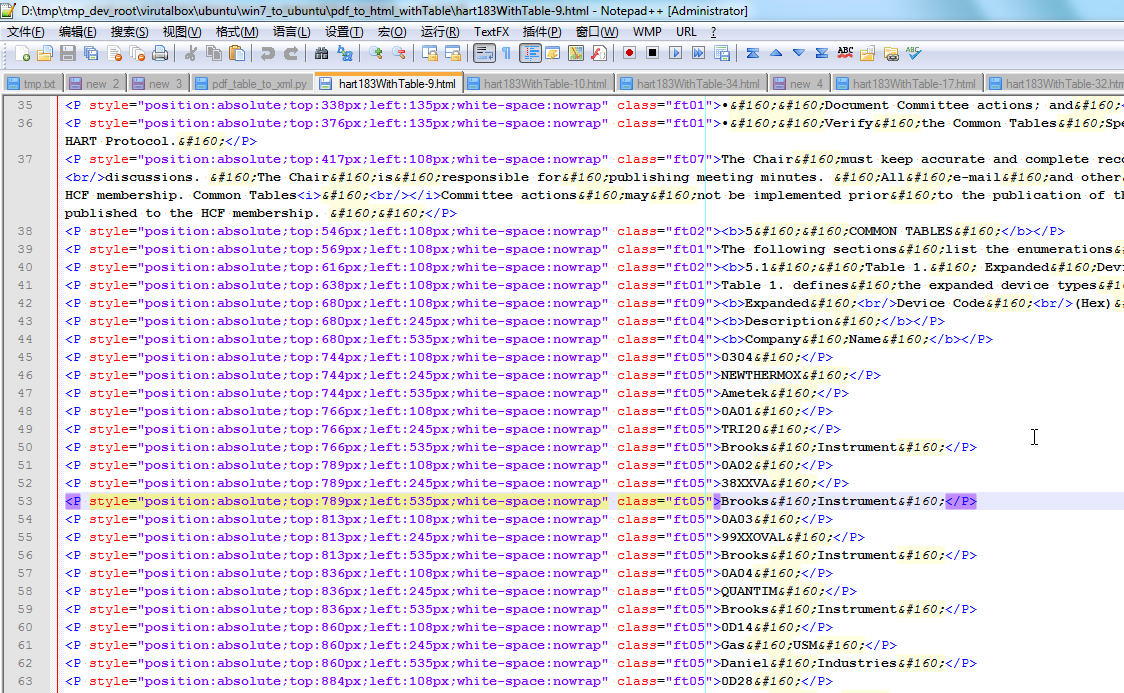

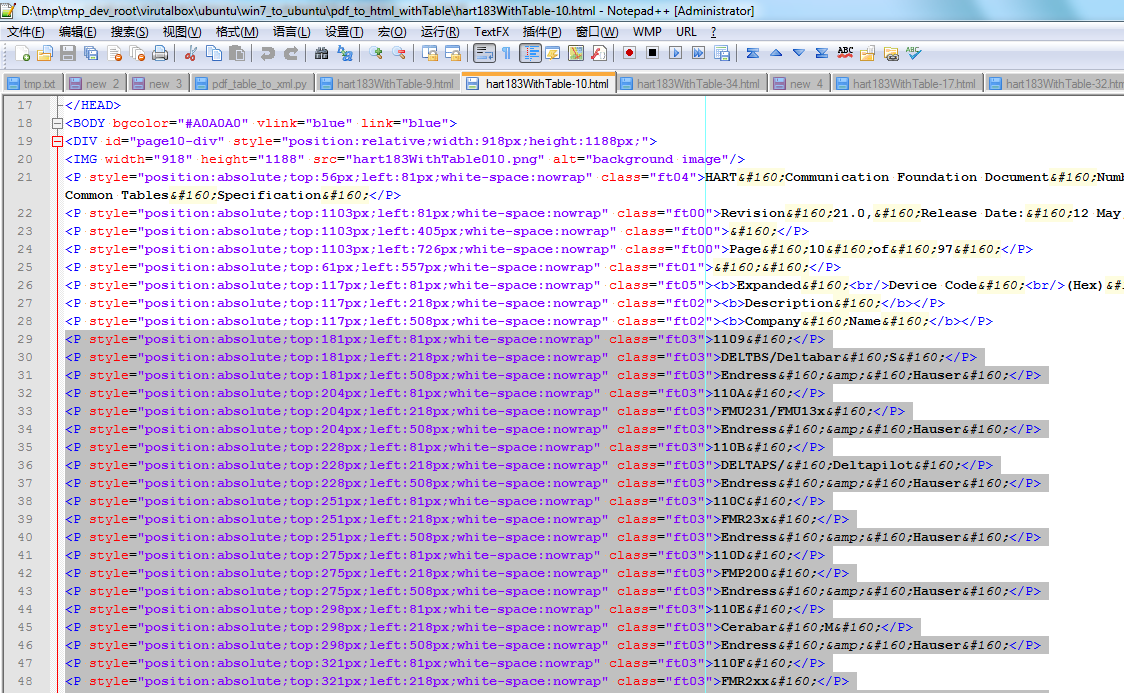

The corresponding HTML code is as follows.

Page 9 uses class ft05:

From page 10 onward the class is ft03:

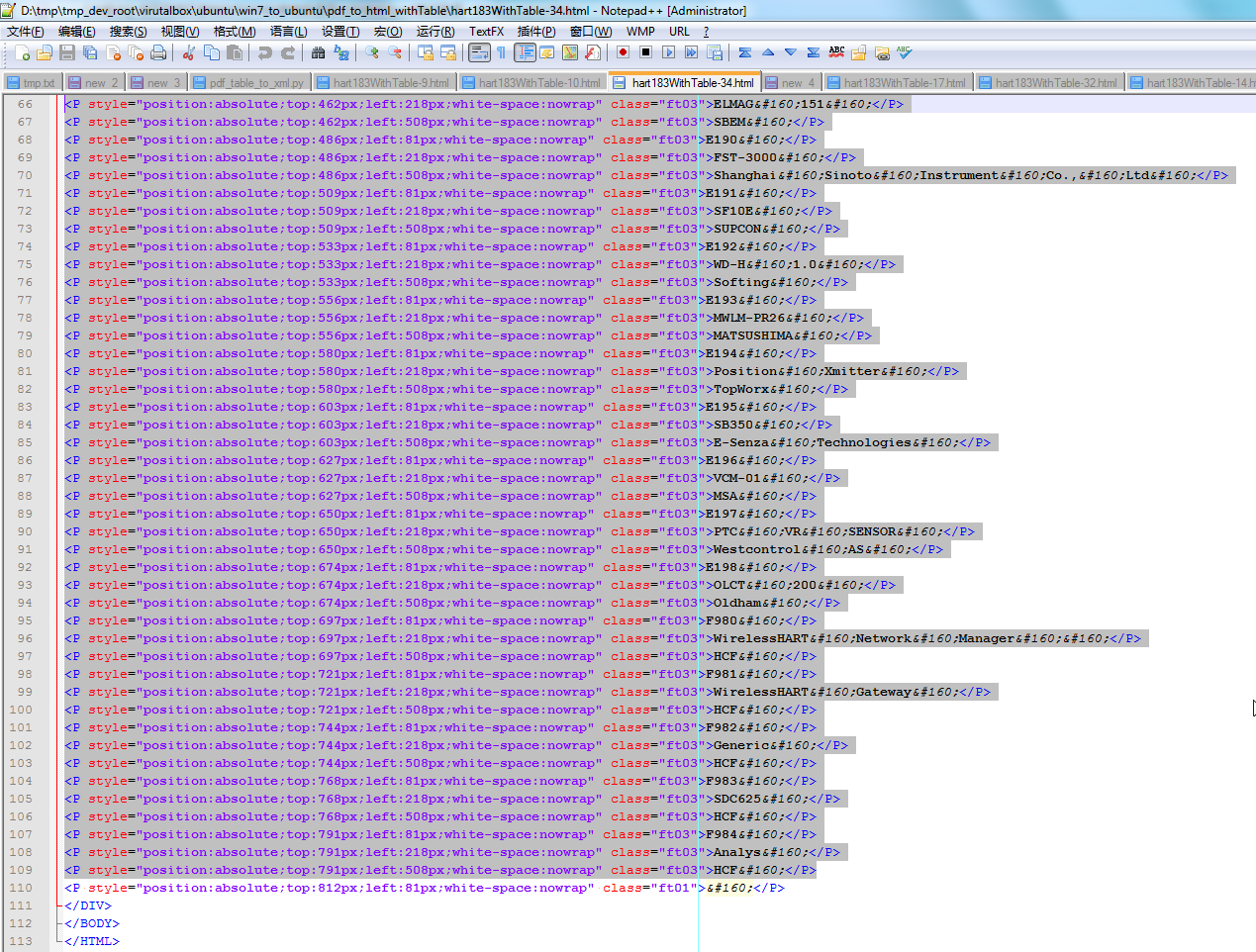

This continues through the last page, 34, which also uses ft03:

In the end I used the following code:

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
"""
Function:
[Unresolved] Export the table data from a non-copyable PDF and convert it to XML

Author:     Crifan Li
Version:    2014-01-27
Contact:    https://www.crifan.com/about/me
"""
# Written for Python 2 and BeautifulSoup 3 (print statements, unicode()).

import os
import sys
import codecs
from BeautifulSoup import BeautifulSoup


def postProcessStr(origStr):
    """Clean up one cell: turn the &nbsp; entity into a space, then strip."""
    processedStr = origStr.replace("&nbsp;", " ")
    # &amp; is deliberately left as-is, because it is also valid inside XML
    #processedStr = processedStr.replace("&amp;", "&")
    processedStr = processedStr.strip()
    return processedStr


def pdf_table_to_xml():
    """Extract data from HTML which is generated from PDF using pdftohtml, then saved to xml"""
    srcHtmlFolder = "D:\\tmp\\tmp_dev_root\\virutalbox\\ubuntu\\win7_to_ubuntu\\pdf_to_html_withTable"
    baseFilename = "hart183WithTable-"
    fileSuffix = ".html"

    # create output file
    outputXmlFilename = "GeneratedHartIdCompanyXml.xml"
    # 'a+': read,write,append
    # 'w' : clear before, then write
    outputXmlFp = codecs.open(outputXmlFilename, 'w', "UTF-8")

    # generate html file list to process:
    # hart183WithTable-9.html to hart183WithTable-34.html
    for pageNum in range(9, 35):
        fullFilename = baseFilename + str(pageNum) + fileSuffix
        fullFile = os.path.join(srcHtmlFolder, fullFilename)
        #print "fullFile=",fullFile

        srcHtmlFp = open(fullFile)
        srcHtml = srcHtmlFp.read()
        srcHtmlFp.close()

        foundAllFt = []
        paraLineNum = 0

        soup = BeautifulSoup(srcHtml, fromEncoding="UTF-8")

        # hart183WithTable-9.html
        # <P style="position:absolute;top:744px;left:108px;white-space:nowrap" class="ft05">0304 </P>
        # <P style="position:absolute;top:744px;left:245px;white-space:nowrap" class="ft05">NEWTHERMOX </P>
        # <P style="position:absolute;top:744px;left:535px;white-space:nowrap" class="ft05">Ametek </P>
        # <P style="position:absolute;top:766px;left:108px;white-space:nowrap" class="ft05">0A01 </P>
        # <P style="position:absolute;top:766px;left:245px;white-space:nowrap" class="ft05">TRI20 </P>
        # <P style="position:absolute;top:766px;left:535px;white-space:nowrap" class="ft05">Brooks Instrument </P>
        foundAllFt05 = soup.findAll(name="p", attrs={"class": "ft05"})
        ft05Len = len(foundAllFt05)
        print "ft05Len=", ft05Len

        # hart183WithTable-10.html
        # <P style="position:absolute;top:181px;left:81px;white-space:nowrap" class="ft03">1109 </P>
        # <P style="position:absolute;top:181px;left:218px;white-space:nowrap" class="ft03">DELTBS/Deltabar S </P>
        # <P style="position:absolute;top:181px;left:508px;white-space:nowrap" class="ft03">Endress & Hauser </P>
        # <P style="position:absolute;top:204px;left:81px;white-space:nowrap" class="ft03">110A </P>
        # <P style="position:absolute;top:204px;left:218px;white-space:nowrap" class="ft03">FMU231/FMU13x </P>
        # <P style="position:absolute;top:204px;left:508px;white-space:nowrap" class="ft03">Endress & Hauser </P>

        # hart183WithTable-34.html
        # <P style="position:absolute;top:181px;left:81px;white-space:nowrap" class="ft03">E183 </P>
        # <P style="position:absolute;top:181px;left:218px;white-space:nowrap" class="ft03">Radar Lvl Transmitter </P>
        # <P style="position:absolute;top:181px;left:508px;white-space:nowrap" class="ft03">FUTURE INSTRUMENT </P>
        # <P style="position:absolute;top:204px;left:81px;white-space:nowrap" class="ft03">E184 </P>
        # <P style="position:absolute;top:204px;left:218px;white-space:nowrap" class="ft03">EA10S </P>
        # <P style="position:absolute;top:204px;left:508px;white-space:nowrap" class="ft03">MOTOYAMA </P>
        foundAllFt03 = soup.findAll(name="p", attrs={"class": "ft03"})
        ft03Len = len(foundAllFt03)
        print "ft03Len=", ft03Len

        # A page holds real table data only if the cell count is a multiple of 3
        # (code, description, company name)
        if ft05Len > 1 and ft05Len % 3 == 0:
            print "+++ ft05 is real table data for ", fullFile
            paraLineNum = ft05Len
            foundAllFt = foundAllFt05
        elif ft03Len > 1 and ft03Len % 3 == 0:
            print "+++ ft03 real table data for ", fullFile
            paraLineNum = ft03Len
            foundAllFt = foundAllFt03
        else:
            print "--- Not found valid table data for ", fullFile
            sys.exit(-2)

        # real start of data extraction
        totalRowNum = paraLineNum // 3
        print "totalRowNum=", totalRowNum
        for rowIdx in range(totalRowNum):
            hartCodeSoup = foundAllFt[rowIdx*3 + 0]
            hartCodeUni = postProcessStr(unicode(hartCodeSoup.string))

            hartDescSoup = foundAllFt[rowIdx*3 + 1]
            hartDescUni = postProcessStr(unicode(hartDescSoup.string))

            hartNameSoup = foundAllFt[rowIdx*3 + 2]
            hartNameUni = postProcessStr(unicode(hartNameSoup.string))

            # <HartCompany Code="3701" Name="Yokogawa" Description="YEWFLO"/>
            xmlLineStr = ' <HartCompany Code="' + hartCodeUni + '" Name="' + hartNameUni + '" Description="' + hartDescUni + '"/>' + '\n'
            #print "xmlLineStr=",xmlLineStr

            # save data
            outputXmlFp.write(xmlLineStr)

    # save and close output file
    outputXmlFp.flush()
    outputXmlFp.close()


if __name__ == "__main__":
    pdf_table_to_xml()
```

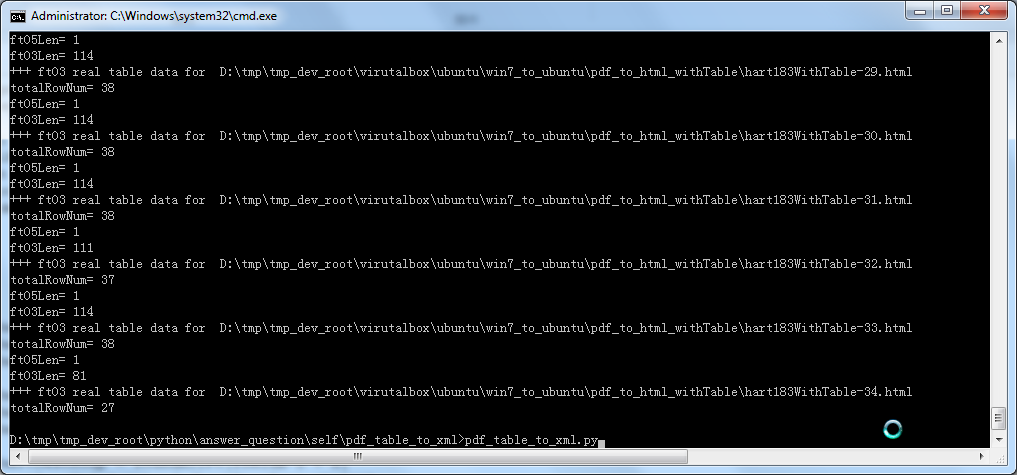

Running it:

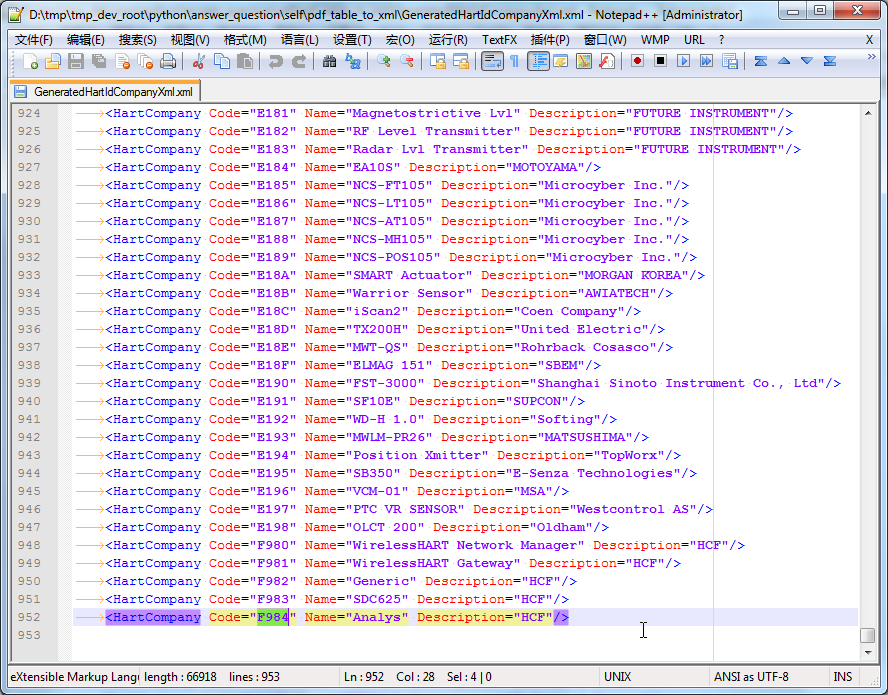

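This produced the corresponding XML file content:

【Summary】

In the end, the approach was:

use pdftohtml to export the non-copyable PDF to HTML;

then write a Python script that processes the resulting set of HTML files, extracts the data from them, and exports it as XML.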
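The first step can also be scripted. The following is only a sketch in Python 3, assuming the Poppler build of `pdftohtml` is on the PATH. The `-nodrm` option is the one mentioned in this post; the `-c` option (complex output, one absolutely positioned HTML file per page) and the input name `hart183.pdf` are my assumptions, since the exact command line is not recorded here. The helper names are hypothetical.

```python
import subprocess


def build_pdftohtml_cmd(pdf_path, out_prefix):
    """Return the argument list for pdftohtml (flags partly assumed, see above)."""
    return ["pdftohtml", "-c", "-nodrm", pdf_path, out_prefix]


def page_filenames(out_prefix, first_page, last_page):
    """Per-page file names in the style used in this post, e.g. prefix-9.html."""
    return ["%s-%d.html" % (out_prefix, n) for n in range(first_page, last_page + 1)]


def convert(pdf_path, out_prefix):
    """Run the conversion; raises CalledProcessError if pdftohtml fails."""
    subprocess.run(build_pdftohtml_cmd(pdf_path, out_prefix), check=True)


if __name__ == "__main__":
    print(build_pdftohtml_cmd("hart183.pdf", "hart183WithTable"))
    print(page_filenames("hart183WithTable", 9, 34)[:2])
```

Calling `convert("hart183.pdf", "hart183WithTable")` would then run the tool, after which the files listed by `page_filenames` can be fed to the extraction script.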

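Notes:

1. When using pdftohtml, add the -nodrm option; only then is the table formatting preserved.

2. A few table cells in the generated HTML are special cases and need manual handling: changing the occasional ft06 to ft03 is enough.

3. The Python script uses BeautifulSoup to process the HTML. If you are comfortable with regular expressions, you can skip BeautifulSoup and extract the required data directly with a regex.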
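As an illustration of the regex alternative in note 3, here is a minimal Python 3 sketch. It assumes the pdftohtml output has the shape shown in the script comments (one `<P ... class="ft03">` element per cell, three cells per row, with a trailing `&nbsp;` entity); the function names are hypothetical, and this is not the code I actually ran.

```python
import re


def extract_cells(html, css_class):
    """Return the text of every <P> element carrying the given class."""
    pattern = re.compile(
        r'<p\b[^>]*\bclass="%s"[^>]*>(.*?)</p>' % re.escape(css_class),
        re.IGNORECASE | re.DOTALL,
    )
    # Replace the &nbsp; entity with a space, then strip surrounding whitespace.
    # Other entities such as &amp; are kept, since they are valid in XML too.
    return [cell.replace("&nbsp;", " ").strip() for cell in pattern.findall(html)]


def cells_to_xml_lines(cells):
    """Group cells in threes (code, description, company) into XML lines."""
    if not cells or len(cells) % 3 != 0:
        raise ValueError("expected a non-empty multiple of 3 cells, got %d" % len(cells))
    lines = []
    for i in range(0, len(cells), 3):
        code, desc, name = cells[i:i + 3]
        lines.append('<HartCompany Code="%s" Name="%s" Description="%s"/>' % (code, name, desc))
    return lines


if __name__ == "__main__":
    sample = (
        '<P style="position:absolute;top:181px;left:81px;white-space:nowrap" class="ft03">1109&nbsp;</P>\n'
        '<P style="position:absolute;top:181px;left:218px;white-space:nowrap" class="ft03">DELTBS/Deltabar S&nbsp;</P>\n'
        '<P style="position:absolute;top:181px;left:508px;white-space:nowrap" class="ft03">Endress &amp; Hauser&nbsp;</P>\n'
    )
    for line in cells_to_xml_lines(extract_cells(sample, "ft03")):
        print(line)
```

A regex like this is tied closely to the exact markup pdftohtml produces, which is the trade-off compared with a real HTML parser.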