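【Background】

In Python, I had always used BeautifulSoup to parse HTML:

【Tutorial】BeautifulSoup, a third-party Python library for parsing HTML

Later I heard that BeautifulSoup is slow while lxml parses HTML quickly, so I decided to give lxml a try.

【Process】

1. I went to the lxml home page and read the introduction: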

lxml – XML and HTML with Python

lxml is the most feature-rich and easy-to-use library for processing XML and HTML in the Python language.

Introduction

The lxml XML toolkit is a Pythonic binding for the C libraries libxml2 and libxslt. It is unique in that it combines the speed and XML feature completeness of these libraries with the simplicity of a native Python API, mostly compatible but superior to the well-known ElementTree API. The latest release works with all CPython versions from 2.4 to 3.3. See the introduction for more information about background and goals of the lxml project. Some common questions are answered in the FAQ.

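It also supports Python 3.3, which is nice.

There were many versions listed, so I picked the latest one:

This machine runs Win7 x64, so I downloaded the matching installer: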

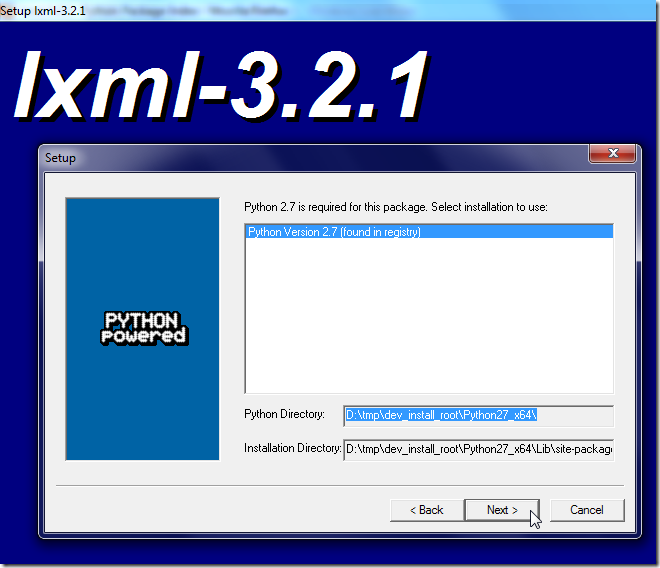

lxml-3.2.1.win-amd64-py2.7.exe

Then I went on to install lxml.
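A quick way to check that the installation worked is to import the package and print its version. This is only a minimal sketch in Python 3 syntax (the post itself targets Python 2.7); `LXML_VERSION` and `LIBXML_VERSION` are version tuples exposed by `lxml.etree`.

```python
from lxml import etree

# Both attributes are tuples of integers, e.g. (3, 2, 1, 0) for lxml 3.2.1.
lxml_version = ".".join(str(part) for part in etree.LXML_VERSION)
libxml_version = ".".join(str(part) for part in etree.LIBXML_VERSION)

print("lxml:", lxml_version)
print("libxml2:", libxml_version)
```

If the import fails, the installer most likely did not match the Python version or architecture in use.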

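3. Next, I needed to figure out how to use lxml to parse HTML.

So, first I needed an ordinary HTML page to work with, such as the one used in the earlier tutorial.

4. I then consulted a number of tutorials.

In the end I wrote the following code, which runs correctly: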

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-
"""
-------------------------------------------------------------------------------
[Function]
【Record】Trying lxml to parse HTML in Python
[Date]
2013-05-27
[Author]
Crifan Li
[Contact]
-------------------------------------------------------------------------------
"""

#---------------------------------import---------------------------------------
import urllib2
from lxml import etree

#------------------------------------------------------------------------------
# NOTE: the listing as posted never defines userMainUrl. Set it to the URL of
# the page to parse (one containing <h1 class="h1user">...</h1>) before running.
userMainUrl = ""  # placeholder; the original URL is not shown in this post

def main():
    """
    Demo Python use lxml to extract/parse html
    """
    req = urllib2.Request(userMainUrl)
    resp = urllib2.urlopen(req)
    respHtml = resp.read()
    #print "respHtml=", respHtml  # you should see the output html

    # for more methods, please refer to:
    #【Tutorial】Crawling a website and extracting the needed info from its pages: Python version
    print "Method 3: Use lxml to extract info from html"
    #<h1 class="h1user">crifan</h1>
    #dom = etree.fromstring(respHtml)
    htmlElement = etree.HTML(respHtml)
    print "htmlElement=", htmlElement  #<Element html at 0x2f2a090>
    h1userElement = htmlElement.find(".//h1[@class='h1user']")
    print "h1userElement=", h1userElement  #<Element h1 at 0x2f2a1b0>
    print "type(h1userElement)=", type(h1userElement)  #<type 'lxml.etree._Element'>
    print "dir(h1userElement)=", dir(h1userElement)
    # dir(h1userElement)= ['__class__', '__contains__', '__copy__', '__deepcopy__', '__delattr__',
    # '__delitem__', '__doc__', '__format__', '__getattribute__', '__getitem__', '__hash__',
    # '__init__', '__iter__', '__len__', '__new__', '__nonzero__', '__reduce__', '__reduce_ex__',
    # '__repr__', '__reversed__', '__setattr__', '__setitem__', '__sizeof__', '__str__',
    # '__subclasshook__', '_init', 'addnext', 'addprevious', 'append', 'attrib', 'base', 'clear',
    # 'extend', 'find', 'findall', 'findtext', 'get', 'getchildren', 'getiterator', 'getnext',
    # 'getparent', 'getprevious', 'getroottree', 'index', 'insert', 'items', 'iter',
    # 'iterancestors', 'iterchildren', 'iterdescendants', 'iterfind', 'itersiblings', 'itertext',
    # 'keys', 'makeelement', 'nsmap', 'prefix', 'remove', 'replace', 'set', 'sourceline', 'tag',
    # 'tail', 'text', 'values', 'xpath']

    print "h1userElement.text=", h1userElement.text  #crifan

    attributes = h1userElement.attrib
    print "attributes=", attributes  #{'class': 'h1user'}
    print "type(attributes)=", type(attributes)  #<type 'lxml.etree._Attrib'>
    classKeyValue = attributes["class"]
    print "classKeyValue=", classKeyValue  #h1user
    print "type(classKeyValue)=", type(classKeyValue)  #<type 'str'>

    tag = h1userElement.tag
    print "tag=", tag  #h1

    # tostring() serializes the element itself, its own tag included
    innerHtml = etree.tostring(h1userElement)
    print "innerHtml=", innerHtml  #innerHtml= <h1 class="h1user">crifan</h1>

###############################################################################
if __name__ == "__main__":
    main()
```

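Some notes and lessons learned:

(1) About lxml.etree._Element

Explanations of all of its APIs can be found in the lxml API documentation for this class.

Among them are the ones we need: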

find(self, path, namespaces=None): Finds the first matching subelement, by tag name or path.

Properties: attrib, text

and more.
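As a rough illustration of these properties, the sketch below (Python 3 syntax, with a made-up HTML fragment) reads an element's attributes and text. `attrib` behaves like a dictionary, `get()` reads a single attribute with an optional default, and `tail` holds the text that follows the element's closing tag.

```python
from lxml import etree

# Made-up fragment; etree.HTML() wraps it in <html><body> automatically.
root = etree.HTML('<div><a id="home" href="/index.html">Home</a> and more</div>')
link = root.find(".//a")

attrs = dict(link.attrib)            # copy the dict-like _Attrib into a plain dict
href = link.get("href")              # read one attribute
missing = link.get("title", "n/a")   # default is returned when the attribute is absent
text = link.text                     # text inside the element
tail = link.tail                     # text right after the closing </a>

print(attrs, href, missing, repr(text), repr(tail))
```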

(2) For an explanation of find(), see:

Element.find(): Find a matching sub-element
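To see how find() relates to its neighbours findall(), findtext() and xpath() (all of which appear in the dir() output above), here is a small sketch in Python 3 syntax using a made-up list. find() returns only the first match, or None when nothing matches, while xpath() accepts full XPath expressions.

```python
from lxml import etree

# Made-up markup for illustration.
root = etree.HTML(
    "<ul><li class='x'>one</li><li>two</li><li class='x'>three</li></ul>"
)

first = root.find(".//li[@class='x']")              # first matching element
all_x = root.findall(".//li[@class='x']")           # list of every match
nothing = root.find(".//table")                     # no match
first_text = root.findtext(".//li")                 # text of the first match
via_xpath = root.xpath("//li[not(@class)]/text()")  # full XPath, returns a list

print(first.text, [e.text for e in all_x], nothing, first_text, via_xpath)
```

The path syntax accepted by find() and findall() is the limited ElementPath subset, so expressions such as `not(@class)` need xpath() instead.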

(3) I also tried following:

11. The art of Web-scraping: Parsing HTML with Beautiful Soup

I experimented with it and found that it needs the additional BeautifulSoup library in order to run.
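lxml ships a module, `lxml.html.soupparser`, that hands parsing over to BeautifulSoup and raises ImportError when BeautifulSoup is not installed. Assuming that is the approach the tutorial relies on, one possible way to cope is to fall back to lxml's own HTML parser. This is a sketch in Python 3 syntax; `parse_html` is a hypothetical helper name.

```python
import lxml.html

try:
    # Importing this module fails if BeautifulSoup is not installed.
    from lxml.html import soupparser
except ImportError:
    soupparser = None


def parse_html(text):
    """Parse with BeautifulSoup when available, else with lxml's own parser."""
    if soupparser is not None:
        return soupparser.fromstring(text)
    return lxml.html.fromstring(text)


root = parse_html('<html><body><h1 class="h1user">crifan</h1></body></html>')
print(root.find(".//h1").text)
```

Either branch returns an lxml element, so the find() calls shown earlier work the same way on the result.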

【Summary】

Parsing HTML with lxml in Python still requires learning a fair amount of lxml's API, including the ElementTree API it builds on.
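Because lxml's API is described as mostly compatible with ElementTree, the find() pattern carries over to the standard library. The sketch below deliberately swaps in `xml.etree.ElementTree` rather than lxml, and uses a made-up, well-formed snippet, because the standard-library parser is a strict XML parser and rejects the sloppy markup that `etree.HTML()` tolerates.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup; real-world HTML often is not valid XML.
XML_TEXT = '<html><body><h1 class="h1user">crifan</h1></body></html>'

root = ET.fromstring(XML_TEXT)
h1 = root.find(".//h1[@class='h1user']")  # same path syntax as in lxml

summary = (h1.tag, h1.text, h1.attrib["class"])
print(summary)
```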

In particular:

1. For tutorials, a good reference is:

2. For the API, good references are:

Python XML processing with lxml

http://lxml.de/FAQ.html#xpath-and-document-traversal

When reposting, please credit: 在路上 » 【Record】Trying lxml to parse HTML in Python