【Background】

Someone else ran into a problem earlier:

Using R, they were scraping an unusual web page

whose HTML contains two charset declarations:

```html
<head>
  <meta http-equiv="Content-Type" content="text/html; charset=gb2312" />
  <meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
  <meta http-equiv="pragma" content="no-cache"/>
  <meta http-equiv="cache-control" content="no-cache"/>
```
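To see which charsets a page declares, and in what order, the charset=... tokens can be listed with base R regular expressions. This is a minimal sketch: the helper name declared_charsets is hypothetical, and it only scans for charset tokens rather than doing a real HTML parse.

```r
# Hypothetical helper: list every charset=... declared in a chunk of HTML,
# in document order, lower-cased. Regex-based, not a real HTML parser.
declared_charsets <- function(html) {
  pattern <- "charset\\s*=\\s*[\"']?[A-Za-z0-9_.:-]+"
  hits <- regmatches(html, gregexpr(pattern, html, ignore.case = TRUE, perl = TRUE))[[1]]
  # Strip the leading "charset=" (and an optional opening quote)
  tolower(sub("^charset\\s*=\\s*[\"']?", "", hits, ignore.case = TRUE, perl = TRUE))
}

head_html <- paste(
  '<meta http-equiv="Content-Type" content="text/html; charset=gb2312" />',
  '<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />'
)
print(declared_charsets(head_html))
```

For this page, the sketch reports two conflicting declarations. A parser has to pick one of them, and which one it picks is exactly what the rest of this post is trying to pin down.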

The result came out garbled:

```r
> temp <- getURL("http://www.yiteng365.com/commodity.do?id=5708&ispng=")
> k <- htmlParse(temp, asText = TRUE, encoding = 'gbk')
> a <- sapply(getNodeSet(doc = k, path = "//div[@class = 'goodsname']"), xmlValue)
> a
[1] "脜漏路貌脡陆脠陋(脝脮脥篓赂脟)550ml"
```

(XPath is used to locate and extract the product name.)

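By contrast, the same code works fine on a page from 1号店 (yhd.com):

```r
> temp <- getURL("http://item.yhd.com/item/1989388?ref=1_1_51_search.keyword_1")
> product_name <- sapply(getNodeSet(doc = k, path = "//h1[@class = 'prod_title' and @id = 'productMainName']"), xmlValue)
> product_name
[1] "丝蓓绮奢耀焕活洗发露750ml(资生堂授权特供) "
```

(The htmlParse line that rebuilds k from this page's temp is not shown in the quoted transcript.)

So I went to try R myself.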

【Trial and error】

1. Find the homepage:

Go and download it:

http://cran.r-project.org/mirrors.html

->

http://mirrors.ustc.edu.cn/CRAN/

->

http://mirrors.ustc.edu.cn/CRAN/bin/windows/base/

->

Wait for the download to finish...

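2. Meanwhile, find out what R is:

R is a language and environment for statistical computing and graphics.

3. Once R-3.0.2-win.exe has downloaded, install it.

4. Then carry on: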

Double-click the desktop shortcut to run it:

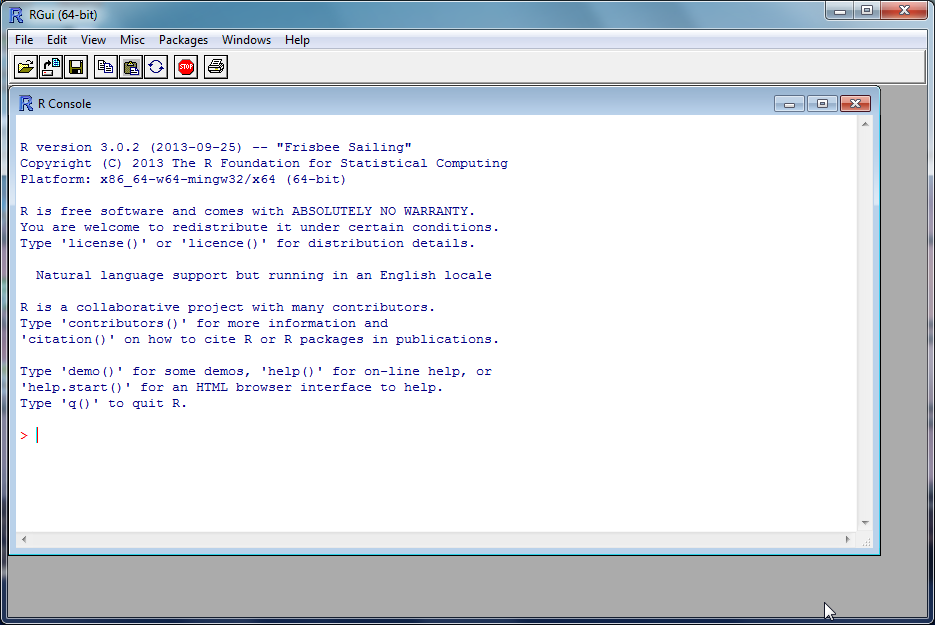

Once it starts, the GUI window appears.

5. Next, figure out whether htmlParse, used above,

is built into R or comes from a third-party library, so search for:

htmlParse R language

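Reference:

R语言读取淘宝的单品页的名称和价格 (Reading the name and price of a Taobao product page in R) – R中国用户组-炼数成金-Dataguru专业数据分析社区

It turns out to be a function from the XML package, which is an add-on package from CRAN rather than part of base R.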

So I tried the code directly, and it failed:

【Solved】Error running R: Error: could not find function "getURL"

Then I kept going.

6. Next, htmlParse could not be found either, so I solved that too:

【Solved】R error: Error: could not find function "htmlParse"
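Both errors mean that the package providing the function is not loaded: getURL comes from RCurl and htmlParse from XML, and neither ships with base R. Below is a small sketch with a hypothetical helper, missing_packages, that reports which of the needed packages are not installed. The install and library calls are left as comments because they need network access.

```r
# Hypothetical helper: return the subset of 'pkgs' that cannot be loaded.
# requireNamespace() returns FALSE (instead of raising an error) for a missing package.
missing_packages <- function(pkgs) {
  ok <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  pkgs[!ok]
}

needed <- c("XML", "RCurl")
print(missing_packages(needed))

# With network access, one would then run something like:
# todo <- missing_packages(needed)
# if (length(todo) > 0) install.packages(todo)
# library(XML)    # htmlParse(), getNodeSet(), xmlValue()
# library(RCurl)  # getURL()
```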

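7. The obvious next step:

find out how to view the syntax of htmlParse in the newly installed XML package,

i.e. locate its help manual and read the function description.

Postscript:

Later I found that all it takes is typing the following in RGui:

```r
> help("htmlParse")
starting httpd help server ... done
```

which opens the help documentation.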

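8. Continue testing:

```r
> productTitle = sapply(getNodeSet(doc = parsedHtml, path="//div[class='goodsname']"), xmlValue)
> productTitle
list()
>
```

Clearly wrong: the result is empty. The XPath is missing the @. //div[class='goodsname'] selects div elements that have a child element named class, whereas //div[@class='goodsname'] tests the class attribute.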

9. Retry with the original poster's code:

Sure enough, garbled:

```r
> required("XML")
Error: could not find function "required"
> require("XML")
Loading required package: XML
> require("RCurl")
Loading required package: RCurl
Loading required package: bitops
> temp <- getURL("http://www.yiteng365.com/commodity.do?id=5708&ispng=")
> k <- htmlParse(temp, asText = TRUE, encoding = 'gbk')
> a <- sapply(getNodeSet(doc = k, path = "//div[@class = 'goodsname']"), xmlValue)
> a
[1] "农夫山泉(普通盖)550ml"
>
```

Next: track down the root cause of the garbled text and fix it.

Here is how htmlParse documents its encoding parameter:

encoding: a character string (scalar) giving the encoding for the document. This is optional as the document should contain its own encoding information. However, if it doesn't, the caller can specify this for the parser. If the XML/HTML document does specify its own encoding that value is used regardless of any value specified by the caller. (That's just the way it goes!) So this is to be used as a safety net in case the document does not have an encoding and the caller happens to know the actual encoding.

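In other words:

if the HTML itself already specifies an encoding

(as it does here, except that it has two charsets: the first is GB2312, the second UTF-8),

then the encoding declared inside the HTML is always used,

and the value passed by the caller (here, the GBK passed in by our code) is ignored.

So clearly:

the designer of htmlParse made a brain-dead decision,

treating every caller as a fool:

even when the caller specifies the correct encoding for the HTML,

the parser still uses the wrong encoding that the HTML declares for itself (here presumably the second charset, UTF-8),

which produces the garbled text.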

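10. Having found the cause, the next step was to see whether this (arguable) bug could be fixed or worked around.

There is also an isHTML parameter:

isHTML: a logical value that allows this function to be used for parsing HTML documents. This causes validation and processing of a DTD to be turned off. This is currently experimental so that we can implement htmlParse with this same function.

(Judging from this description, htmlParse is itself the isHTML mode of the parser, so passing isHTML=TRUE again is unlikely to change anything.)

I tried it anyway; still no luck:

```r
> k <- htmlParse(temp, asText = TRUE, encoding = 'gbk', isHTML=TRUE)
> a
[1] "农夫山泉(普通盖)550ml"
>
```

11. Try dropping asText:

Still no luck:

```r
> k <- htmlParse(temp, encoding = 'gbk', isHTML=TRUE)
> a
[1] "农夫山泉(普通盖)550ml"
```

Note that in both attempts a is printed without re-running the sapply(getNodeSet(...)) line, so the printed value still comes from the earlier k, not from the newly parsed one.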
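One way to test the two-charset hypothesis directly is to remove the conflicting UTF-8 declaration from the HTML text before parsing, so that only the GB2312 one remains. This is a minimal sketch with a hypothetical helper, drop_charset_meta. As Postscript 1 below finds, temp is already garbled before htmlParse ever sees it, so this step alone is not expected to fix the output.

```r
# Hypothetical helper: delete every <meta ...> tag whose charset equals 'charset'.
# 'charset' is inserted into the regular expression unescaped, so it should be a
# plain name such as "utf-8".
drop_charset_meta <- function(html, charset) {
  pattern <- sprintf("<meta[^>]*charset\\s*=\\s*[\"']?%s[\"']?[^>]*>", charset)
  gsub(pattern, "", html, ignore.case = TRUE, perl = TRUE)
}

head_html <- paste(
  '<meta http-equiv="Content-Type" content="text/html; charset=gb2312" />',
  '<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />'
)
cleaned <- drop_charset_meta(head_html, "utf-8")
print(cleaned)
# The cleaned text could then be parsed, e.g.:
# k <- htmlParse(cleaned, asText = TRUE, encoding = "GBK")
```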

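【Summary】

Forget it, I can't be bothered to dig any further.

For now, with the special page above that contains two charsets:

http://www.yiteng365.com/commodity.do?id=5708&ispng=

parsing it with htmlParse from R's XML package

still gives garbled text, even though the correct GBK (or GB2312) encoding is specified.

The reason:

htmlParse's implementation is a bit perverse.

If the HTML specifies an encoding (via charset), it ignores the encoding argument you pass in.

As a result:

this special page contains two charsets, and htmlParse wrongly uses the second one, UTF-8, so text that is actually GB2312/GBK gets decoded as UTF-8 and comes out garbled.

Workaround:

None for now. There is no way around this perverse design.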

【Postscript 1】

1. Later, however, by typing k and temp in RGui, I found that

temp itself is already garbled:

```
[1] "\r\n\r\n\r\n\r\n\r\n<!DOCTYPE html PUBLIC \"-//W3C//DTD XHTML 1.0 Transitional//EN\" \"http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd\">\r\n<html xmlns=\"http://www.w3.org/1999/xhtml\">\r\n<head>\r\n <meta http-equiv=\"Content-Type\" content=\"text/html; charset=gb2312\" />\r\n <meta http-equiv=\"Content-Type\" content=\"text/html; charset=utf-8\" />\r\n\t<meta http-equiv=\"pragma\" content=\"no-cache\"/>\r\n\t<meta http-equiv=\"cache-control\" content=\"no-cache\"/>\r\n <meta name=\"keywords\" content=\"³É¶¼ ÒÁÌÙ Ñó»ªÌà ÍøÂç ³¬ÊÐ Íø¹º ÔÚÏß¹ºÎï ËÍ»õÉÏÃÅ »õµ½¸¶¿î\"/>\r\n <meta name=\"description\" content=\"×÷ΪÒÁÌÙÑó»ªÌÃʵÌ峬ÊеÄÑÓÉ죬ΪÄúÌṩ·½±ã¡¢¿ì½Ý¡¢¸ßÆ·ÖʵÄÍøÂ繺Îï·þÎñ£¬³Ð½Ó³É¶¼ÈƳǸßËÙ¹«Â·ÄÚµÄÅäËÍÒµÎñ£¬»õµ½¸¶¿î¼°3»·ÄÚ½ð¶î³¬¹ý100ÃâÊÕÅäËÍ·Ñ\"/>\r\n <title>Å©·òɽȪ(ÆÕͨ¸Ç)550ml-ÒÁÌÙÑó»ªÌÃ-ÍøÂ糬ÊÐ</title>\r\n <link href=\"/commodityNew/css/commodity.css\" rel=\"stylesheet\" type=\"text/css\" />\r\n <link href=\"/public/css/style.css\" rel=\"stylesheet\"
```
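The garbled text in temp has the typical shape of GBK bytes being displayed as Latin-1 (ISO-8859-1). In GBK, 农 is the two bytes C5 A9, and read as Latin-1 those bytes are Å and ©, which is exactly how the title above begins (Å©·òɽȪ for 农夫山泉). Below is a small base-R check of that reading, using hard-coded GBK bytes for 农夫山泉 rather than the live page.

```r
# GBK bytes of the product-name prefix "农夫山泉", hard-coded for the demo
gbk_bytes <- as.raw(c(0xc5, 0xa9, 0xb7, 0xf2, 0xc9, 0xbd, 0xc8, 0xaa))

# Misreading: label the same bytes as Latin-1
as_latin1 <- rawToChar(gbk_bytes)
Encoding(as_latin1) <- "latin1"
print(as_latin1)   # same characters as the start of the garbled <title>

# Correct reading: decode the bytes as GBK (iconv accepts a list of raw vectors)
as_gbk <- iconv(list(gbk_bytes), from = "GBK", to = "UTF-8")
print(as_gbk)
```

If this reading is right, the bytes from the server are fine and only their labelling is wrong. That points at the download step rather than at htmlParse.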

So it looks like the getURL call at the very start needs a closer look.

2. So look at its syntax in the local help page:

http://127.0.0.1:28708/library/RCurl/html/getURL.html

getURL {RCurl}: Download a URI

Description

These functions download one or more URIs (a.k.a. URLs). It uses libcurl under the hood to perform the request and retrieve the response. There are a myriad of options that can be specified using the … mechanism to control the creation and submission of the request and the processing of the response.

The request supports any of the facilities within the version of libcurl that was installed. One can examine these via

Usage

```r
getURL(url, ..., .opts = list(),
       write = basicTextGatherer(.mapUnicode = .mapUnicode),
       curl = getCurlHandle(), async = length(url) > 1,
       .encoding = integer(), .mapUnicode = TRUE)

getURI(url, ..., .opts = list(),
       write = basicTextGatherer(.mapUnicode = .mapUnicode),
       curl = getCurlHandle(), async = length(url) > 1,
       .encoding = integer(), .mapUnicode = TRUE)

getURLContent(url, ..., curl = getCurlHandle(.opts = .opts), .encoding = NA,
              binary = NA, .opts = list(...),
              header = dynCurlReader(curl, binary = binary,
                                     baseURL = url, isHTTP = isHTTP,
                                     encoding = .encoding),
              isHTTP = length(grep('^[[:space:]]*http', url)) > 0)
```

Arguments

url: a string giving the URI

...: named values that are interpreted as CURL options governing the HTTP request.

.opts: a named list or

write: if explicitly supplied, this is a function that is called with a single argument each time the HTTP response handler has gathered sufficient text. The argument to the function is a single string. The default argument provides both a function for cumulating this text and is then used to retrieve it as the return value for this function.

curl: the previously initialized CURL context/handle which can be used for multiple requests.

async: a logical value that determines whether the download request should be done via asynchronous, concurrent downloading or a serial download. This really only arises when we are trying to download multiple URIs in a single call. There are trade-offs between concurrent and serial downloads, essentially trading CPU cycles for shorter elapsed times. Concurrent downloads reduce the overall time waiting for

.encoding: an integer or a string that explicitly identifies the encoding of the content that is returned by the HTTP server in its response to our query. The possible strings are 'UTF-8' or 'ISO-8859-1' and the integers should be specified symbolically as

.mapUnicode: a logical value that controls whether the resulting text is processed to map components of the form \uxxxx to their appropriate Unicode representation.

binary: a logical value indicating whether the caller knows whether the resulting content is binary (

header: this is made available as a parameter of the function to allow callers to construct different readers for processing the header and body of the (HTTP) response. Callers specifying this will typically only adjust the call to The caller can specify a value of

isHTTP: a logical value that indicates whether the request an HTTP request. This is used when determining how to process the response.

So try that .encoding parameter:

```r
> temp <- getURL("http://www.yiteng365.com/commodity.do?id=5708&ispng=", .encoding="GBK")
```

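The returned temp is still garbled... This is consistent with the help text above: .encoding only accepts 'UTF-8' or 'ISO-8859-1' (or the corresponding integer constants), and GBK is not among them.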
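Since .encoding cannot be set to GBK, one possible repair happens after the download. This rests on an assumption not verified against this page: that the string returned by getURL still holds the server's original GBK bytes and has only been labelled Latin-1. In that case charToRaw, which ignores the declared encoding, gives those bytes back so they can be decoded as GBK. Below is a sketch with a hypothetical helper, repair_gbk, shown on a locally built string.

```r
# Hypothetical helper: reinterpret the raw bytes of a mis-labelled string as GBK.
# Assumes the bytes were not re-encoded (e.g. to UTF-8) when the string was built.
repair_gbk <- function(x) {
  vapply(x, function(s) iconv(list(charToRaw(s)), from = "GBK", to = "UTF-8"),
         character(1), USE.NAMES = FALSE)
}

# Simulate a getURL result: GBK bytes for "农夫山泉" labelled as Latin-1
garbled <- rawToChar(as.raw(c(0xc5, 0xa9, 0xb7, 0xf2, 0xc9, 0xbd, 0xc8, 0xaa)))
Encoding(garbled) <- "latin1"
fixed <- repair_gbk(garbled)
print(fixed)
# On the real page this would be: temp_fixed <- repair_gbk(temp)
```

If the assumption does not hold, iconv returns NA for the affected strings rather than silently producing wrong text.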

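3. Then try:

```r
> temp2 <- getURL("http://www.yiteng365.com/commodity.do?id=5708&ispng=", encoding="GB2312")
```

Same problem. Note that encoding (without the leading dot) is not getURL's .encoding argument: it goes through ... as a CURL option. The next step warns only about binary, so encoding was evidently accepted as a libcurl option. Most likely this is libcurl's option of that name, which sets the accepted content (compression) encodings, not the character set.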

4. Adding binary doesn't help either:

```r
> temp4 <- getURL("http://www.yiteng365.com/commodity.do?id=5708&ispng=", encoding="GB2312", binary=TRUE)
Warning message:
In mapCurlOptNames(names(.els), asNames = TRUE) :
  Unrecognized CURL options: binary
> temp4
[1] ".................\r\n <meta http-equiv=\"Content-Type\" content=\"text/html; charset=gb2312\" />\r\n <meta http-equiv=\"Content-Type\" content=\"text/html; charset=utf-8\" />\r\n\t<meta http-equiv=\"pragma\" content=\"no-cache\"/>\r\n\t<meta http-equiv=\"cache-control\" content=\"no-cache\"/>\r\n <meta name=\"keywords\" content=\"³É¶¼ ÒÁÌÙ Ñó»ªÌà ÍøÂç ³¬ÊÐ Íø¹º ÔÚÏß¹ºÎï ËÍ»õÉÏÃÅ »õµ½¸¶¿î\"/>\r\n <meta name=\"description\" content=\"×÷ΪÒÁÌÙÑó»ªÌÃʵÌ峬ÊеÄÑÓÉ죬ΪÄúÌṩ·½±ã¡¢¿ì½Ý¡¢¸ßÆ·ÖʵÄÍøÂ繺Îï·þÎñ£¬³Ð½Ó³É¶¼ÈƳǸßËÙ¹«Â·ÄÚµÄÅäËÍÒµÎñ£¬»õµ½¸¶¿î¼°3»·ÄÚ½ð¶î³¬¹ý100ÃâÊÕÅäËÍ·Ñ\"/>\r\n <title>Å©·òɽȪ(ÆÕͨ¸Ç)550ml-ÒÁÌÙÑó»ªÌÃ-ÍøÂ糬ÊÐ</title>\r\n <.......\n"
>
```

The warning explains why: binary is an argument of getURLContent, not of getURL (see the usage above), so getURL forwarded it as an unknown CURL option and it had no effect.
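Since binary belongs to getURLContent rather than getURL, another route is to ask RCurl for the raw response bytes and do the GBK decoding in R. This route has not been tested against this page. The network calls are left as comments; the runnable part is a hypothetical helper, decode_gbk, checked on hard-coded bytes.

```r
# Hypothetical helper: decode a raw vector of GBK-encoded bytes into a UTF-8 string.
# sub = "?" replaces bytes that are not valid GBK instead of returning NA.
decode_gbk <- function(bytes) {
  iconv(list(bytes), from = "GBK", to = "UTF-8", sub = "?")
}

# Offline check on hard-coded GBK bytes for "农夫"
print(decode_gbk(as.raw(c(0xc5, 0xa9, 0xb7, 0xf2))))

# Sketch of the network part (needs RCurl; not tested against this page):
# library(RCurl)
# raw_page <- getURLContent("http://www.yiteng365.com/commodity.do?id=5708&ispng=",
#                           binary = TRUE)   # raw bytes, no text decoding
# html <- decode_gbk(raw_page)
# The decoded text could then go to htmlParse(html, asText = TRUE); how htmlParse
# then treats the page's own charset meta tags was not checked.
```

The design choice is to keep the bytes untouched until R itself decodes them. That way neither the HTTP layer nor the parser gets a chance to guess the charset.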

【Summary】

Even with the encoding parameter set to GBK or GB2312, getURL still returns garbled text.

No solution for now.

Please credit when reposting: 在路上 » 【Record】Trying to use R to scrape web pages and extract information